TL;DR

Istio is an open-source service mesh that can layer over applications. Studying CVE-2021-34824 in Istio will allow us to dive into some concepts of Istio and service meshes in general. We will also learn how to debug Istio and navigate its code to understand and find vulnerabilities.

Istio is an open-source service mesh that can layer over applications. It’s a very popular project that has more than 30k stars on GitHub. Istio takes care of the control-plane, data-plane, service-to-service authentication and load balancing, letting the application code focus on its main objective: the application’s features. Istio’s control-plane runs on Kubernetes.

“An Istio service mesh is logically split into a data plane and a control plane.

The data plane is composed of a set of intelligent proxies (Envoy) deployed as sidecars. These proxies mediate and control all network communication between microservices. They also collect and report telemetry on all mesh traffic.

The control plane manages and configures the proxies to route traffic.”

Quote from (https://istio.io/latest/docs/ops/deployment/architecture/)

In this article, we will dive into CVE-2021-34824. This CVE was disclosed by a research team at Sopra Banking Software (Nishant Virmani, Stephane Mercier and Antonin Nycz) and John Howard (Google). The impact score of this vulnerability is high (8.8); it allows Istio Gateways to load private keys and certificates from Kubernetes secrets in other namespaces.

Studying this vulnerability will allow us to dive into some concepts of Istio and service meshes in general. We will also learn how to debug Istio and navigate its code to understand vulnerabilities.

We will first explain Istio’s main concepts and Istio Gateways and then describe how to reproduce the bug and analyze the behavior and the fix.

Main Concepts of Istio

Istio is a service mesh. This means it is a dedicated infrastructure layer that controls communication between services over the network. This enables developing an app in separate parts that communicate with each other over the network.

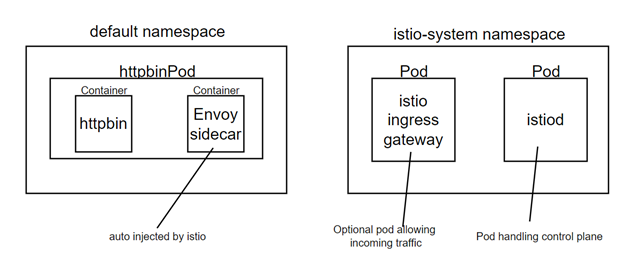

As a service mesh, Istio takes care of the control plane and the data plane. When you run a clean install of Istio with the default profile, you get the following pods in your Kubernetes cluster:

- Ingress gateway: This is a pod that routes requests from outside of your cluster to pods that are inside of it.

- istiod: This is the core of Istio. It runs a program called pilot-discovery which will take care of the control plane of your service mesh.

Istio works with a program called Envoy. Envoy is an open source edge/middle/service proxy. It runs as a sidecar proxy, so every pod of your application runs two containers: your application’s container and the sidecar proxy. This proxy takes care of the data plane by forwarding your requests to the right node, the right pod and the right service. Istio can automatically inject Envoy sidecar containers into all your app’s pods once you run the following command:

kubectl label namespace default istio-injection=enabled

This will automatically inject a sidecar container to every pod that is created in the default namespace.

Figure 1: Basic infrastructure example using Istio

You can test this feature by deploying the httpbin app, which is available in Istio’s examples. It runs a simple HTTP server that we will be able to query for our tests.

Discovery Service (XDS)

By default, Envoy learns about the different resources (services, pods, clusters …) available in your cluster through static configuration. However, you can provide this information dynamically by using an Envoy service called XDS (X Discovery Service, where X stands for cluster, service, secret, listener …).

When your app needs to talk to a service, it will send the packet to the sidecar proxy. The sidecar proxy will ask an XDS server for the service (and which IP it should reach). The XDS server will send a response, and the sidecar will send the packet to the given IP. The part of the XDS server that is interesting for us in this post is the SDS service, which handles secrets (mainly certificates in Istio).

In Istio, the XDS server is implemented inside the istiod pod. Istio adds some features to the default XDS API specification, such as secrets caching.
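As a rough mental model only, the lookup described above can be pictured as a registry that maps a service name to the IPs serving it. The sketch below is not Envoy’s or Istio’s API: the class names, the method names and the IP address are all hypothetical, and a real proxy would load-balance rather than take the first endpoint.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ToyDiscoveryServer:
    """Hypothetical stand-in for a discovery server: service name -> endpoint IPs."""
    endpoints: Dict[str, List[str]] = field(default_factory=dict)

    def register(self, service: str, ip: str) -> None:
        self.endpoints.setdefault(service, []).append(ip)

    def resolve(self, service: str) -> List[str]:
        # Return a copy so callers cannot mutate the registry.
        return list(self.endpoints.get(service, []))


@dataclass
class ToySidecar:
    """Hypothetical sidecar: asks the discovery server where to send a request."""
    discovery: ToyDiscoveryServer

    def pick_endpoint(self, service: str) -> str:
        candidates = self.discovery.resolve(service)
        if not candidates:
            raise LookupError(f"no endpoint known for {service!r}")
        # Simplification: always take the first endpoint.
        return candidates[0]


server = ToyDiscoveryServer()
server.register("httpbin", "10.0.0.12")  # made-up pod IP
sidecar = ToySidecar(server)
print(sidecar.pick_endpoint("httpbin"))
```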

Gateways

Now that we have a service mesh running with sidecar proxies, we need to make it accessible from the outside world. For now, our httpbin pod is only accessible from inside our cluster. To make it reachable from the outside world, we need to create a Gateway. Gateway is an Istio feature that is very similar to Kubernetes’ native Ingress, with some more advanced features such as advanced routing rules, rate limiting or metrics collection.

Gateway creation is pretty easy and described here. If we focus on the actual gateway creation, we see that we create two different resources in Kubernetes — a Gateway and a VirtualService — that are specific to Istio.

The Gateway resource lets our application use Istio’s ingress gateway (created by the default installation of Istio). In Istio’s example, it listens on port 80 of the ingress gateway for the hostname “httpbin.example.com” using the HTTP protocol.

Now that we have a working Gateway, the VirtualService connects it to the app by defining routing rules. In our case, the VirtualService forwards requests whose path matches the prefix /status or /delay to port 8000 of our httpbin service.

Note that this whole architecture is based on Istio’s ingress gateway, which was created in the istio-system namespace when installing Istio. To reproduce the vulnerability, we will need to create another ingress gateway in another namespace.
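To make the routing rule concrete, here is a minimal sketch of prefix matching, assuming a simple "first matching prefix wins" table. It only illustrates the idea. It is not how Envoy evaluates routes, and the ROUTES table and route function are hypothetical names.

```python
from typing import List, Optional, Tuple

# Hypothetical route table mirroring the example: path prefix -> (host, port).
ROUTES: List[Tuple[str, Tuple[str, int]]] = [
    ("/status", ("httpbin", 8000)),
    ("/delay", ("httpbin", 8000)),
]


def route(path: str) -> Optional[Tuple[str, int]]:
    """Return the destination of the first matching prefix, or None if nothing matches."""
    for prefix, destination in ROUTES:
        if path.startswith(prefix):
            return destination
    return None


print(route("/status/418"))  # matches the /status prefix
print(route("/headers"))     # no rule matches, so the request is not routed
```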

CVE-2021-34824

Description

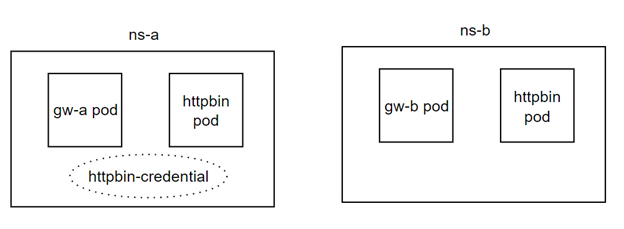

According to Kubernetes, a pod should only get access to secrets in its own namespace, unless a specific RBAC rule states otherwise (source). In the case of this vulnerability, we have two Istio Gateways in two different namespaces: gw-a in ns-a and gw-b in ns-b. To serve traffic over TLS, gw-a has access to a certificate called https-secret, stored as a Kubernetes secret in ns-a. The vulnerability allows gw-b to access https-secret, although it should not be authorized to because gw-b is in ns-b.

Figure 2: Architecture for reproducing the bug

This is possible because the gateway container gets the secret by querying the SDS (the part of the XDS server that is responsible for secrets). The SDS also uses a cache to provide faster responses. A bug in this cache’s implementation is what made the vulnerability possible.

Reproducing the vulnerability

To reproduce the vulnerability, we need to create two namespaces:

kubectl create namespace ns-a
kubectl create namespace ns-b

We then need to create Istio operators for our gateways inside ns-a and ns-b.

apiVersion: install.istio.io/v1alpha1
kind: IstioOperator
metadata:
  name: gw-a
spec:
  profile: empty # Do not install CRDs or the control plane
  components:
    ingressGateways:
    - name: gateway-a
      namespace: ns-a
      enabled: true
      label:
        # Set a unique label for the gateway. This is required to ensure Gateways
        # can select this workload
        istio: gw-a
  values:
    gateways:
      istio-ingressgateway:
        # Enable gateway injection
        injectionTemplate: gateway

We can apply the same yaml file for the second gateway by replacing gw-a and ns-a with gw-b and ns-b. Make sure to deploy them using istioctl:

istioctl install -f gwa.yaml

You now have two gateways deployed in two different namespaces. You can check them by looking at the list of pods in your namespaces.

You can then deploy the httpbin app in both namespaces to perform our tests (using the sample yaml file in Istio’s install folder).

Let’s now create our gateways and virtual services to link the apps to the gateways:

apiVersion: networking.istio.io/v1alpha3
kind: Gateway
metadata:
  name: gw-a
spec:
  selector:
    istio: gw-a
  servers:
  - port:
      number: 443
      name: https
      protocol: HTTPS
    tls:
      mode: SIMPLE
      credentialName: https-secret
    hosts:
    - httpbin.example.com

Note: Make sure to specify the right namespace when applying the yaml files.

With httpbin deployed in both namespaces (you can use the files from Istio’s samples), we now link each instance to its gateway with a VirtualService:

apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: httpbin
spec:
  hosts:
  - "httpbin.example.com"
  gateways:
  - gw-a
  http:
  - match:
    - uri:
        prefix: /status
    - uri:
        prefix: /delay
    route:
    - destination:
        port:
          number: 8000
        host: httpbin

We now have our apps and gateways up and running. We need to create the secret as shown in this link. Make sure to call it https-secret when loading it into Kubernetes and put it only in ns-a.

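Finally, to check that the secret was successfully loaded into gw-b, you can run the following commands. They assume that the gateway Service in ns-b is named gw-b; adjust the name if your Service is called something else.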

export SECURE_INGRESS_PORT=$(kubectl -n ns-b get service gw-b -o jsonpath='{.spec.ports[?(@.name=="https")].nodePort}')

export INGRESS_HOST=$(minikube ip)

curl -v -HHost:httpbin.example.com --resolve "httpbin.example.com:$SECURE_INGRESS_PORT:$INGRESS_HOST" --cacert example.com.crt "https://httpbin.example.com:$SECURE_INGRESS_PORT/status/418"

You should get a status 418 response. The TLS handshake succeeds, which means gw-b is serving the certificate stored in ns-a, although that certificate should not be accessible from this gateway.

CVE Analysis

This vulnerability exists because Istio implements an SDS cache so that secrets load faster. In versions 1.10.0 and below, the cache key consisted only of the name of the secret. Thus, a secret could be retrieved by any gateway from any namespace.
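The effect of a name-only key can be shown with a toy cache. This is a simplified sketch, not Istio’s code: the class, the in-memory store and the secret value are hypothetical, and it assumes the cache is consulted before any namespace check takes place.

```python
class NameOnlySecretCache:
    """Toy cache keyed only by secret name (illustrates the flaw, not Istio's code)."""

    def __init__(self, store):
        self._store = store  # {(namespace, name): secret}, stands in for Kubernetes
        self._cache = {}     # {name: secret}, the namespace is missing from the key

    def get(self, requester_namespace, name):
        if name in self._cache:
            # Cache hit: the requester's namespace is never consulted.
            return self._cache[name]
        secret = self._store.get((requester_namespace, name))
        if secret is not None:
            self._cache[name] = secret
        return secret


store = {("ns-a", "https-secret"): "cert-and-key-for-ns-a"}
cache = NameOnlySecretCache(store)

first = cache.get("ns-a", "https-secret")   # legitimate request, fills the cache
leaked = cache.get("ns-b", "https-secret")  # request from another namespace
print(leaked)
```

In this sketch the second call returns the secret that belongs to ns-a, because nothing in the key distinguishes the two requesters.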

The fix was made in this commit, which adds the resource type and the namespace to the secret’s cache key. The code carrying the fix has since been moved to another place in the codebase.
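A minimal sketch of the idea behind the fix, assuming a composite key made of resource type, namespace and name. The SecretCacheKey and NamespacedSecretCache names, the "secret" type string and the in-memory store are hypothetical; the real key lives in Istio’s Go code and may carry more fields.

```python
from typing import Dict, NamedTuple, Optional, Tuple


class SecretCacheKey(NamedTuple):
    """Hypothetical composite key: two namespaces can no longer share an entry."""
    resource_type: str
    namespace: str
    name: str


class NamespacedSecretCache:
    def __init__(self, store: Dict[Tuple[str, str], str]) -> None:
        self._store = store  # {(namespace, name): secret}, stands in for Kubernetes
        self._cache: Dict[SecretCacheKey, str] = {}

    def get(self, resource_type: str, requester_namespace: str, name: str) -> Optional[str]:
        key = SecretCacheKey(resource_type, requester_namespace, name)
        if key in self._cache:
            return self._cache[key]
        secret = self._store.get((requester_namespace, name))
        if secret is not None:
            self._cache[key] = secret
        return secret


store = {("ns-a", "https-secret"): "cert-and-key-for-ns-a"}
cache = NamespacedSecretCache(store)

print(cache.get("secret", "ns-a", "https-secret"))  # served to the owning namespace
print(cache.get("secret", "ns-b", "https-secret"))  # None: no entry for ns-b
```

Because the namespace is part of the key, a request from ns-b can never hit an entry that was filled on behalf of ns-a.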

Thanks to this information, I started debugging the application using this link.

I wanted to understand how this part of the code is reached, both to fully understand it and to see whether we could play with the new parameters that are added to the cache key. Since Istio’s codebase is quite big and events are triggered from many places, I decided to debug it by putting a breakpoint on the fix itself, to see exactly when and how it is triggered and to get direct access to the call stack when it happens.

The tough part for me when trying to debug Istio was loading the built docker images into Kubernetes. Indeed, since I was using Minikube in my environment, I faced some issues when using Minikube’s environment variables while building the images.

Thus, the easiest way I found was to push the images I built into Docker hub.

After modifying the Dockerfile.pilot file as shown in the tutorial, I ran all the building commands with the variable: [HUB=<your_docker_hub_id>].

make DEBUG=1 build
make docker HUB="<your_docker_id>" DEBUG=1
make docker.push HUB="<your_docker_id>"

Then install the modified version of Istio:

istioctl install --set hub="<your_docker_id>"

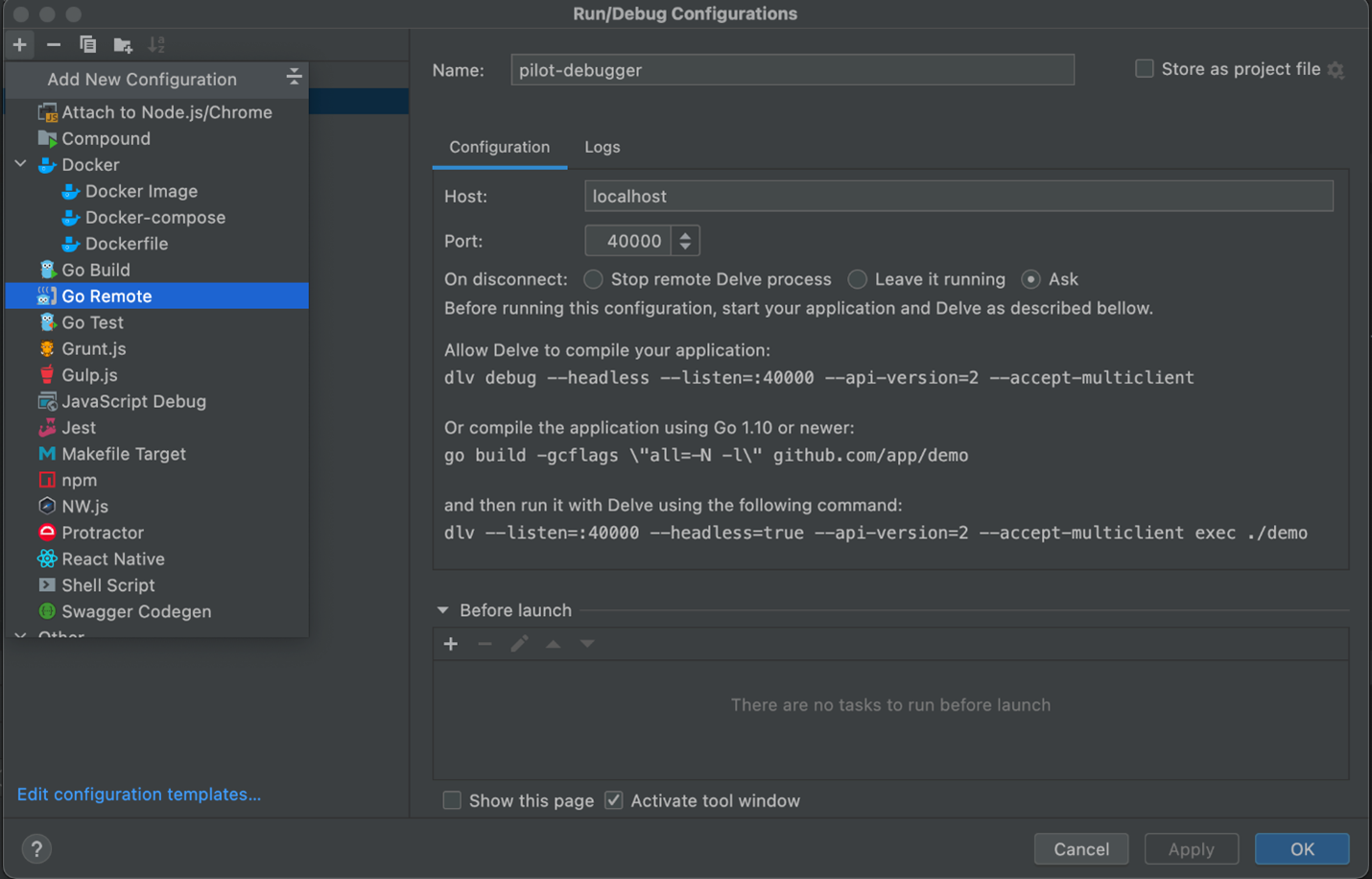

I decided to remotely debug Istio using GoLand as shown in the tutorial.

Figure 3: GoLand configuration for remote debugging pilot

I was able to put a breakpoint at the line of the fix and start playing with it. One thing was surprising at first: when I was reaching the app through the gateway, the breakpoint wasn’t reached. Actually, the breakpoint is hit only when creating or deleting the secret with kubectl or when deploying the Gateway itself.

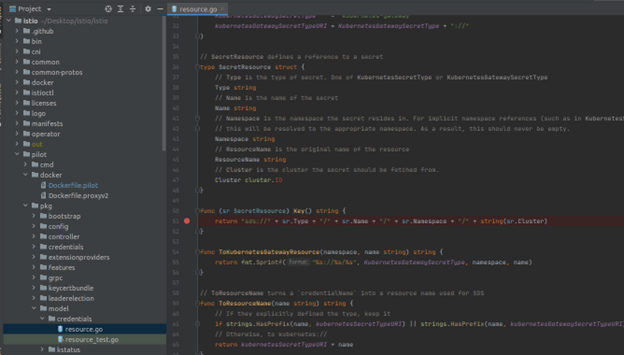

Figure 4: Breakpoint on the vulnerability fix

When creating the Gateway in the wrong namespace, the secret is not reachable, thanks to the fix. While the secret is not reachable, the breakpoint gets hit a lot, every few seconds. The Gateway is actually asking istiod for the secret again and again until it finds it.
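The pattern observed at the breakpoint looks like a retry loop. The sketch below models only that observed pattern, assuming a fixed interval and a bounded number of attempts. It says nothing about how the Gateway and istiod actually exchange secrets, and wait_for_secret and its parameters are hypothetical.

```python
import time
from typing import Callable, Optional


def wait_for_secret(
    fetch: Callable[[], Optional[str]],
    interval_seconds: float = 2.0,
    max_attempts: int = 5,
    sleep: Callable[[float], None] = time.sleep,
) -> Optional[str]:
    """Call fetch() until it returns a secret or the attempts run out."""
    for attempt in range(1, max_attempts + 1):
        secret = fetch()
        if secret is not None:
            return secret
        if attempt < max_attempts:
            sleep(interval_seconds)  # wait before asking again
    return None


# Simulated control plane: the secret only becomes available on the third request.
responses = iter([None, None, "cert-and-key"])
delays = []  # records the waits instead of really sleeping
result = wait_for_secret(lambda: next(responses), sleep=delays.append)
print(result, delays)
```

Passing the sleep function as a parameter keeps the example instant to run, while the default still waits between attempts.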

From this behavior, I concluded that the Gateway stores the secret locally and only retrieves it at Gateway creation. Thus, once the secret is loaded into the Gateway, the fix isn’t reached anymore because the secret stays inside the Gateway.

On a more general note, this bug shows us how important it is to handle caches properly, especially for information as sensitive as secrets. Whenever a cache is involved, it is crucial to verify who can access it, when and how. In our case, any Gateway that knew the name of the certificate could access it. Thanks to the fix, a secret is now only accessible to Gateways in the right namespace and cluster, and only for the right resource type. This fits exactly the security requirements for such a secret because Kubernetes allows, by default, any pod in a given namespace to access the secrets within that namespace.

“Kubernetes Secrets are, by default, stored unencrypted in the API server’s underlying data store (etcd). Anyone with API access can retrieve or modify a Secret, and so can anyone with access to etcd. Additionally, anyone who is authorized to create a Pod in a namespace can use that access to read any Secret in that namespace; this includes indirect access such as the ability to create a Deployment.”

(https://kubernetes.io/docs/concepts/configuration/secret/#details)

Summary and Closing Notes

This vulnerability allowed us to get into the world of Istio and better understand the concepts behind a service mesh and Istio Gateways. We were also able to learn how to edit the Istio codebase and debug it. It allowed us to get a deeper understanding of how Istiod shares certificates with Istio Gateways.

In addition to all the Istio knowledge acquired, this research underlines the importance of handling caches the right way. A cache can be a major source of information leakage when it is implemented poorly. It is crucial to make sure that caches are properly implemented to avoid these types of vulnerabilities.