The pace of technological change is relentless. Not long ago, our migration to the cloud and the automation of CI/CD pipelines dominated the conversation. Now, AI agents are reshaping how we think about automation, productivity, and risk. As we look toward 2026, it’s clear that these intelligent, autonomous systems are not just a passing trend; they are becoming foundational to how businesses operate.

But with great power comes great responsibility—and in the world of cybersecurity, great risk. The more autonomous and interconnected these AI agents become, the larger the attack surface they create. By 2026, we won’t just be experimenting with AI agents; we’ll be relying on them. This shift requires us to think differently about identity, access, and security. It’s not just about machines anymore; the very nature of human identity is also under pressure.

The rise of AI agents as digital coworkers

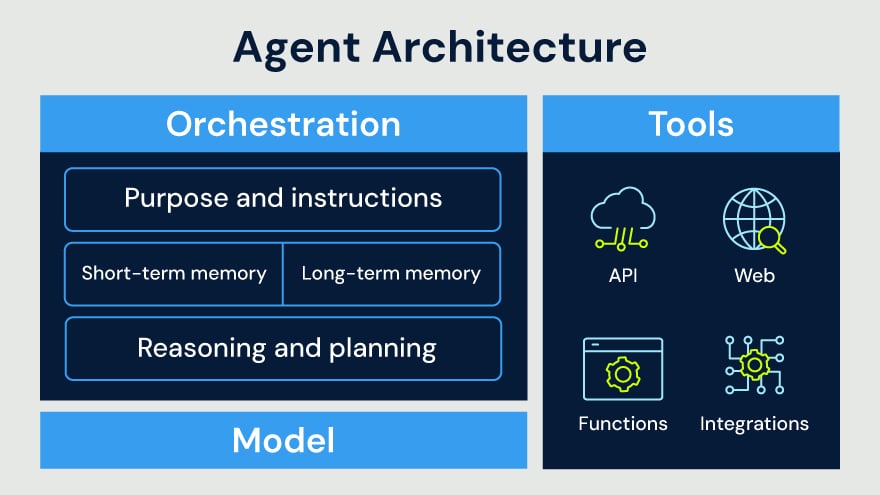

Think of AI agents as a new class of digital coworkers. Unlike traditional automation or bots that follow a rigid script, AI agents can make decisions, learn from their environment, and act autonomously to complete complex tasks. They are composed of three key modules: an orchestration model that defines their task, a tools module that allows them to interact with other resources, and the AI model (LLM) that provides the “brains.”

This modularity allows them to do everything from automating security research to managing customer invoices. Their adoption is accelerating. By 2027, multi-agent environments are expected to be the norm, with the number of agentic systems doubling in just three years.

As organizations unlock the incredible potential of these agents, a critical reality emerges: every AI agent is an identity. It needs credentials to access databases, cloud services, and code repositories. The more tasks we give them, the more entitlements they accumulate, making them a prime target for attackers. The equation is simple: more agents and more entitlements equal more opportunities for threat actors.

New AI agents, new attack vectors

While AI agents share some similarities with traditional machine identities—they need secrets and credentials to function—they also introduce entirely new attack surfaces. We’ve seen this firsthand in our research at CyberArk Labs.

A notable new risk, highlighted by OWASP as “tool misuse,” demonstrates how an attack vector that appears unrelated to identity or permissions can leverage an AI agent’s access to compromise sensitive data. The CyberArk Labs team recently demonstrated this in an attack on an AI agent deployed by a financial services company. Designed to allow vendors to list their recent orders, the agent became vulnerable when an attacker embedded a malicious prompt into the shipping address field of a small order. When a vendor asked the agent to list orders, it ingested the malicious prompt, triggering the exploit.

This case shows how even seemingly innocuous agent capabilities can be weaponized, especially when input filtering and permission boundaries are lacking. Instead of just listing orders, the agent was tricked into using another tool it had access to—the invoicing tool. It then fetched sensitive vendor data like bank account details, added it to an invoice, and sent it to the attacker.

This attack was possible for two reasons:

- Lack of input filtering: The system didn’t sanitize the prompt hidden in the shipping address.

- Excessive permissions: The agent had access to the invoice tool, despite its primary function being to list orders only.

Ultimately, an AI agent’s entitlements define the potential blast radius of an attack. Limiting access isn’t just a best practice; it’s a primary defense against misuse and exploitation.

Human identity risks: New pressures facing enterprise teams in 2026

The challenges of 2026 aren’t limited to non-human identities. The very people tasked with building and securing these systems are facing their own set of identity-related threats. Two key trends stand out:

1. Builders in the crosshairs: Why developers and AI agent builders are prime targets

Threat actors are increasingly targeting the trust placed in developers and builders. The recent Shai-Hulud NPM worm demonstrated how threat actors can compromise developers’ access to NPM packages, spreading info-stealing malware. As organizations accelerate the adoption of AI agents in 2026, their builders will be entrusted with even greater access—making this trust a prime target for threat actors.

An added layer of vulnerability will be driven by the rise of low-code and no-code “vibe coding” platforms, which empower a broader range of organizational builders. This trend highlights the fact that these platforms are often far from enterprise-grade technology, creating new vulnerabilities that attackers are eager to exploit.

2. Cookies under attack: Why session hijacking is on the rise

Attackers know that the path of least resistance is often the most effective. In 2026, we can expect an even greater focus on post-authentication attacks that bypass traditional defenses. For humans, this means targeting browser cookies. By stealing the small bits of data that keep a user logged into a session, an attacker can hijack it entirely, impersonating the user without needing a password or fooling an MFA prompt.

For non-human identities like AI agents and other automated services, the equivalent targets are API keys and access tokens. These are the digital keys to the kingdom. If an attacker gains access to one, they can gain unauthorized access, manipulate data, or disrupt critical operations, often without triggering any alarms. The game is no longer about breaking in; it’s about walking through the front door with stolen keys. While this isn’t a new notion in cybersecurity, it’s more relevant than ever as the attack surface grows with the increasing adoption of AI agents and their automation of environments across the workforce, development, and IT.

Building a resilient security foundation for 2026

As we prepare for a future where AI agents are coworkers and every identity is a potential target, a few key principles can guide our security strategy:

- Discover and identify

You can’t secure what you can’t see. The first step for any organization is to gain visibility into all AI agents operating in their environment, whether they are built in-house or run on third-party platforms. Understanding what agents you have and what they do is foundational. - Secure the access

Once you have visibility, the focus must shift to reducing unnecessary exposure by securing access. Moving toward a model of zero standing privileges (ZSP), where agents are granted access to resources only for the time needed to complete a task, is an effective approach. Securing the credentials and entitlements of every agent is critical to minimizing your attack surface. - Detect and respond

A strong security posture is essential, but you also need the ability to detect and respond to identity-based threats. Effective detection and response rely on monitoring agent activity for anomalous behavior, like unusual access patterns or privilege escalations, that could indicate an attack or a rogue agent. Utilizing Identity Threat Detection and Response (ITDR) capabilities enables organizations to identify, investigate, and contain identity-driven attacks before they escalate. - Embrace a defense-in-depth approach

Securing AI agents isn’t a one-and-done solution. It requires a layered approach that combines traditional machine identity controls with new, in-session controls typically used for human privileged users. Because agents can act like machines one moment and mimic human behavior the next, our security must be just as dynamic.

Rapid innovation will continue to define the cybersecurity landscape well into 2026 and beyond. The organizations that thrive will be those that effectively leverage the power of AI agents while proactively managing the associated risks. By placing identity at the center of our security strategy, we can build a resilient foundation that allows us to innovate with confidence.

Lavi Lazarovitz is vice president of cyber research at CyberArk Labs.

🎧 Listen forward: As we move into 2026 and beyond, is your enterprise prepared for the new risk equation? Before you trust your next AI agent with critical access, pause and press play on the Security Matters podcast episode, “Why agentic AI is changing the security risk equation.” Hear Lavi Lazarovitz unpack how agentic AI is reshaping identity threats and what every enterprise needs to know to stay ahead.