TL;DR

ByteCodeLLM is a new open-source tool that uses local large language models (LLMs) to decompile Python executables. Importantly, it prioritizes data privacy by relying on a local LLM that you can run in any environment, such as old laptops and VMs. ByteCodeLLM is the first decompiler that manages to decompile the latest Python version, 3.13, locally. On older Python versions its success rate is 99%, with an accuracy rate of 70-80% depending on the LLM used.

How did we start?

For the last couple of years, malware researchers around the world have struggled to analyze malware written in Python versions above 3.8 and compiled to bytecode. With a new major Python version released every year, the internal representation and bytecodes change, breaking publicly available tools and reducing decompilation accuracy. Existing decompilers often fail to produce correct and usable code. Almost a year ago, we ran into this limitation ourselves while trying to identify a specific API misuse for malicious purposes as part of a research project. We analyzed dozens of samples to track it down, and nearly half of them were compiled with Python versions newer than 3.8. Existing decompilation tools (uncompyle6 and PyCDC) gave insufficient results, but this time we were in the era of LLMs and asked “Chaty” (a commercial LLM service) to try to do this task for us. Surprisingly, it worked!

However, privacy concerns prevented us from using state-of-the-art commercial cloud-based solutions in our workflow. Instead, we tried to find a local alternative. After a quick search, we found Ollama. We quickly wrote a script that leveraged Ollama and additional open-source resources and stitched it in with some Python code that we wrote — and it worked again!

We extracted better results than with any other alternative that we are aware of, and analysis that would have taken days is now done in minutes. Since then, we’ve improved the script and developed it into a tool we call ByteCodeLLM, which we are happy to release as open source.

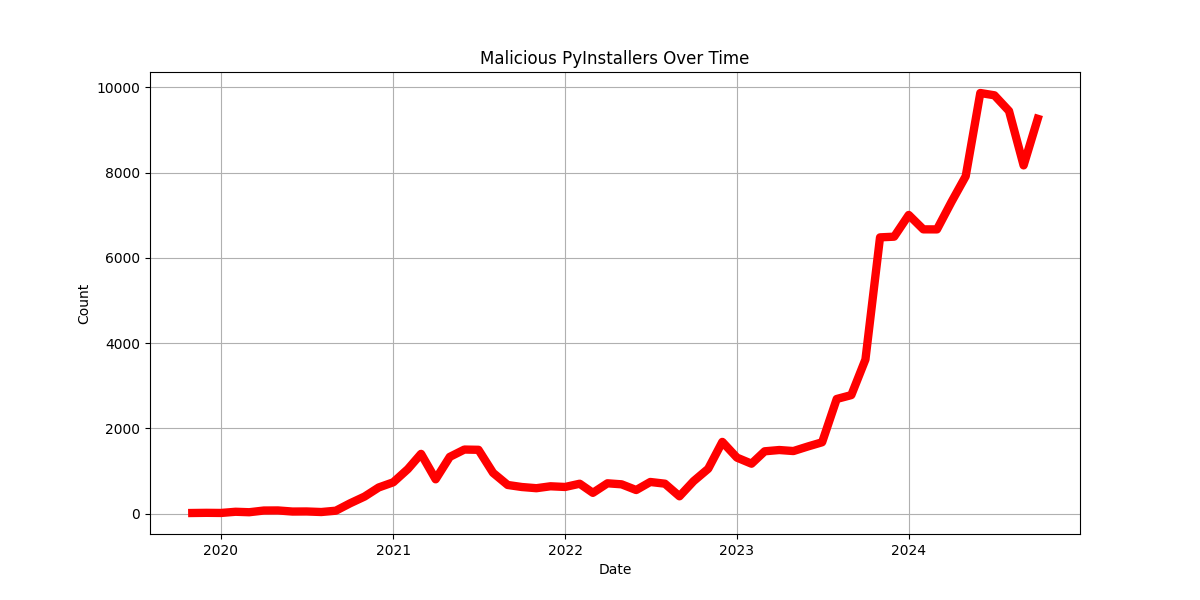

Figure 1: Pyinstaller Malware (10+ Positive Detections) From Virus Total Over Time

ByteCodeLLM marks a significant advancement in Python decompilation capabilities. This is particularly crucial, given the exponential growth of Python-based malware in recent years (Figure 1). By leveraging LLMs for bytecode conversion, we essentially eliminate the issue of completely failing to convert functions. While the output may occasionally lack precision — a limitation that can be an issue in some applications — it is far less critical in Python-based malware analysis. There, our primary goal is understanding functionality rather than producing runnable code.

Why use local LLMs and not a commercial LLM provider?

Generative AI is full of exciting possibilities, but we must proceed with caution and ensure it’s used responsibly. In our previous blogs we highlighted significant risks associated with large language models (LLMs), including remote code execution vulnerabilities and the potential for LLMs to generate misleading or false information (“LLM Lies”). As security researchers, we must proactively identify and mitigate these dangers when we integrate this new technology.

While most commercial LLM providers have privacy policies outlining their data handling practices, it’s crucial to remember that in some cases if you’re not paying for a service or paying only $20 a month, you are likely the product. This brings inherent risks:

- Training on user data: This is a major concern. Sharing unique or private data with an LLM could lead to it being incorporated into the model’s training data. This could inadvertently expose your information to other users or even become public knowledge.

- Data storage for testing and development: While seemingly legitimate, storing user data for testing and development purposes poses risks. Supply chain attacks and misconfigurations can expose this data to unauthorized access.

- Data retention: Most services do not specify how long they retain user data. A potentially indefinite retention period increases the risk of data leaks: the longer data is stored, the greater the chance of it being compromised.

- Privacy policies can change: Some AI providers add disclaimers that they may change their privacy policies in the future without prior notice, so it is a good idea to check periodically for changes.

How does ByteCodeLLM work?

ByteCodeLLM addresses growing data privacy concerns by locally processing the sensitive information. This eliminates the need to share code with third-party AI providers (more on that later), empowering malware researchers to leverage the power of LLMs while retaining complete control over their data.

This approach addresses another key limitation: the inability to generate malicious source code accurately. Third-party AI providers prioritize security and alignment, implementing restrictions to prevent misuse, such as generating code for malicious purposes. While this is crucial for safety, it can hinder malware research efforts by occasionally blocking the creation of malicious code necessary for analysis. In contrast, using a locally hosted LLM often comes without these filters, offering greater flexibility for research purposes.

It’s important to note that as malware researchers, our primary focus is not achieving perfect decompilation. During the development of the tool, we prioritized functionality over accuracy, adding features directly supporting our research needs, such as ensuring the tool runs efficiently on CPUs and cross-platform support.

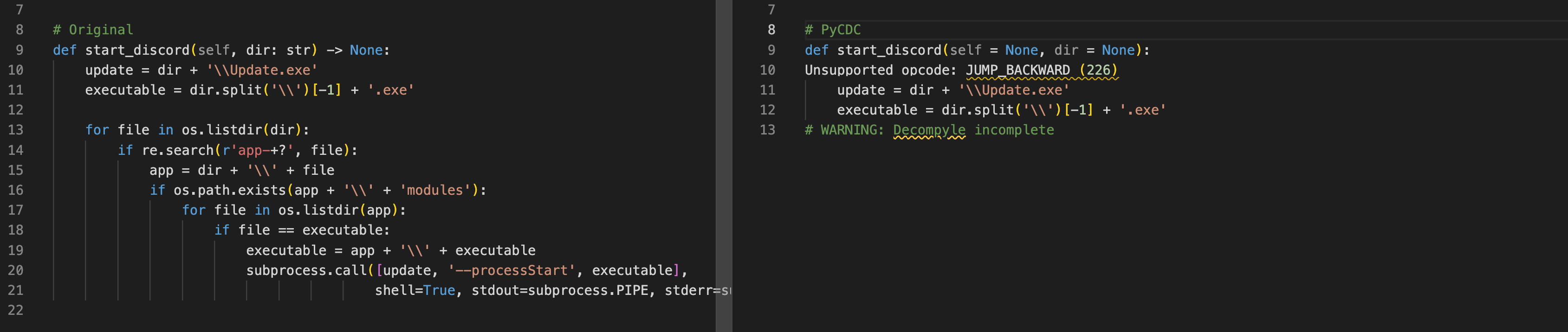

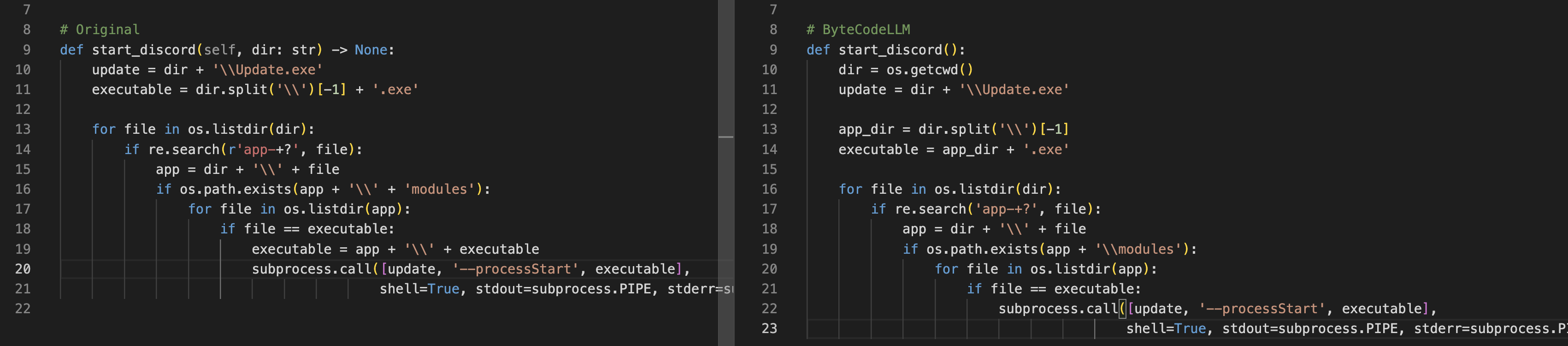

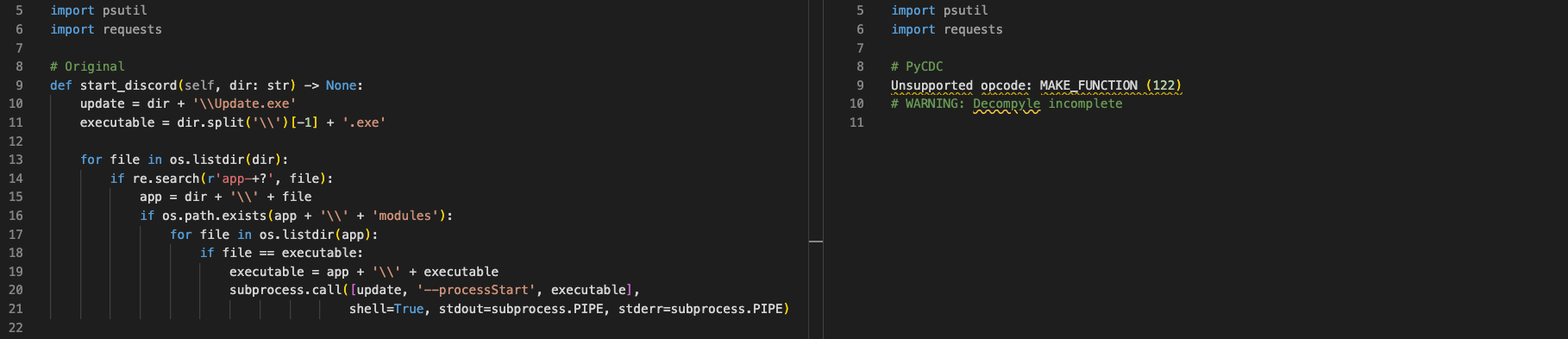

Let’s take, for example, the “start discord” function from the known open-source malware “Empyrean”. This function is called after injecting a malicious payload into Discord’s desktop app source code. Running Update.exe restarts the application covertly to ensure that the payload written on the hard drive is loaded into the application without waiting for a PC restart.

Figure 2: Original Source Code Compared to PyCDC Decompilation

Figure 3: Original Source Code Compared to ByteCodeLLM Decompilation

Figures 2 and 3 show a side-by-side comparison of the original malicious code to the output from pycdc (currently the most popular Python bytecode conversion tool) and ByteCodeLLM. Although ByteCodeLLM is not flawless, it generates comprehensible code without crashing — a notable improvement over existing solutions.

Other researchers have explored similar ideas, but we didn’t find any comparable open-source tool that is currently available.

The next section will describe how ByteCodeLLM works and utilizes LLMs to decompile Python bytecode to source code.

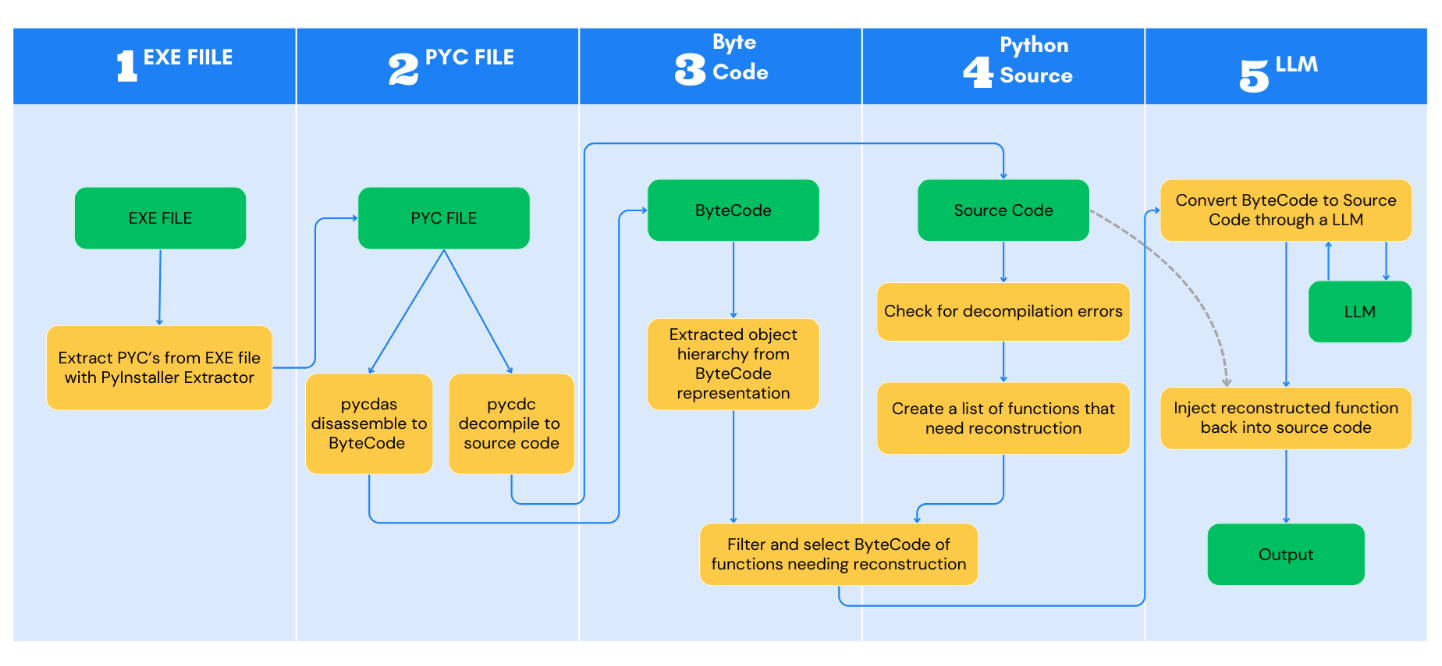

Figure 4: ByteCodeLLM Flow Graph

ByteCodeLLM works in the following stages:

1. EXE File:

- Extract PYCs from EXE: Tools like PyInstaller are used to package Python scripts into executable files. We can use pyinstxtractor-ng to reverse this process and retrieve the compiled Python files (PYC files) that contain all the logic. This step has not been implemented yet.

2. PYC File:

- pycdas disassemble to ByteCode: PYC files contain bytecode, a lower-level representation of the Python source code. The pycdas tool disassembles the PYC files into a human-readable bytecode format.

- Extracted object hierarchy from ByteCode representation: This step involves analyzing the pycdas output to map the program’s structure and relationships between different code objects (functions, classes, etc.).

3. ByteCode:

- Extracted object hierarchy: By parsing the pycdas output, we can generate a hierarchy tree for all the code objects and classes inside the PYC file. This is used to split code into functions for bypassing context length limitations in LLMs and for optimization, which we cover in the following steps.

4. Python source:

- Check for decompilation errors: Scanning through the pycdc output, we find all the functions and classes that have not been reconstructed correctly.

- Select ByteCode of functions needing reconstruction: This step identifies the bytecode that corresponds to the functions flagged in the previous step and prioritizes it for LLM-assisted reconstruction.

5. Large Language Model:

- Convert ByteCode to source code through an LLM: The byte code we’ve identified in the previous stage, along with the initial decompiled output, is fed into an LLM. The LLM uses its knowledge of Python syntax and common coding patterns to generate more accurate and complete source code.

- Inject reconstructed function back into source code: The LLM-generated code for the failed-to-convert functions is integrated back into the overall decompiled source code.

- Output: Decompiled Python code.
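To make the idea of a code object hierarchy more concrete, here is a minimal sketch that uses only Python's standard library instead of pycdas output. It compiles a small sample and walks the nested code objects stored in `co_consts`, which is where functions and class bodies live inside a compiled module. The `SAMPLE` source and the `code_object_tree` helper are illustrative assumptions and are not taken from ByteCodeLLM itself.

```python
import types

def code_object_tree(code, depth=0):
    """Return (depth, name) pairs for a code object and everything nested in it."""
    rows = [(depth, code.co_name)]
    for const in code.co_consts:
        # Functions, methods and class bodies are stored as nested code objects.
        if isinstance(const, types.CodeType):
            rows.extend(code_object_tree(const, depth + 1))
    return rows

# Hypothetical sample, only here to give the walker something to traverse.
SAMPLE = '''
class Loader:
    def fetch(self, url):
        return url

def main():
    def helper():
        return 1
    return helper()
'''

module_code = compile(SAMPLE, "<sample>", "exec")
tree = code_object_tree(module_code)
for depth, name in tree:
    print("  " * depth + name)
```

Each entry in the resulting tree is a unit that could be disassembled and handled on its own, which is what makes per-function processing possible.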

ByteCodeLLM represents a step forward in decompilation technology by harnessing the power of LLMs to overcome the limitations of traditional tools. This allows researchers to analyze and understand code more effectively, particularly in the context of malware analysis.

Breaking the code down into functions and then passing only the failed-to-convert functions through an LLM is an optimization made for two primary reasons. First, it cuts down the time needed. Second, most LLMs don’t have the context length to read through an entire file’s worth of bytecode, and splitting a file into functions circumvents this issue.
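The selection step can be sketched roughly as follows. This is a simplified illustration, not the tool's actual code: it assumes that pycdc marks a partially decompiled block with a `# WARNING: Decompyle incomplete` comment, and the `failed_functions` and `select_bytecode` helpers, the sample output, and the placeholder disassembly strings are all hypothetical.

```python
import re

# Assumption: pycdc flags a partially decompiled block with this comment.
INCOMPLETE_MARKER = "# WARNING: Decompyle incomplete"
DEF_PATTERN = re.compile(r"(?:async\s+)?def\s+(\w+)")

def failed_functions(decompiled_source):
    """Return the names of functions whose decompiled body carries the marker."""
    failed = []
    open_defs = []  # stack of (indent, name) for the defs we are currently inside
    for line in decompiled_source.splitlines():
        stripped = line.lstrip()
        if not stripped:
            continue
        if INCOMPLETE_MARKER in stripped:
            # Attribute the warning to the innermost function that is still open.
            if open_defs and open_defs[-1][1] not in failed:
                failed.append(open_defs[-1][1])
            continue
        indent = len(line) - len(stripped)
        # A line at the same or a shallower indent closes the enclosing defs.
        while open_defs and indent <= open_defs[-1][0]:
            open_defs.pop()
        match = DEF_PATTERN.match(stripped)
        if match:
            open_defs.append((indent, match.group(1)))
    return failed

def select_bytecode(bytecode_by_function, failed):
    """Keep only the disassembly of the functions that need the LLM."""
    return {name: bytecode_by_function[name]
            for name in failed if name in bytecode_by_function}

# Hypothetical decompiler output and per-function disassembly placeholders.
SAMPLE_OUTPUT = """\
def ok(a):
    return a + 1

def broken(path):
    data = open(path)
# WARNING: Decompyle incomplete

def also_ok():
    pass
"""
BYTECODE = {
    "ok": "<disassembly of ok>",
    "broken": "<disassembly of broken>",
    "also_ok": "<disassembly of also_ok>",
}

todo = select_bytecode(BYTECODE, failed_functions(SAMPLE_OUTPUT))
print(sorted(todo))
```

Only the entries left in `todo` would be sent to the model, which keeps each prompt small and skips functions the traditional decompiler already handled.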

How did we choose the LLM to work with?

While Ollama and Hugging Face offer a wide range of local and open LLMs to choose from, we didn’t find any model that claims the ability to decompile Python bytecode to source. As a result, we needed to create one or find one that would work for us.

We selected several leading candidates from Hugging Face’s leaderboard that claim Python code generation capability. We then developed a dedicated test to check their decompilation accuracy by measuring the similarity between the original Python snippet and the generated one.

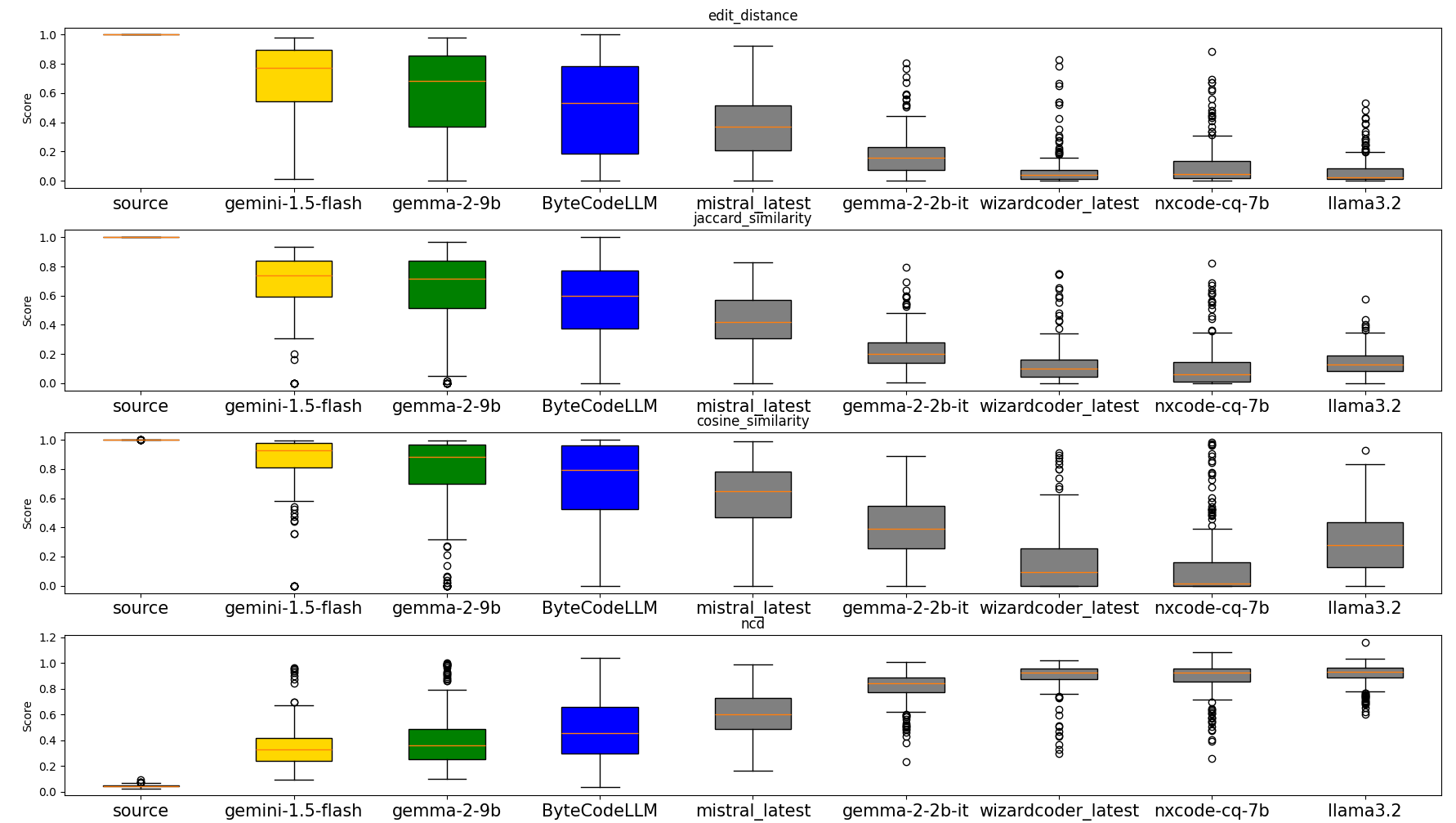

Figure 5 shows the results of four similarity tests for some of the LLMs we tested. The similarity is a score of how well the LLM reconstructed the bytecode compared to the corresponding source code from our database. Take note of the results from Gemini 1.5-flash (gold) versus ByteCodeLLM (blue), our own fine-tuned model based on the much smaller Llama 3.2 3B.

Figure 5: Similarity Tests Between Different LLM Decompilation Results and the Original Source Code

We have decided on four different code similarity metrics to measure the accuracy of the models:

- Edit Distance: Counts changes to match code.

- Jaccard Similarity: Measures overlap of code tokens.

- Cosine Similarity: Calculates the angle between code embeddings.

- NCD (Normalized Compression Distance): Estimates similarity via compression.

Note: The NCD metric is inverted—lower scores indicate higher similarity.
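For readers who want to experiment with these metrics, the following is a minimal standard-library sketch, not our actual test harness. It makes several simplifying assumptions: the tokenizer is a plain regular expression, cosine similarity is computed over token counts as a stand-in for code embeddings, and NCD uses `zlib` as the compressor. The snippets being compared are made up for illustration.

```python
import math
import re
import zlib
from collections import Counter

def tokens(code):
    """Very rough tokenizer: identifiers/numbers and single punctuation marks."""
    return re.findall(r"\w+|[^\w\s]", code)

def edit_distance(a, b):
    """Levenshtein distance: insertions, deletions and substitutions."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, 1):
        cur = [i]
        for j, cb in enumerate(b, 1):
            cur.append(min(prev[j] + 1, cur[j - 1] + 1, prev[j - 1] + (ca != cb)))
        prev = cur
    return prev[-1]

def jaccard(a, b):
    """Overlap of the two token sets (1.0 means identical sets)."""
    sa, sb = set(tokens(a)), set(tokens(b))
    return len(sa & sb) / len(sa | sb) if sa | sb else 1.0

def cosine(a, b):
    """Cosine of the angle between token-count vectors (embedding stand-in)."""
    ca, cb = Counter(tokens(a)), Counter(tokens(b))
    dot = sum(ca[t] * cb[t] for t in ca)
    norm = (math.sqrt(sum(v * v for v in ca.values()))
            * math.sqrt(sum(v * v for v in cb.values())))
    return dot / norm if norm else 0.0

def ncd(a, b):
    """Normalized Compression Distance: lower means more similar."""
    def size(text):
        return len(zlib.compress(text.encode("utf-8")))
    ca, cb, cab = size(a), size(b), size(a + b)
    return (cab - min(ca, cb)) / max(ca, cb)

# Hypothetical snippets: an original, a plausible reconstruction, unrelated code.
original = "def add(a, b):\n    total = a + b\n    return total\n"
rebuilt = "def add(x, y):\n    result = x + y\n    return result\n"
unrelated = "for line in open('log.txt'):\n    print(line.strip().upper())\n"

for label, other in (("rebuilt", rebuilt), ("unrelated", unrelated)):
    print(label, edit_distance(original, other), round(jaccard(original, other), 2),
          round(cosine(original, other), 2), round(ncd(original, other), 2))
```

On short snippets the compression-based score is noisy because of fixed compressor overhead, so it is more informative on function-sized inputs.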

Our initial evaluation showed Gemini-1.5-flash to be the top performer. However, due to its commercial nature, it fell outside our selection criteria. Gemma 2, with 9 billion parameters, demonstrated superior performance compared to all other models we tested, including our own ByteCodeLLM.

Based on these findings, we’ve discontinued the development of the internal model for this use case and currently suggest Gemma 2 as the default model. We encourage the community to share any promising new models for this application.
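As an illustration of what querying a locally hosted model can look like, here is a minimal sketch that talks to Ollama's local HTTP API using only the standard library. It assumes an Ollama server is listening on its default port and that a Gemma 2 model has been pulled under the tag `gemma2:9b`. The prompt wording and the `build_request` and `decompile_with_llm` helpers are hypothetical and are not the prompts or functions ByteCodeLLM uses.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"

def build_request(bytecode, model="gemma2:9b"):
    """Build the HTTP request for one function's disassembly (nothing is sent yet)."""
    # Hypothetical prompt; a real tool would likely tune this carefully.
    prompt = (
        "Convert the following Python bytecode disassembly back into "
        "Python source code. Reply with code only.\n\n" + bytecode
    )
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )

def decompile_with_llm(bytecode, model="gemma2:9b"):
    """Send the request to the local server and return the generated text."""
    with urllib.request.urlopen(build_request(bytecode, model)) as reply:
        return json.loads(reply.read())["response"]
```

Because the request never leaves the machine, the sample under analysis is not shared with any third party. Swapping in another local model is a matter of changing the `model` argument.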

Improvement over current tools

To evaluate the effectiveness of our enhancements over current tools, we developed a comprehensive sample set that contains a wide range of Python language features, including specific malicious code patterns that malware researchers frequently encounter.

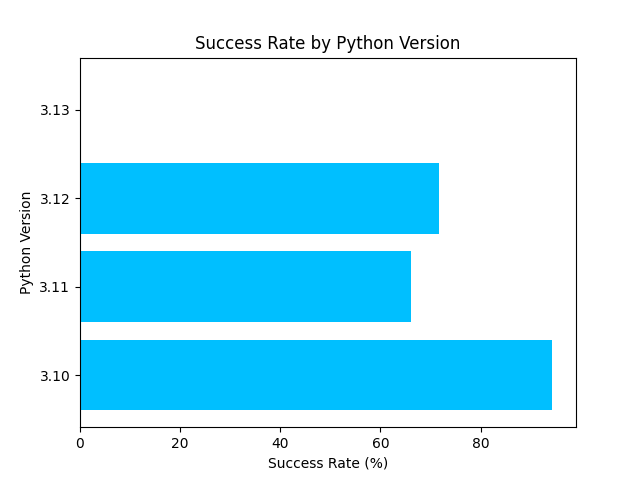

These code snippets were compiled across all major new Python versions, starting from 3.10 up to the latest 3.13 release. We then compared the output from both pycdc and our project to assess performance and accuracy. Figure 6 provides a visual representation of the pycdc success rate per Python version, which is a measure of the percentage of functions without decompilation errors.

Figure 6: Pycdc Success Rate per Python Version Over a 200+ Sample Set

Figure 6 reveals several key insights. First, we observe a declining success rate as the Python versions progress, ending in a complete inability to decompile Python 3.13 bytecode. This failure stems from Python’s changes in function definition (as illustrated in Figure 7), which fundamentally breaks pycdc.

Second, our dataset of over 200 code snippets is noticeably skewed toward shorter functions. A manual review of pycdc’s performance indicates a clear trend: the longer the function, the higher the likelihood of decompilation failure. Longer functions usually contain more complex logic, which is translated to bytecodes that are still being optimized and tinkered with and therefore often change from version to version as the Python language evolves.

Figure 7: Results of Python 3.13 PYCDC Decompilation

ByteCodeLLM, on the other hand, was able to reconstruct every function and class in the sample set, with one small caveat. For Python 3.13, pycdc fails to output even the partial decompilation that allows us to optimize runtime, so the project falls back to an unoptimized conversion algorithm that makes the process much longer. Nonetheless, it still saves us time because we don’t need to analyze the bytecode manually.

Summary:

Keeping pace with the rapid evolution of Python — especially in the realm of malware analysis — demands innovative solutions. We believe ByteCodeLLM can be a crucial tool for security researchers, effectively tackling the challenge of decompiling Python executables. By harnessing the power of local LLMs, ByteCodeLLM offers improved accuracy and ensures data privacy. Notably, it seems to be the only locally hosted tool currently capable of decompiling Python 3.13 and provides a significant advantage in analyzing and understanding the latest malware threats.

Privacy recommendations:

- Use Local LLMs: Consider using local LLMs to process sensitive data and maintain privacy.

- Minimize sensitive data sharing: Avoid inputting highly sensitive or confidential information (PII) into LLMs.

- Anonymize data: When possible, anonymize or de-identify data before sharing it with an LLM.

- Understand provider policies: Carefully review the privacy policies of LLM providers to understand how your data may be used.

- Stay informed: Keep abreast of the latest research and security best practices regarding LLMs.

Amir Landau is a malware research team leader and David El is a malware researcher at CyberArk Labs.