As AI systems become part of our day-to-day operations, a central reality becomes unavoidable: AI doesn’t configure itself. Engineers and developers set it up, with human approval and oversight, and they need privileges to access and implement the components, agents, tools, and features of the platforms.

But developers don’t just have these privileges unconstrained… right?

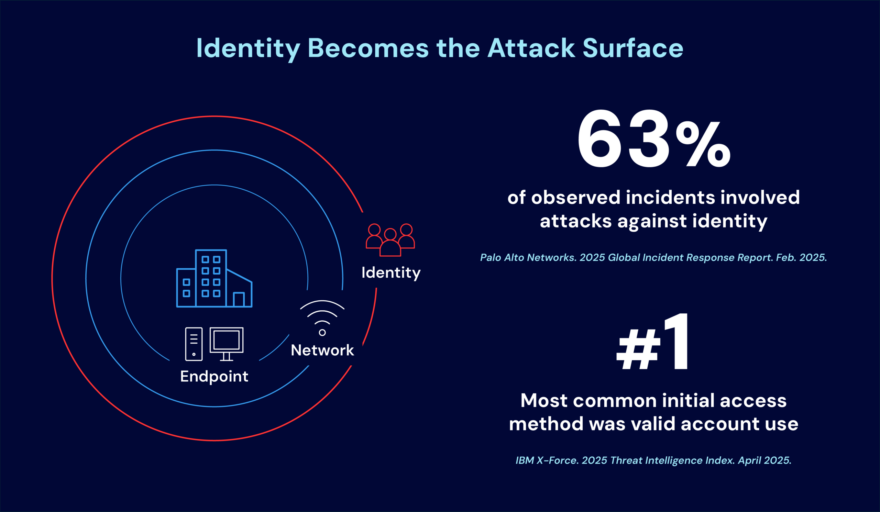

Where trust and privileges exist, someone will try to abuse them. The real risk in AI environments is not only the model, but also the identities and privileges used to configure and operate it.

In this blog, we’ll anchor the discussion to a single example platform: Amazon Bedrock on AWS, with Anthropic’s Claude as the underlying large language model (LLM). Other platforms are not being ignored; similar identity, access, and control flows apply across Azure and GCP. Azure OpenAI Service, Azure AI Foundry model endpoints, and Google Cloud Vertex AI all require comparable role-scoping, secret-handling, and audit controls. We’ll use AWS here purely as a concrete reference point.

AI implementations are susceptible to rogue patterns, excessive exposure, and data sovereignty issues. As organizations build and deploy AI agents, we need to establish secure-by-design principles before the build and rollout, not after systems are already in use. We also need to audit and monitor interactions to verify that workflows act as expected, and to enable a response if they don’t.

This research is based on publicly available information. It was conducted for defensive and educational purposes. CyberArk shares this work to support responsible security practices and improve the community’s understanding of real-world threats.

Returning to the core dynamics of AI risk, the next layer focuses on how access design and privilege shape system behavior.

Where AI design meets identity and privilege risk

Keeping pace with AI’s evolution is essential, but understanding how access is granted and used is what ultimately determines risk.

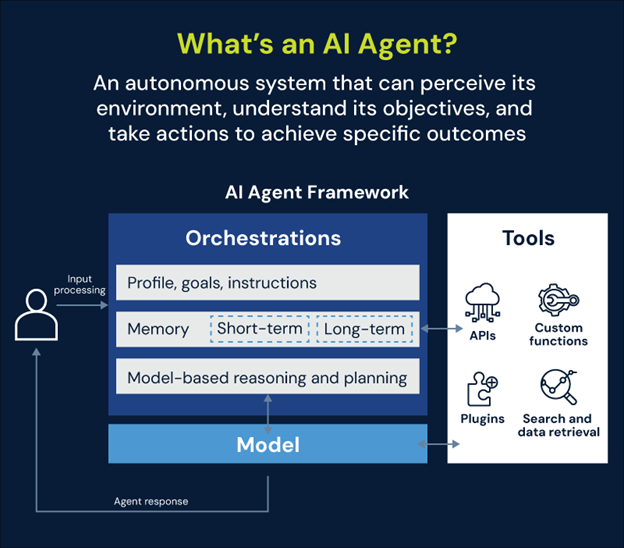

Our privately owned agents are configured and implemented by teams such as product development, cloud infrastructure, and LLMOps. To understand why identity and privilege sit at the center of AI risk, it helps to surface a couple of foundational questions:

1. What if a developer’s workstation or development server were attacked?

2. What is the broader impact of a system compromise?

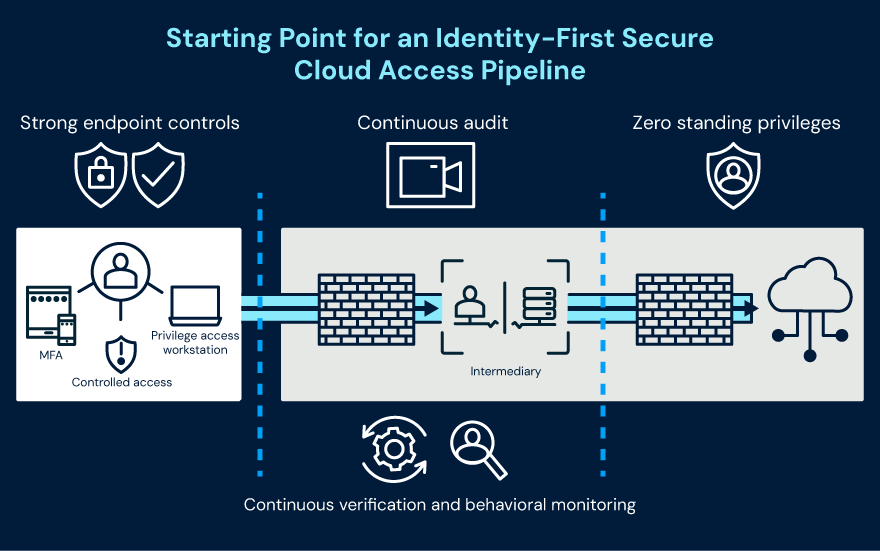

The developer endpoint is part of an overall collection of systems and attributes that define session trust. This is an underlying principle of the privileged access pipeline. The accompanying illustration visualizes access from source to junction to terminus and can be populated with the controls essential to defend workflows. It provides a single thread and template for specific scenarios and use cases, and helps identify clear ways to isolate systems during an active attack.

When an attacker gains access to an endpoint, there is a high risk of a familiar attack chain: lateral movement, data exfiltration, loss of trust, and an absolute headache for developers, their managers, and the CISO. In some cases, this has led to CEOs testifying before Congress, as in the SolarWinds case.

How familiar attack patterns evolve in AI

It isn’t a surprise that AI is also used by criminals to enhance their capabilities, including AI-driven attack frameworks and stealthy, evolving malware built with agent prompts to evade detection or analysis. Humans might spot these inputs, but automated systems may process and act on them at scale, sometimes acting with excessive agency or missing the attack entirely.

Most attacks described in the OWASP Top 10 for LLM Applications aren’t hypothetical, and they aren’t new. They follow patterns security teams have seen and understood for years, now expressed through an AI lens:

- Gain access to protected systems

- Modify configurations or workflows

- Perform actions the organization never intended

When AI is involved, the names may change, but the pattern feels the same. Somewhere along the way, attackers will abuse privileges, whether they belong to a user, an account, or an agent.

One example is Unbounded Consumption—an OWASP Top 10 LLM risk—where an attacker gains access to an LLM and uses it to consume compute resources at scale. Beyond runaway costs, this can degrade service availability for legitimate users and, over time, enable model theft or scraping as attackers repeatedly probe the system to extract behavior, outputs, or proprietary logic.
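One way to limit this kind of abuse is to cap consumption per identity rather than per platform. The sketch below is a minimal illustration, assuming a gateway or wrapper sits in front of the model and knows which identity is calling; the `TokenBudget` and `BudgetRegistry` names, the limits, and the identity strings are all hypothetical and not part of any AWS or OWASP API.

```python
import time
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class TokenBudget:
    """Sliding-window cap on model tokens consumed by one identity."""
    max_tokens: int        # hypothetical limit per window
    window_seconds: float  # hypothetical window length
    _events: list = field(default_factory=list)  # (timestamp, tokens) pairs

    def try_consume(self, tokens: int, now: Optional[float] = None) -> bool:
        """Return True and record the usage if it fits in the budget."""
        now = time.monotonic() if now is None else now
        cutoff = now - self.window_seconds
        # Drop usage that has aged out of the window.
        self._events = [(t, n) for (t, n) in self._events if t > cutoff]
        used = sum(n for _, n in self._events)
        if used + tokens > self.max_tokens:
            return False  # caller should reject or queue the request
        self._events.append((now, tokens))
        return True


class BudgetRegistry:
    """Keeps one budget per calling identity (user, role, or agent)."""

    def __init__(self, max_tokens: int, window_seconds: float):
        self._max = max_tokens
        self._window = window_seconds
        self._budgets = {}

    def allow(self, identity: str, tokens: int,
              now: Optional[float] = None) -> bool:
        budget = self._budgets.setdefault(
            identity, TokenBudget(self._max, self._window)
        )
        return budget.try_consume(tokens, now)
```

Because each identity has its own budget, a stolen developer role that starts driving the model at scale exhausts only its own allowance, and the rejection itself becomes a signal worth alerting on.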

A closer look at how LLM access becomes misuse

An attacker gains access to a developer workstation and retrieves cloud configuration files and session tokens. Using the associated role, they invoke Bedrock APIs directly. The agent has access to data stored in services such as Amazon S3 and backend databases, and those permissions are now abused.
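The first step of this chain depends on what is sitting in the workstation’s cloud configuration files. As a rough illustration, the sketch below scans the text of an AWS shared credentials file for profiles that hold long-term keys. It relies on the documented AWS convention that long-term access key IDs begin with `AKIA` while temporary STS key IDs begin with `ASIA`; the function name and the sample profiles are hypothetical, and the key IDs follow the placeholder style used in AWS documentation.

```python
import configparser


def find_long_lived_keys(credentials_text: str) -> list:
    """Return profile names that appear to hold long-term static keys."""
    # Disable interpolation so '%' characters in values are read literally.
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(credentials_text)
    flagged = []
    for profile in parser.sections():
        key_id = parser.get(profile, "aws_access_key_id", fallback="")
        has_session_token = parser.has_option(profile, "aws_session_token")
        # AKIA = long-term IAM user key; ASIA = temporary STS credential.
        if key_id.startswith("AKIA") and not has_session_token:
            flagged.append(profile)
    return flagged


# Hypothetical file contents for illustration only.
SAMPLE_CREDENTIALS = """
[default]
aws_access_key_id = AKIAIOSFODNN7EXAMPLE
aws_secret_access_key = placeholder-secret

[short-lived]
aws_access_key_id = ASIAIOSFODNN7EXAMPLE
aws_secret_access_key = placeholder-secret
aws_session_token = placeholder-token
"""

if __name__ == "__main__":
    print(find_long_lived_keys(SAMPLE_CREDENTIALS))
```

Temporary credentials can still be stolen and replayed until they expire, so a clean result here narrows the window of abuse rather than closing it.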

The attacker adds malicious files to poison retrieval paths and resells access to another group that runs the model at scale. Cloud spend spikes into a Denial of Wallet, distracting teams and enabling data exfiltration amid the noise of legitimate-looking usage.
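A spike like this is easier to catch when usage is tracked per identity against its own recent baseline. The following is a minimal sketch, assuming hourly model-invocation counts for a single role or agent have already been collected from audit logs; the function name, the 24-hour baseline, and the thresholds are illustrative assumptions, not recommended values.

```python
from statistics import mean, pstdev


def flag_usage_spikes(hourly_counts, baseline_hours=24, threshold=3.0):
    """Return indices of hours whose count is far above the trailing baseline.

    An hour is flagged when its count exceeds
    baseline mean + threshold * spread, where spread is the larger of the
    baseline standard deviation and 10% of the baseline mean.
    """
    flagged = []
    for i in range(baseline_hours, len(hourly_counts)):
        window = hourly_counts[i - baseline_hours:i]
        mu = mean(window)
        sigma = pstdev(window)
        # Floor the spread so a perfectly flat baseline does not flag
        # small, normal fluctuations.
        spread = max(sigma, 0.1 * mu, 1.0)
        if hourly_counts[i] > mu + threshold * spread:
            flagged.append(i)
    return flagged
```

Note that once a spike enters the trailing window it inflates the baseline for later hours, so a production detector would likely exclude flagged hours or use a more robust statistic.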

This is not a model failure. It is an identity and privilege failure.

Defense-in-depth still applies. Stolen cloud configuration files and session tokens remain one of the most common entry points into cloud environments. Our LLMs are now another target for attackers, with new ways to amplify impact.

This scenario reflects multiple OWASP Top 10 LLM risks, including excessive agency, data poisoning, vector and embedding weaknesses, and unbounded consumption, all driven by weak identity controls and over-privileged access.

Given how identity and privilege failures drive these AI‑enabled attack paths, organizations can materially reduce exposure by anchoring on a core set of proven controls:

- Treat agents as identities, not features

- Enforce least privilege with just-in-time (JIT) access to cloud roles and services

- Remove standing access across cloud and infrastructure environments

- Protect endpoints used for privileged and administrative activity, including machine identities used by Model Context Protocol (MCP) servers

- Monitor usage continuously across identities, sessions, and workloads, and act on alerts
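To make the least-privilege control above concrete, the sketch below contrasts a narrowly scoped IAM policy for invoking a single Bedrock model with an over-broad one, and includes a small check that flags wildcard grants. The policy documents are illustrative: `bedrock:InvokeModel` is a real IAM action, but the region, the `EXAMPLE-MODEL-ID` placeholder, the `Sid` values, and the `find_overbroad_statements` helper are assumptions made for this example.

```python
# Scoped: one action, one model resource (model ID is a placeholder).
SCOPED_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "InvokeSingleModel",
            "Effect": "Allow",
            "Action": "bedrock:InvokeModel",
            "Resource": "arn:aws:bedrock:us-east-1::foundation-model/EXAMPLE-MODEL-ID",
        }
    ],
}

# Over-broad: every Bedrock action on every resource.
BROAD_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "AllBedrock",
            "Effect": "Allow",
            "Action": "bedrock:*",
            "Resource": "*",
        }
    ],
}


def find_overbroad_statements(policy: dict) -> list:
    """Return human-readable findings for Allow statements with wildcards."""
    findings = []
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # IAM allows a single statement object
        statements = [statements]
    for index, stmt in enumerate(statements):
        if stmt.get("Effect") != "Allow":
            continue
        actions = stmt.get("Action", [])
        resources = stmt.get("Resource", [])
        actions = [actions] if isinstance(actions, str) else actions
        resources = [resources] if isinstance(resources, str) else resources
        label = stmt.get("Sid", f"statement[{index}]")
        for action in actions:
            if action == "*" or action.endswith(":*"):
                findings.append(f"{label}: wildcard action {action}")
        if "*" in resources:
            findings.append(f"{label}: wildcard resource")
    return findings
```

A check along these lines could run in a review pipeline before a role is attached to an agent. It only catches the most obvious wildcards, so it complements rather than replaces a full policy analysis.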

These controls are not only security measures; they directly protect service stability and financial operations by keeping agents aligned with governance, risk, and compliance (GRC).

Technical controls are essential, but they’re only part of the equation. Organizations also need operational readiness to recognize privilege misuse early and respond before small gaps become major incidents.

Strengthening defenses before AI and LLM risks escalate

Attacks have evolved and continue to evolve. Proactive identity management and rapid response to misuse act as force multipliers for operations. Both reflect how identity, privilege, and AI operations intersect in real environments. Identifying gaps before attackers do is essential.

These themes echo what we continue to see across cloud and hybrid environments more broadly.

Findings like these reinforce the broader lessons captured in CyberArk’s “Beat the Breach” research, where real‑world incidents consistently show how a single over‑privileged or compromised identity can escalate to system‑wide impact. Insights from practitioners and incident responders—including the CyberArk SHIELD Team, our incident response and research group—reveal the same pattern in AI environments: when access design, privilege boundaries, and operational readiness align, organizations are far better positioned to detect misuse early and contain it before it becomes a major incident.

For a deeper look at these trends and the identity‑first strategies that strengthen resilience across cloud and hybrid environments, check out our “Beat the Breach: Crack the Code on Effective Cloud Security” white paper.

Aaron Fletcher is a lead incident response architect and consultant.