Welcome to the 2025 Identity Security Landscape rollout—and to the “it’s complicated” phase of our relationship with AI. Each year, CyberArk surveys security leaders across the globe to understand their top identity security concerns. This year, AI delivered the trifecta: attack weapon, defense tool and risk multiplier.

Attackers are using AI to deploy phishing emails and AI-driven deepfakes that can dupe even the savviest users. On the other hand, organizations embracing AI and large language models (LLMs) must now strike a balance between the rewards of innovation and an increasingly complex and exposed attack surface. AI’s dependence on extensive data not only heightens the risk of unauthorized access but also makes the AI models susceptible to manipulation.

But AI is only one piece of a much larger puzzle. Privilege sprawl is exploding: over the next 12 months, 59% of respondents predict that machine identities (from cloud workloads to app credentials and automated services) will be a leading driver behind identity growth—outpacing even AI and LLMs. At the same time, overburdened security teams report shrinking visibility across their cloud environments.

The geopolitical response to this emerging identity threat landscape is best described as “Jekyll and Hyde.” The U.S. is signaling a more hands-off approach to AI oversight while state-sponsored cyber actors behave increasingly like organized crime syndicates. One notable example of this growing threat is the recent infiltration of the U.S. Treasury by Chinese state-sponsored attackers, which is regarded as a major cybersecurity incident. At the same time, the European Union is mandating rigorous standards for AI model documentation and monitoring, with substantial fines for non-compliance. Australia passed its first AI-specific cybersecurity law, tightening identity controls and codifying how AI systems are secured and governed.

If your thousand-yard stare isn’t locked in yet, give it time. This broad, systemic shift will require careful planning. To help you navigate these changes, let’s break down the top identity security risks you need to know.

AI: Defender, Attacker and Risky New Hire

AI-powered efficiency is a game-changer: 94% of respondents use AI to enhance their identity security strategies, and 72% of employees regularly use AI tools on the job. But, as organizations scale their AI use internally, they also scale risk. The models themselves require large volumes of data, spawn new machine identities and introduce a host of challenges that most legacy controls aren’t designed to handle.

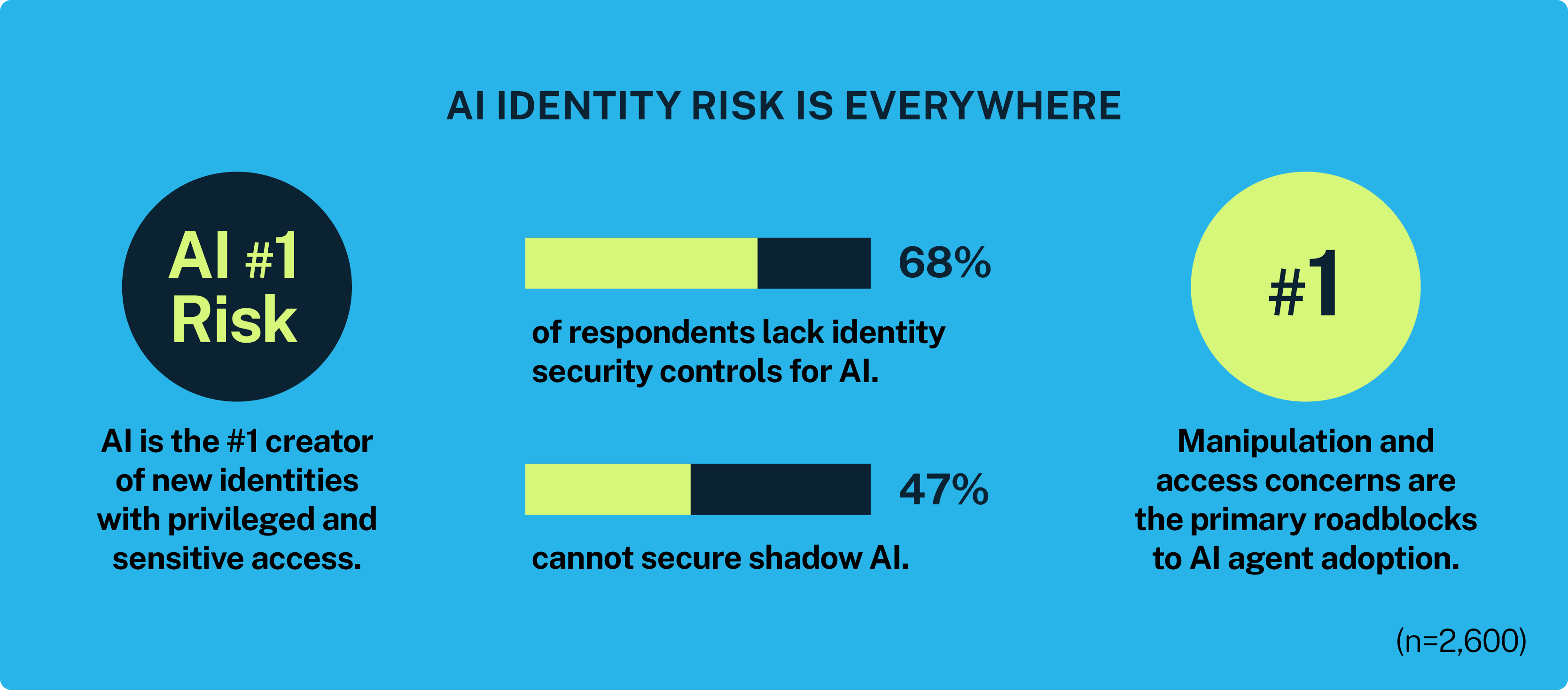

AI and LLMs are projected to be the top creators of new identities with privileged and sensitive access in 2025. Yet 68% of respondents lack the identity security controls needed to manage these technologies safely.

Then there’s the problem of “Shadow AI”: unapproved, unknown AI tools that an untold number of employees are using, potentially feeding them sensitive company data. Nearly half (47%) of respondents say they can’t fully track this growing problem.

Attackers aren’t just weaponizing AI to automate phishing, bypass controls and mimic users with growing accuracy. They’re also finding new ways to “jailbreak” AI models, manipulating them into bypassing their safeguards or secretly extracting and sending users’ personal information and payment details.

CyberArk Labs has been working relentlessly to stay ahead of these emerging attacks. In December 2024, it launched FuzzyAI, a revolutionary tool that has successfully jailbroken every AI model it’s tested. This open-source project, now available on GitHub, can help organizations and researchers systematically identify and fix AI security gaps before attackers exploit them.

The AI Agent Dilemma

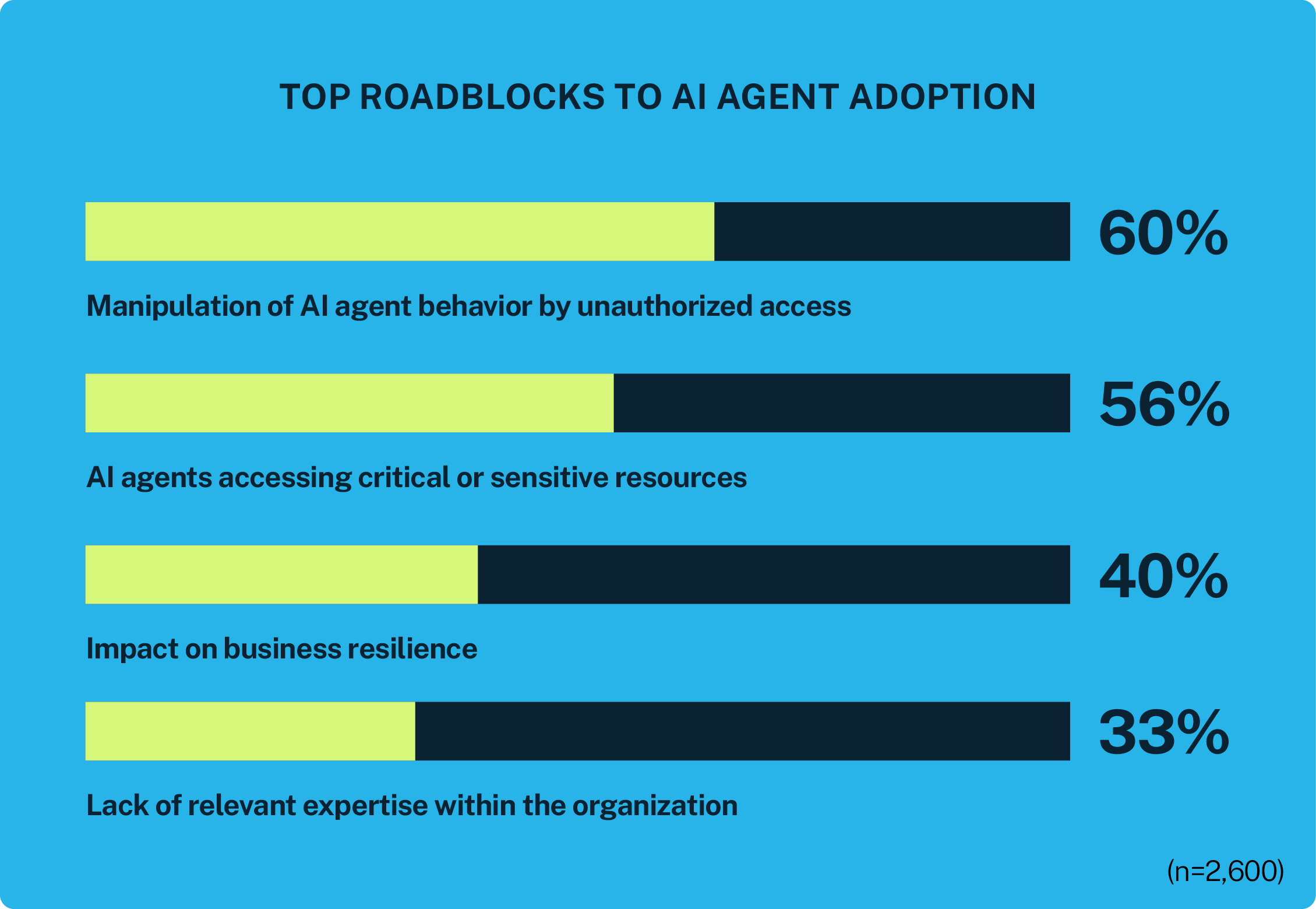

Imagine if intelligent, autonomous entities could handle 15% of your daily work decisions. That’s the level of decision-making experts expect AI agents to reach by 2028. Unlike static machine identities, AI agents can perceive, reason and act on defined goals. While this presents a powerful opportunity for organizations, our report finds that serious concerns about sensitive access and manipulation remain the biggest roadblock to AI agent adoption.

At the model layer, AI agents can be “tricked” into executing commands, leaking data or granting access without human oversight. Most identity and access management (IAM) systems aren’t built to manage the authentication, privileged access and lifecycle controls for potentially thousands (or millions) of these self-operating entities.

To prepare, organizations need strong privileged access controls, continuous governance and clear frameworks that align AI behavior with security policy to ensure “scale” doesn’t become “sprawl.”

To Secure AI Agents, We Must Secure Identity

AI is now a core part of modern business, but safe adoption depends on security teams taking a proactive, three-tiered approach:

1. Secure development: Ensure clean training data and strong coding practices.

2. Secure deployment: Protect AI systems in production with robust identity controls.

3. Secure use: Integrate AI into identity security strategies from the start, not as an add-on.

Machines that behave like humans require both human and machine identity security controls. Without these dual-layered protections in place, we risk repeating the identity chaos of early robotic process automation (RPA) implementations, where impersonation, over-privileged access and lack of governance left the door wide open to attacker exploitation.

The Unchecked Surge of Machine Identities

As AI adoption rises, so does the number of machine identities quietly running the show. These identities authenticate workloads, automate tasks and power modern ecosystems—yet they often remain invisible to traditional security tools. And now, there are a lot of them.

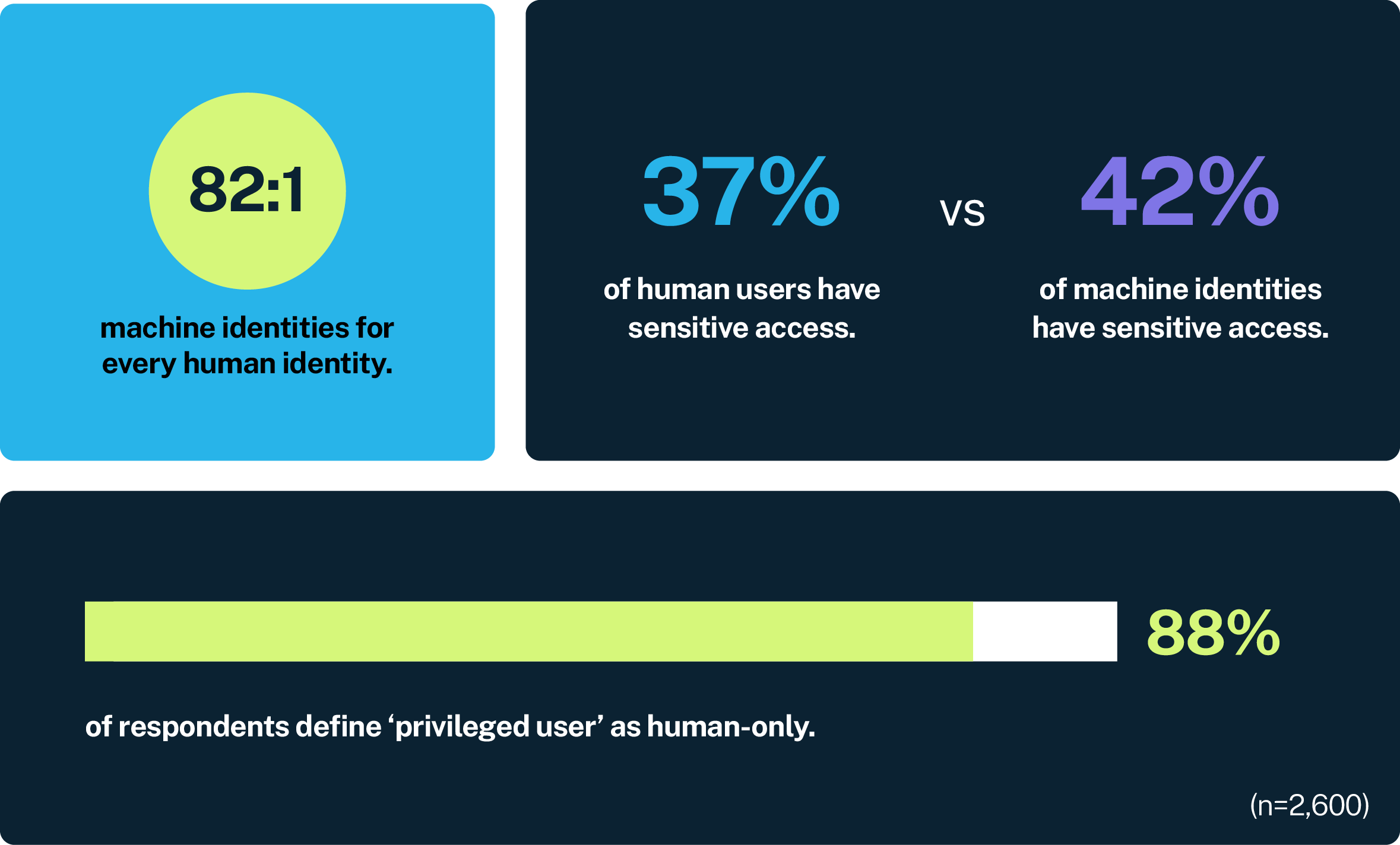

Underscoring this reality, our report finds that machine identities outnumber human identities by more than 80:1, nearly double the ratio we saw just three years ago. This ratio climbs to 96:1 in the finance sector and 100:1 in the U.K.

And they’re not just abundant; they’re privileged. Machine identities emerged as this year’s top perceived identity risk, accounting for the most unmanaged and unknown identities across the IT environment.

Yet despite the numbers, 88% of respondents still define “privileged users” as human-only. That’s a dangerous oversight, especially when 42% of machine identities have sensitive access (compared with just 37% of human identities). These untracked, unmanaged machine identities represent a growing portion of the modern attack surface.

Organizations Need a Unified Identity Security Strategy for Every Identity

Enterprises need to evolve past security models that focus solely on human users and redefine “privileged user” to include machine identities. Other critical safeguards include:

- Monitoring both user and admin sessions

- Safeguarding secrets in cloud environments

- Automating certificate lifecycles and enforcing role-based, just-in-time (JIT) access controls
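To make the just-in-time idea concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not CyberArk’s implementation or any product’s API: the `Identity`, `Grant` and `request_access` names, the role labels and the 15-minute default lifetime are all hypothetical. The point it shows is that a grant is issued per request, expires on its own, and is checked the same way for human and machine identities.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class Identity:
    name: str
    kind: str          # "human" or "machine" (illustrative labels only)
    roles: frozenset   # roles this identity is eligible to request


@dataclass(frozen=True)
class Grant:
    identity: str
    role: str
    expires_at: datetime

    def is_active(self, now: datetime) -> bool:
        # A grant is valid only before its expiry; there is no standing access.
        return now < self.expires_at


def request_access(identity: Identity, role: str, now: datetime,
                   ttl: timedelta = timedelta(minutes=15)) -> Grant:
    """Issue a short-lived grant if the role is in the identity's eligible set.

    The check ignores identity.kind on purpose: machine identities are
    treated as potentially privileged, exactly like human ones.
    """
    if role not in identity.roles:
        raise PermissionError(f"{identity.name} is not eligible for role {role!r}")
    return Grant(identity.name, role, now + ttl)


if __name__ == "__main__":
    now = datetime(2025, 1, 1, 12, 0, tzinfo=timezone.utc)
    deployer = Identity("ci-deployer", "machine", frozenset({"deploy"}))
    grant = request_access(deployer, "deploy", now)
    print(grant.is_active(now + timedelta(minutes=5)))    # True
    print(grant.is_active(now + timedelta(minutes=20)))   # False
```

A real deployment would add approval workflows, session monitoring and audit logging around the grant; the sketch only captures the expiry-by-default behavior.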

Identity Silos: Cracks in Your Security Foundation

As many organizations scaled, merged or modernized, identity controls were assembled in fragments, bolted onto whatever systems were already in place. Different teams chose different tools, often to solve the same problem in slightly incompatible ways. The result is a patchwork of overlapping technologies and inconsistent policies.

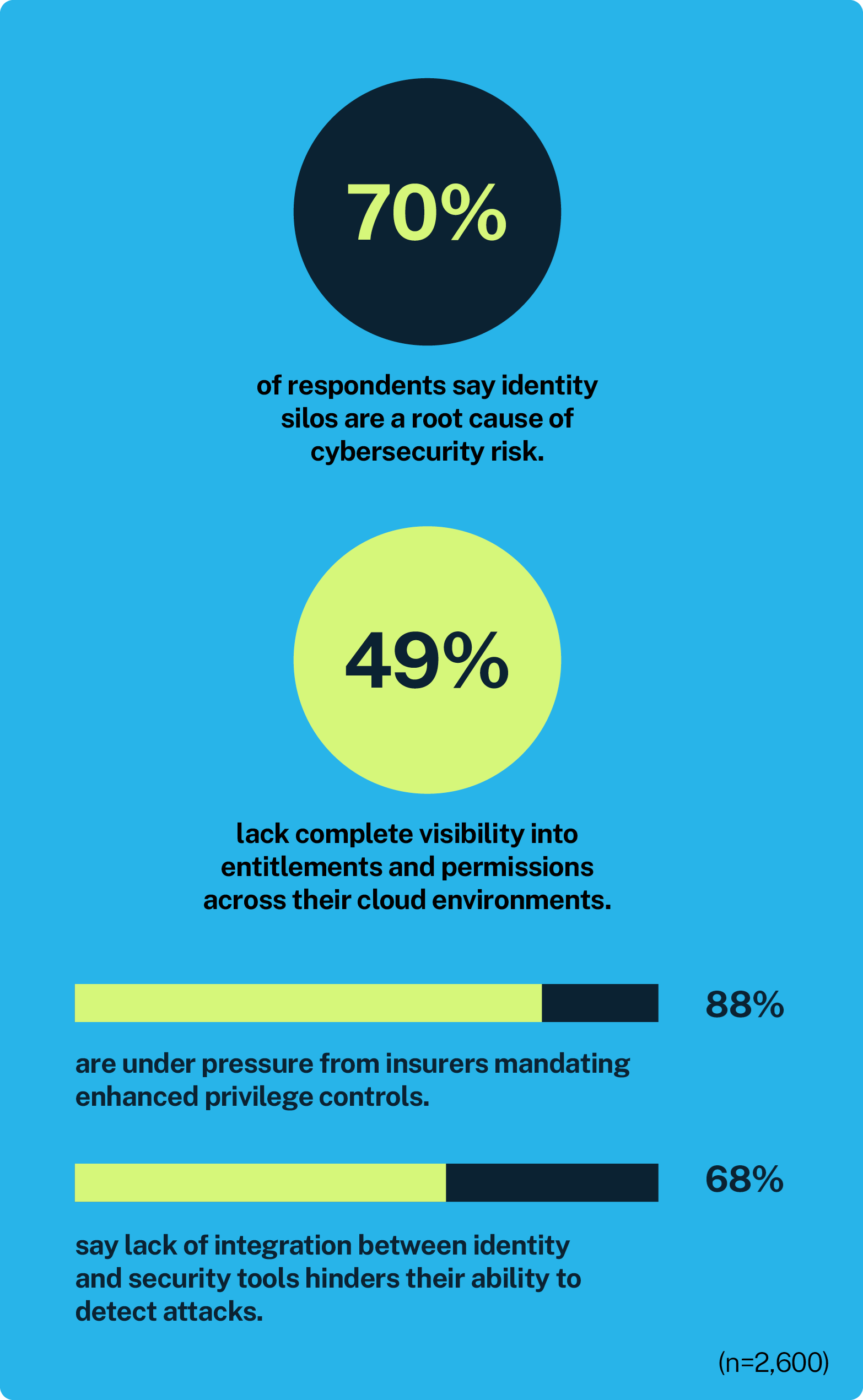

While 94% of respondents say they’ve deployed tools that automatically protect and monitor cloud sessions, nearly half (49%) still can’t confidently track who has access to what. It’s no surprise, then, that 70% of security leaders now point to identity silos as a key source of organizational risk.

Integration remains a major sticking point, with 68% of respondents saying the disconnect between identity and security systems slows threat detection. The consequences aren’t just operational. Silos complicate compliance and drive up cyber insurance premiums: 88% report increased demands around privilege controls, and 89% say insurers are now enforcing least privilege more aggressively.

The Critical Role of IGA in 2025

Our report finds that respondents rank identity governance and administration (IGA) as a top strategic identity security investment for 2025. We concur: by centralizing and automating identity management across on-prem, cloud and hybrid environments, IGA helps tackle fragmented legacy systems head-on.

Paired with privileged access management (PAM), IGA ensures consistent policies, supports least privilege access and aligns with Zero Trust principles. IGA also reduces manual effort, cuts latency and helps organizations meet regulatory demands—without burning out security teams in the process.

The Path Forward: Secure Every Identity, Everywhere

While AI continues to shift the threat landscape, strong identity security remains the most effective way to contain it.

At the core of nearly every attack is identity. Regardless of an attacker’s tactic, their endgame is the same: gain control of an identity. Organizations should adopt a build-to-protect mindset, where every identity and resource is guarded by automation and intelligent privilege controls from the moment it is created. Ultimately, securing every human and machine identity with an integrated, end-to-end strategy is the best way to cut through the complexity. Identity must shape how organizations prioritize their defenses with AI—this year, and well into the future.

Read the CyberArk 2025 Identity Security Landscape.

Clarence Hinton is chief strategy officer and head of corporate development at CyberArk.