We hear about it all the time: data breaches that expose a company’s sensitive information. Nearly all of us have been warned that our passwords, email addresses or even credit cards have “potentially” been exposed to cyber attackers. So why is this such a common occurrence? While there are several factors, in this post we’ll focus on the misconfiguration of cloud services, specifically their storage services. It’s true that files uploaded to cloud storage are private by default, but unfortunately, we discovered that many organizations are likely inadvertently opening their files to public access.

For this research, I looked at Microsoft Azure (though the concepts presented here are relevant to most other cloud providers) and found millions of files stored online without any access restrictions, meaning they would be generally available to anyone looking for them. Many of these files included sensitive information, from personally identifiable information such as IDs and email addresses to credit card numbers, invoices, financial information and more.

After walking through the research in detail, I’ll introduce BlobHunter, a new tool I wrote to help organizations mitigate this exposure.

It’s important to note that this research did not uncover a vulnerability within Microsoft Azure, but rather focuses on end-user misconfiguration issues. It’s the responsibility of each organization to configure the files’ access permissions correctly.

Introduction

Cloud storage services like Microsoft Azure Blob are networks of remote servers that are accessed over the Internet and house all the data that is kept in a cloud environment. Since the cloud service providers own the physical servers – taking the burden off their customers – they are also responsible for keeping the physical environment protected and running efficiently (i.e. allowing customers to always have their data available and accessible).

While the benefits of cloud storage are undeniable (e.g. reduced operating costs, heightened efficiency and scalability), there is one question we must ask ourselves: is it actually safe to keep our data in the cloud?

Spoiler: it absolutely can be, if the end-user organization does it the right way. Unfortunately, in our research we discovered many cases where organizations made misconfiguration mistakes that put their data at high risk.

The Risky Side of Microsoft Azure Blob Storage

Blob storage is specifically designed to store massive amounts of unstructured data, that is, data that doesn’t adhere to a particular data model or definition, like text or binary data. Here are some of the use cases where Blob storage shines:

- Storing files for distributed access

- Storing data for backup and restore, disaster recovery, and archiving

- Writing to log files

So while Blob storage is optimal to store text, binary files and images – these files can often include sensitive data.

We will soon see that when we store some data in the cloud with the Blob storage service, we must define its access level too. It is our responsibility, as users, to choose the access level, and ours only. It is important to note that by default the files we upload to the cloud are set to be private, but many organizations change it over time to match their needs.

For example, looking at a bank, you can assume that they are saving customers’ credit card data as well as personally identifiable data including names, addresses and phone numbers. This information could be kept in CSV files, or some other textual formats.

You might think that most banks would rather save sensitive files on-premises on the bank servers, but many also use cloud data storage for its numerous benefits.

This concept of mixing on-premises data servers with cloud data servers is called “Hybrid” cloud storage, and we see it across businesses and industries. But is the access to these sensitive files configured correctly?

Sensitive data that is stored in the cloud without the proper access level configuration can lead to a disaster for a company – and a big pay day for an attacker.

Just imagine what would happen if a malicious attacker was able to grab your banking information – not just your account number, but also your name and address to go with it. What damage could they do?

It is important to note that there are scenarios in which you would want your cloud-stored files to be publicly open to everyone over the internet (website images, for example), but there are many more cases in which you wouldn’t.

Before we dive into just how we were able to access these poorly configured sensitive blobs, we must understand some technical details about Azure Blob storage structure.

Understanding Azure’s Blob Storage Structure

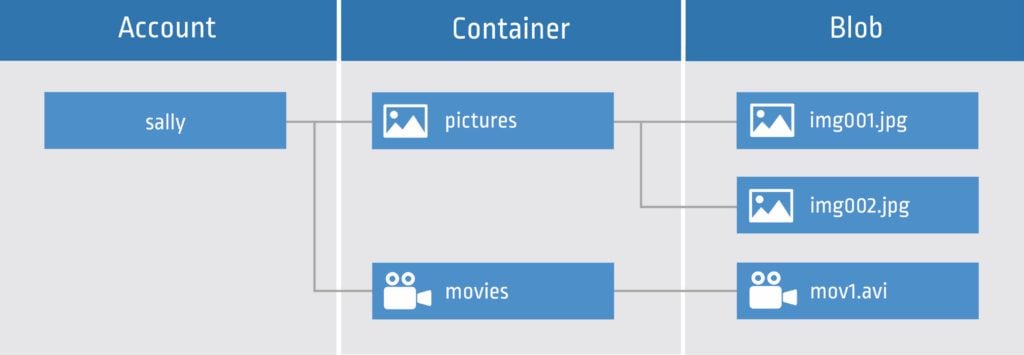

According to Azure documentation, Blob storage offers three types of resources:

A storage account which provides a unique namespace in Azure for your data.

A container in the storage account, which organizes a set of blobs, similar to a directory in a file system.

Every container is associated with a public access level.

This access level can either be:

1. Private – no public read access. The container and its blobs can be accessed only with an authorized request. This option is the default for all new containers.

2. Blob – Public read access for blobs only. Blobs within the container can be read by anonymous request, but container data is not available anonymously. Anonymous clients cannot enumerate the blobs within the container.

3. Container – Public read access for container and its blobs. Container and blob data can be read by anonymous request, except for container permission settings and container metadata. Clients can enumerate blobs within the container by anonymous request but cannot enumerate containers within the storage account.
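To make the difference between the three levels concrete, here is a minimal sketch that models them in Python. The enum and helper names are illustrative assumptions, not part of any Azure SDK. The only Azure-specific detail assumed is that the REST API reports the level in the `x-ms-blob-public-access` response header with the values `blob` or `container`, and omits the header for private containers.

```python
from enum import Enum
from typing import Optional


class PublicAccess(Enum):
    """The three container public access levels listed above."""
    PRIVATE = "private"
    BLOB = "blob"
    CONTAINER = "container"


def from_header(value: Optional[str]) -> PublicAccess:
    """Map an x-ms-blob-public-access header value to an access level.

    Assumption: a missing or empty header means the container is private.
    """
    if not value:
        return PublicAccess.PRIVATE
    return PublicAccess(value.lower())


def anonymous_can_read_blob(level: PublicAccess) -> bool:
    """Anonymous clients can read a blob if they already know its URL."""
    return level in (PublicAccess.BLOB, PublicAccess.CONTAINER)


def anonymous_can_list_blobs(level: PublicAccess) -> bool:
    """Only the Container level lets anonymous clients enumerate blobs."""
    return level is PublicAccess.CONTAINER
```

The distinction in the last helper matters for the rest of this post: with the Blob level an outsider still has to know a file's exact name, while the Container level hands over the full file listing.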

Some options that Azure Blob offers for authorizing access to resources are Shared Key (storage account key) and Shared access signature (SAS).

The last type of resource that Azure Blob storage offers, besides the storage account and the container, is of course the blob, which resides in a container.

There are three types of Blobs:

- Block

- Append

- Page

You can specify the blob type when you create the blob initially. Block blobs are the ones that store text and binary data, and they are the ones we’ll focus on.

Figure 1: The relationship between storage account, containers, and blobs.

In simple words, a storage account is like an account name, and a container is like a directory that contains files.

We can access our stored files via a URL, which always follows this pattern:

http://<storage-account>.blob.core.windows.net/<container-name>/<file-name>

For example, suppose our storage account is named mystorageaccount and we have a container called mycontainer with a file called myfile inside that container – the access URL would be:

http://mystorageaccount.blob.core.windows.net/mycontainer/myfile
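The URL pattern above can be expressed as a small helper. This is only a sketch: the function name `blob_url` is hypothetical, and defaulting to `https` is my assumption (the examples in this post use `http`, which the `scheme` parameter reproduces).

```python
from urllib.parse import quote


def blob_url(account: str, container: str, blob_name: str,
             scheme: str = "https") -> str:
    """Build the access URL for a blob from its three 'variables'."""
    host = f"{account}.blob.core.windows.net"
    # Percent-encode the path parts; "/" is kept because blob names
    # may use it as a virtual folder separator.
    return f"{scheme}://{host}/{quote(container)}/{quote(blob_name, safe='/')}"


# Reproduces the example URL from the text.
print(blob_url("mystorageaccount", "mycontainer", "myfile", scheme="http"))
```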

Hunting Publicly Open Blobs

Knowing the importance of properly permissioned files, we wanted to investigate the volume of sensitive information that is publicly available on Azure’s cloud.

Spoiler alert: we found millions of files, many containing sensitive information including individuals’ medical records and personal data, companies’ contracts, signatures, invoices, and much more. In one case, we contacted an American electronics repair company whose invoices and pictures of customers’ products, including personal details and UIDs, were publicly open, and helped them change the access level of those files to private.

So how did we get there? We built an automated tool that scans the Microsoft Azure cloud for publicly open sensitive files stored in the Blob storage service. The tool’s core logic is built on the three “variables” in the Blob storage URL: storage account, container name and file name. If we knew the storage account, container and file name of every publicly open sensitive file, we could access each one using the URL pattern established above. But since we don’t know these values for the sensitive files (or for any other files, actually), we needed a way to find them efficiently. We could try to guess all three variables at once, but the chance of guessing all of them correctly is extremely low.

So, we decided to separate our problem into different parts, first finding storage accounts, then finding containers inside these storage accounts, and later on finding the sensitive files.

Finding Storage Accounts

First, we attempted to find Azure storage accounts using the Blob storage URL pattern – knowing that every resource endpoint is:

<storage-account>.blob.core.windows.net

which only has one variable and the hardcoded string “.blob.core.windows.net”.

We took some educated guesses about what the storage account names could be, and for every candidate name, we sent a DNS query for the corresponding hostname to a DNS server.

We checked the answer packet from the DNS server to see if it contained an IP address for that hostname, and if we did get a valid address, we knew that the storage account existed.
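The DNS check described above can be sketched with Python's standard library. This is not the code of our scanner; the function name and the injectable `resolver` parameter are illustrative assumptions. The sketch also relies on the Azure naming rule that storage account names are 3 to 24 characters long and contain only lowercase letters and digits, which lets us skip impossible candidates without a lookup.

```python
import re
import socket
from typing import Callable, Optional

BLOB_SUFFIX = ".blob.core.windows.net"
# Storage account names: 3-24 characters, lowercase letters and digits only.
_ACCOUNT_NAME = re.compile(r"[a-z0-9]{3,24}")


def resolve_account(name: str,
                    resolver: Callable[[str], str] = socket.gethostbyname
                    ) -> Optional[str]:
    """Return the IP address of the account's blob endpoint, or None.

    A successful DNS resolution is treated as evidence that the
    storage account exists.
    """
    if not _ACCOUNT_NAME.fullmatch(name):
        return None  # cannot be a valid account name; skip the lookup
    try:
        return resolver(name + BLOB_SUFFIX)
    except OSError:  # socket.gaierror is a subclass of OSError
        return None  # no DNS answer: the account does not exist
```

Passing the resolver as a parameter keeps the function easy to exercise without network access, for example by handing it a stub that raises `socket.gaierror` for unknown hostnames.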

We scanned about 200 million candidate storage account names (about 1 million per day) and found about 100k valid ones.

It is important to note that the number of accounts scanned per day depends on the machine hardware and network connection the search engine is running on. If we put our search engine on a stronger machine or even on many machines simultaneously, we would get much bigger numbers.

Finding Containers

After we found some storage accounts, we wanted to find any publicly open files stored within them. Blindly guessing container names and file names together is a futile effort, so we took the following approach:

First, we made a logical list of possible container names.

For every storage account we found earlier, and for every container name from our list, we sent the “List Blobs” HTTP request to the storage account endpoint.

As written in the Azure Blob service REST API documentation, the “List Blobs” operation returns a list of the blobs under the specified container.

So, we asked the endpoint to list all the files inside our given container. But why should it trust us and send us this information?

Well, as we now know, only containers with the ‘Public read access for container and its blobs’ access level will allow this kind of request from an unauthorized client. (This is documented on the Azure List Blobs page.)

So, we searched for public containers in the valid storage accounts we found earlier, and for every container name from our list, we requested a list of the files inside that container. As you probably understand, only containers that exist and have the ‘Public read access for container and its blobs’ access level would return a valid answer to our request.
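For readers who want to see what an anonymous “List Blobs” request looks like, here is a minimal sketch using only the standard library. It is not our scanner's code, and the function names are hypothetical. It assumes the documented REST form of the operation (a GET on the container URL with `restype=container&comp=list`) and the XML response layout `EnumerationResults/Blobs/Blob/Name`. It reads only the first page of results and does not follow `NextMarker`.

```python
import urllib.error
import urllib.request
import xml.etree.ElementTree as ET
from typing import List, Optional


def list_blobs_url(account: str, container: str) -> str:
    """URL of the List Blobs operation for a given container."""
    return (f"https://{account}.blob.core.windows.net/"
            f"{container}?restype=container&comp=list")


def parse_blob_names(xml_body: bytes) -> List[str]:
    """Extract blob names from a List Blobs XML response body."""
    root = ET.fromstring(xml_body)
    return [el.text for el in root.findall("./Blobs/Blob/Name") if el.text]


def list_public_blobs(account: str, container: str,
                      timeout: float = 10.0) -> Optional[List[str]]:
    """Return blob names if the container allows anonymous listing.

    Returns None when the container is missing, is not publicly
    listable, or the request fails (first page of results only).
    """
    try:
        url = list_blobs_url(account, container)
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return parse_blob_names(resp.read())
    except urllib.error.URLError:  # also covers HTTPError (e.g. 404)
        return None
```

Only run a check like this against storage accounts you own or are authorized to test.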

We scanned about 730 billion containers and discovered thousands of open public containers.

Finding the Sensitive Files

Now that we had the file lists, we temporarily downloaded the files and ran our own logic to check whether each file was sensitive. There are many great open source tools, like https://github.com/securing/DumpsterDiver, that search volumes of data to check whether they include secrets.

We found 50 million publicly open files, with a discovery rate of 25k publicly open files every day.

Of course, we did not retain any of the screened files and permanently deleted them after the check was done.
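To give a feel for what a sensitivity check can look like, here is a deliberately simple sketch. It is neither the logic we used nor DumpsterDiver's. It is an illustrative assumption that flags text containing an email address or a number that passes the Luhn checksum used by payment cards. The names `classify` and `luhn_valid` are hypothetical.

```python
import re
from typing import Set

EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# 13 to 19 digits, optionally separated by single spaces or hyphens.
CARD_CANDIDATE = re.compile(r"\b(?:\d[ -]?){13,19}\b")


def luhn_valid(number: str) -> bool:
    """Check a candidate card number with the Luhn checksum."""
    digits = [int(c) for c in number if c.isdigit()]
    total = 0
    for i, d in enumerate(reversed(digits)):
        if i % 2 == 1:  # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return len(digits) >= 13 and total % 10 == 0


def classify(text: str) -> Set[str]:
    """Return the kinds of sensitive data spotted in a piece of text."""
    findings = set()
    if EMAIL.search(text):
        findings.add("email")
    if any(luhn_valid(m.group()) for m in CARD_CANDIDATE.finditer(text)):
        findings.add("credit_card")
    return findings
```

A real scanner needs far more than this (file-type handling, entropy checks for keys, language-specific ID formats), but the shape is the same: cheap pattern matching first, then a stricter validation step to cut false positives.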

Our Findings

Personally Identifiable Information (PII)

Based on our research, we found about 2.5 million records and files that included information about individuals (see Figure 2). This data is often called personally identifiable information (PII) and is protected by specific laws. It may include a person’s name, gender, age, Social Security number (SSN), phone number, email and home addresses, employee IDs, salaries, employment applications and internal email conversations.

Figure 2: A redacted employment application form

Sensitive Personal Information (SPI)

This data group contains certain categories of more sensitive data, such as personal health information, credit cards and financial data.

Personal Health Information

Health data should be subject to additional safeguards due to the sensitive nature of the patient’s personal details. Cybercriminals are specifically interested in this information because a single record can give them a significant amount of personally identifiable information about an individual, which can then be used or sold.

We found about 2,300 files related to individuals’ health status.

Financial Data

These files contain credit card numbers, companies’ income, profit and loss data, stocks, market value and other finance-related data. The sensitivity of this type of data is obvious, and we found about 2,000 files containing financial information.

Confidential Information (CI)

This contains all non-public, business-related information.

Invoices

You might not think invoices are a big deal, but they can contain personally identifiable data as well as payment and credit card information.

In addition, there is another risk in leaving invoices publicly open on the internet: each one exposes information about the service provider, allowing malicious hackers or competing companies to infer internal sales data. As part of this research, we found almost one million invoice files.

Figure 3: Example of an invoice (redacted)

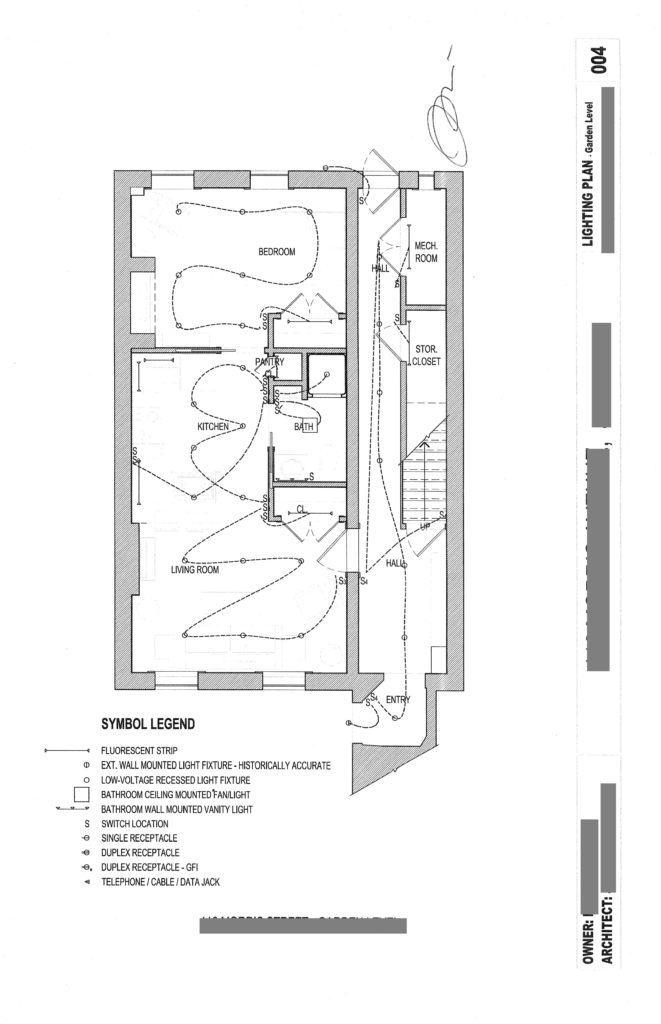

Agreements, Contracts, and Assets Structure Plans

We also found several corporate contracts and structure plans, which included individuals’ and companies’ digital and physical signatures as well as (in the case of the structure plans) the asset owner, the architect and detailed plans such as floor, power and demolition plans. With this data, attackers could plan a well-designed break-in, tamper with a building’s electrical systems or carry out other malicious actions.

During the research, we found almost one million such data files.

Figure 4: Structure lighting plan (redacted)

Log Files

The point of a log file is to keep track of what’s happening behind the scenes. If something should happen within a complex system, you have access to a detailed list of events that took place before the malfunction. Log files include whatever the application, server, or OS thinks needs to be recorded, and they often contain secrets, passwords, or other sensitive data about the system they were written on. For example, verbose logging that writes the administrator password to a world-readable file can give attackers administrator access to all system data.

Our research found more than half a million log files.

Encryption Keys and Passwords

There are many file formats that can store encryption keys and passwords. A leak of any one of those can put both companies and individuals at huge risk.

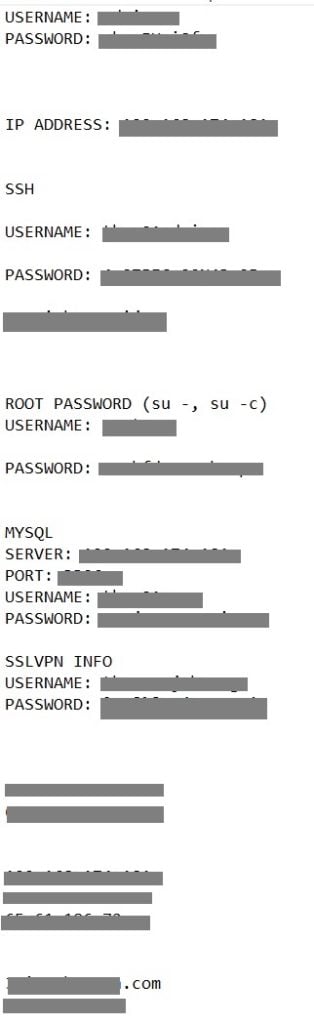

We found encryption and firmware keys; SSH, SSL VPN, SMTP and MySQL usernames and passwords; and more.

Figure 5: Raw text file containing usernames and passwords (redacted)

Introducing BlobHunter

This research was eye-opening in so many ways and truly highlighted the problem and inherent risk of misconfigured cloud storage systems. While we focused this research on Microsoft Azure, the risk is equally present in most cloud environments. We recommend that everyone review the containers and files they store in any cloud system and make sure they have the correct access permissions. It’s crucial to follow the best practices that Microsoft published in “Security recommendations for Blob storage”:

https://docs.microsoft.com/en-us/azure/storage/blobs/security-recommendations

To help Azure customers, I wrote a new open-source tool called BlobHunter that iterates over the storage accounts in your Azure subscriptions and checks their files’ access levels.

It produces a detailed CSV file of the open containers in the Azure subscriptions your Azure user has access to.
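As a rough illustration of the reporting step only, the sketch below filters container records down to the publicly accessible ones and writes them to CSV. This is not BlobHunter's code or its output format. The record keys, the column names and the function name are all assumptions made for the example, and the records are assumed to have been collected beforehand.

```python
import csv
import io
from typing import Dict, Iterable, Optional, TextIO

# Hypothetical column layout; BlobHunter's real CSV may differ.
FIELDS = ["storage_account", "container", "public_access"]


def write_open_containers_csv(
        containers: Iterable[Dict[str, Optional[str]]],
        out: TextIO) -> int:
    """Write only publicly accessible containers to CSV.

    Returns the number of open containers written.
    """
    writer = csv.DictWriter(out, fieldnames=FIELDS, extrasaction="ignore")
    writer.writeheader()
    count = 0
    for record in containers:
        # "blob" and "container" are the two public access levels.
        if record.get("public_access") in ("blob", "container"):
            writer.writerow(record)
            count += 1
    return count


# Example with made-up records.
records = [
    {"storage_account": "mystorageaccount", "container": "mycontainer",
     "public_access": "container"},
    {"storage_account": "mystorageaccount", "container": "private-data",
     "public_access": None},
]
buffer = io.StringIO()
print(write_open_containers_csv(records, buffer), "open container(s)")
```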

Please have a look at the tool README file on GitHub for more info about the tool.

The BlobHunter tool’s link:

https://github.com/cyberark/blobhunter

No one would debate the importance of cloud storage; it’s just critical that it is used in the most secure way possible. This blog post was intended to give you a better understanding of the importance of proper access configuration for cloud-stored files. Most cloud providers, including Microsoft, give organizations the tools to secure their data, but as our research discovered, organizations don’t always put them into practice. Don’t take unnecessary risks with your sensitive data! Make sure your files are safe in the cloud, with the correct access levels. Get started with BlobHunter today!

Please note: Where possible, we notified all organizations where publicly opened sensitive files were found and advised that they provide appropriate access configurations or remove the file.