As we stand on the brink of the agentic AI revolution, it’s crucial to understand the profound impact AI agents will have on how people, applications and devices interact with systems and data. This blog post aims to shed light on these changes and the significant security challenges they bring.

It’s important to note that given the rapid pace of advancements in this field, we could not have anticipated many of the challenges discussed here just a few months ago. While not exhaustive, the examples highlighted in this blog reveal the dramatic shifts and potential risks associated with the widespread adoption of agentic AI.

Agentic AI: An Inescapable Reality for Enterprises

AI agents, or agentic AI, refer to autonomous systems designed to perform tasks or make decisions on behalf of users. These agents can perceive their environment, process information and take actions to achieve specific goals. By leveraging advanced algorithms and machine learning, AI agents can adapt to new information and improve their performance over time, making them valuable tools for enhancing productivity and efficiency. Although AI agents are not widely used in major production environments today, analysts predict their rapid adoption due to their enormous benefits to organizations.

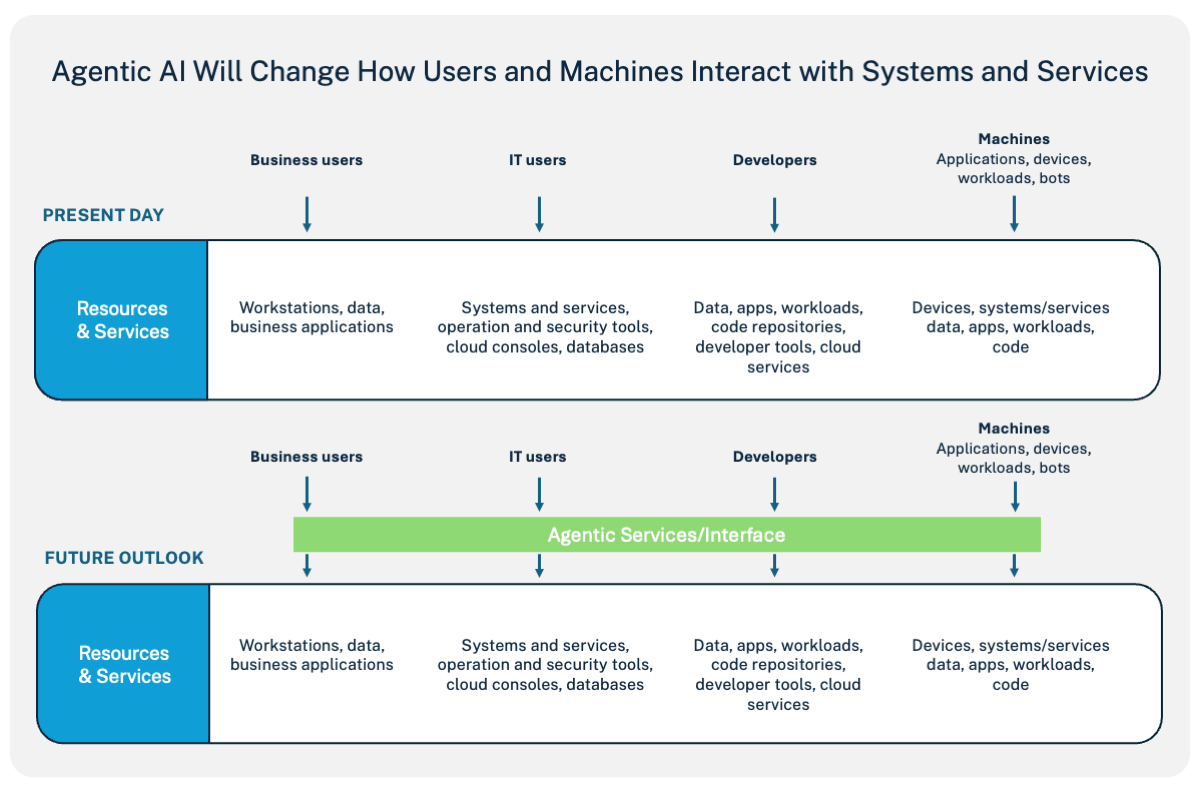

All identities, including business users, IT professionals, developers and even devices and applications, will start interacting with resources and services through a layer of agents.

Enterprises will utilize these agents in multiple ways. They might use out-of-the-box agents provided by operating systems, browsers and platforms or integrate agents into everyday tools like Microsoft Teams. Additionally, many companies will develop their own agents or use agents as a service provided by SaaS companies. Because agents are becoming part of daily activities and a new interface for any service, even the user’s operating system, avoiding agentic AI will be nearly impossible for most organizations.

Agentic AI: 5 Security Risks You Didn’t See Coming

As we navigate this inevitable integration, it’s critical to be aware of the security implications that come with it. The following are five security risks you might not have anticipated:

1. Humans and Their Workstations as Advanced Productivity Machines

Whether users harness agentic capabilities provided by the workstation, the browser or SaaS applications (likely a combination of all three), their productivity could go through the roof. Agents will allow users to become managers of their own virtual teams that can operate interactively and autonomously. As a result, the risk posed by a compromised “regular” business user who relies heavily on AI increases dramatically, whether the compromise comes from an internal threat or an external adversary.

2. Shadow AI Agents: Unseen Autonomy

AI agents can be deployed autonomously behind the scenes by AI and development teams. Users can also deploy them through SaaS applications, operating system tools or the user’s browser. In all forms, we will see the adoption of agents without proper IT and security processes. There will likely be situations where IT has no visibility at all.

This lack of visibility poses significant security risks, as unauthorized AI agents could operate unchecked and introduce risk in unexpected places. An appropriate term for these unauthorized and unseen AI agents is ‘Shadow AI agents,’ as they operate in the shadows without proper oversight.
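One way to picture the visibility gap is as a simple set difference: agents that have been observed running, minus agents that IT has approved. The sketch below is illustrative only. The `ObservedAgent` shape, the `find_shadow_agents` helper and all agent names are hypothetical, and real inputs would have to come from whatever discovery or logging tooling an organization actually has.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ObservedAgent:
    """An agent identity seen in logs or telemetry (hypothetical shape)."""
    agent_id: str
    source: str  # where it was seen, e.g. "browser", "saas", "dev-team"


def find_shadow_agents(observed, approved_ids):
    """Return observed agents that are missing from the approved inventory."""
    approved = set(approved_ids)
    return [agent for agent in observed if agent.agent_id not in approved]


# Illustrative data only; these names are assumptions, not real products.
APPROVED = {"hr-onboarding-bot", "it-helpdesk-agent"}
OBSERVED = [
    ObservedAgent("it-helpdesk-agent", "saas"),
    ObservedAgent("sales-email-drafter", "browser"),
    ObservedAgent("nightly-deploy-agent", "dev-team"),
]

if __name__ == "__main__":
    for agent in find_shadow_agents(OBSERVED, APPROVED):
        print(f"unregistered agent: {agent.agent_id} (seen via {agent.source})")
```

The comparison itself is trivial. The hard part in practice is producing a trustworthy list of observed agents in the first place, which is exactly the visibility problem described above.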

3. Developers as Full-Stack R&D and Operations Departments

The introduction of generative AI has improved developer productivity. And now, AI agents can completely transform developers from individual contributors into comprehensive one-person R&D and operations departments. With the adoption of AI agents, developers can independently manage the entire end-to-end application development and maintenance process, including coding, integration, QA, deployment, production and troubleshooting.

With the introduction of AI agents, developers’ productivity and responsibilities grow, and so does their level of privilege. Consequently, if a developer’s identity is compromised, the risk escalates dramatically, because that identity is now among the most powerful in the enterprise.

4. Human-in-the-Loop: Risks and Impacts

As organizations adopt agentic AI, the human-in-the-loop process becomes critical in validating and ensuring agents perform as intended. These humans will have significant responsibilities, including approving exceptions and requests from agents. Human input will also influence the future behavior of these self-learning AI agents.

Attackers may target these individuals to infiltrate the architecture, escalate privileges and gain unauthorized access to systems and data. The human-in-the-loop process is essential for maintaining control and oversight, but it also presents a significant vulnerability if these key individuals are compromised.

5. Managing Millions: AI Agent Oversight

Machine identities are proliferating at an unprecedented rate, now outnumbering human identities by as much as 45-to-1. And that could just be the tip of the iceberg. With projections like Jensen Huang’s vision for NVIDIA (50,000 humans managing 100 million AI agents per department), the ratio could skyrocket to 2,000-to-1 or more.

Running millions of AI agents in an enterprise environment becomes even more plausible given that best practices for building agent-based applications suggest breaking tasks into multiple smaller, specialized agents that work together to achieve a broader goal.

This exponential increase and sheer volume of machine identities pose significant challenges in managing and securing these identities.
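The ratios quoted above follow from simple division. As a quick check, the snippet below recomputes the projected figure from the two numbers cited (50,000 humans and 100 million agents) and compares it with today’s 45-to-1 estimate. The function name is made up for illustration.

```python
def machine_to_human_ratio(machine_identities: int, human_identities: int) -> float:
    """Return how many machine identities exist per human identity."""
    if human_identities <= 0:
        raise ValueError("human_identities must be positive")
    return machine_identities / human_identities


# Figures taken from the text: 100 million agents, 50,000 humans.
projected = machine_to_human_ratio(100_000_000, 50_000)
print(f"projected ratio: {projected:,.0f}-to-1")  # 2,000-to-1

# Compared with the 45-to-1 figure cited for today.
growth = projected / 45
print(f"roughly {growth:.0f}x today's ratio")  # roughly 44x
```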

The Future of Agentic AI Security

To deploy agentic AI at scale, organizations must ensure their deployments are safe, compliant and trusted. Key requirements include full visibility into activities, strong authentication mechanisms, least privilege access, just-in-time (JIT) access controls and comprehensive session auditing to trace actions back to their identities.

This is essential for both human and machine identities. As we navigate the complexities of agentic AI, these measures will be crucial in mitigating the associated security risks.
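To make three of these requirements more concrete, here is a minimal in-memory sketch of how least privilege, JIT access and auditing can fit together for an agent identity. It is an assumption-laden illustration, not a description of any product: `JitAccessBroker`, `Grant`, the permission strings and the agent name are all hypothetical, and authentication and visibility are deliberately left out.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone


@dataclass
class Grant:
    identity: str
    permission: str
    expires_at: datetime


@dataclass
class JitAccessBroker:
    """Hypothetical broker: short-lived grants, allow-list, audit trail."""
    # Least privilege: the only permissions each identity may ever request.
    allowed: dict
    grants: list = field(default_factory=list)
    audit_log: list = field(default_factory=list)

    def _audit(self, now, identity, permission, outcome):
        # Every decision is recorded so actions trace back to an identity.
        self.audit_log.append((now, identity, permission, outcome))

    def request(self, identity, permission, ttl_seconds, now=None):
        """Grant a permission for a limited time if the allow-list permits it."""
        now = now or datetime.now(timezone.utc)
        if permission not in self.allowed.get(identity, set()):
            self._audit(now, identity, permission, "denied")
            return False
        expires = now + timedelta(seconds=ttl_seconds)
        self.grants.append(Grant(identity, permission, expires))
        self._audit(now, identity, permission, "granted")
        return True

    def is_authorized(self, identity, permission, now=None):
        """Check for an unexpired grant; expired grants no longer count."""
        now = now or datetime.now(timezone.utc)
        ok = any(
            g.identity == identity
            and g.permission == permission
            and g.expires_at > now
            for g in self.grants
        )
        self._audit(now, identity, permission, "used" if ok else "rejected")
        return ok


if __name__ == "__main__":
    broker = JitAccessBroker(allowed={"invoice-agent": {"read:invoices"}})
    start = datetime(2025, 1, 1, tzinfo=timezone.utc)
    broker.request("invoice-agent", "read:invoices", ttl_seconds=300, now=start)
    later = start + timedelta(seconds=301)
    print(broker.is_authorized("invoice-agent", "read:invoices", now=later))
    for entry in broker.audit_log:
        print(entry)
```

The design choice worth noting is that access is never standing: an agent holds a permission only for the lifetime of a grant, and both the grant and each use leave an audit entry. The same pattern applies whether the identity belongs to a human or a machine.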

Yuval Moss, CyberArk’s vice president of solutions for Global Strategic Partners, joined the company at its founding.

Editor’s note: For a deeper dive into the transformative impact of AI agents on cybersecurity and automation, listen to CyberArk Labs’ Lavi Lazarovitz on the Security Matters podcast episode, “Building Trust in AI Agents.” Lavi shares valuable insights on AI-driven automation, security challenges and the importance of building trust in these systems. You can listen to the episode on most major podcast platforms.