2025 marks a pivotal moment: it’s the year AI agents move from experimental technology to an essential part of enterprise operations, one that can enable growth and scale. These digital counterparts extend human intelligence, redefine workflows and open a new frontier in automation, cybersecurity and decision-making.

Given these advancements, enterprises that strategically adopt AI agents early and proactively address cybersecurity may find themselves leading their industries through this shift, especially as agents begin autonomously collaborating with one another.

What Are AI Agents?

AI agents, essentially machine identities, are increasingly autonomous systems designed to perform tasks, make decisions and continuously learn from their interactions with their environment. Unlike traditional software, which relies on explicit human input at every step, AI agents operate independently, applying models for reasoning, prediction and action execution. These include reasoning engines, multimodal models and other AI frameworks that enable agents to analyze complex situations, adapt to new information and optimize their performance over time.

In an ideal world, AI agents act as an intelligent force multiplier, allowing enterprises to offload cognitive burdens while enhancing security, efficiency and scalability. We will delegate the worst parts of our jobs to AI, such as distilling chaotic brainstorms into clear strategies, dynamically optimizing marketing campaigns without human intervention and identifying hidden patterns in massive datasets before we even know what to look for.

Key Characteristics of AI Agents:

- Autonomy: Execute tasks and make decisions without constant human intervention.

- Adaptability: Learn from past experiences and refine their performance over time.

- Proactivity: Anticipate needs, suggest actions and initiate processes before being explicitly prompted.

- Collaboration: Interact seamlessly with humans, other agents and enterprise systems.

What Exactly Is an AI Agent (and What Isn’t)?

AI agents represent a significant evolution in computational intelligence, distinct from both traditional automation systems and scripted chat interfaces. Unlike predefined workflows, AI agents are autonomous, adaptive entities that perceive their environment, reason over complex inputs and iteratively refine their decisions. This capability rests on machine learning architectures such as large language models (LLMs), reinforcement learning frameworks and multimodal processing, which let agents generate dynamic responses and improve their performance based on feedback.

Understanding the distinctions between AI agents and adjacent technologies is crucial:

- AI automation: Deterministic, rule-based systems that execute predefined workflows with minimal variation. These systems operate on structured data and lack adaptive learning capabilities. An example is an invoice processing engine that applies static business rules to classify and approve payments.

- Chatbots: Dialog-driven systems optimized for natural language interaction within constrained parameters. While chatbots enhance accessibility and user engagement, they operate within predefined ontologies and lack autonomous decision-making.

- AI agents: Machine identities built for increasingly autonomous reasoning, capable of formulating goals, executing multi-step plans and refining their strategies based on feedback loops. These systems leverage generative models, causal inference techniques and multi-agent coordination to adapt to changing environments dynamically. Unlike traditional automation, AI agents generalize across tasks, synthesize multimodal information and optimize their objectives in real time while still requiring human oversight to ensure alignment and control.
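To make the distinction concrete, the following Python sketch models the kind of invoice-processing engine described under AI automation. The `Invoice` fields, the vendor allowlist and the approval threshold are hypothetical values chosen for illustration. Note what the engine lacks: it has no goals, keeps no memory and never learns from outcomes, so the same invoice always receives the same decision, and changing its behavior means a person editing the rules.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Invoice:
    vendor: str
    amount: float
    has_po: bool  # whether a purchase order number is attached


# Hypothetical static business rules: fixed at design time, never updated by the system.
APPROVED_VENDORS = {"acme", "globex"}
AUTO_APPROVE_LIMIT = 10_000.00


def classify_invoice(invoice: Invoice) -> str:
    """Apply the rules in a fixed order; identical inputs always give identical outputs."""
    if invoice.vendor not in APPROVED_VENDORS:
        return "reject"
    if not invoice.has_po:
        return "manual_review"
    if invoice.amount <= AUTO_APPROVE_LIMIT:
        return "approve"
    return "manual_review"


print(classify_invoice(Invoice("acme", 2_500.00, has_po=True)))    # approve
print(classify_invoice(Invoice("initech", 120.00, has_po=True)))   # reject
print(classify_invoice(Invoice("acme", 48_000.00, has_po=True)))   # manual_review
```

An AI agent handling the same task would instead be expected to weigh context the rules never anticipated and adjust its approach based on feedback, while still operating under human oversight.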

The intelligence of AI agents emerges from their ability to integrate diverse data modalities, including text, images, sensor inputs and structured databases, while employing self-supervised learning, probabilistic reasoning and reinforcement learning. This allows them to operate in open-ended, partially observable environments where explicitly programming every behavior is infeasible.

Ultimately, AI agents will not merely be tools but autonomous collaborators, capable of iterative self-improvement, strategic problem-solving and dynamic adaptation.

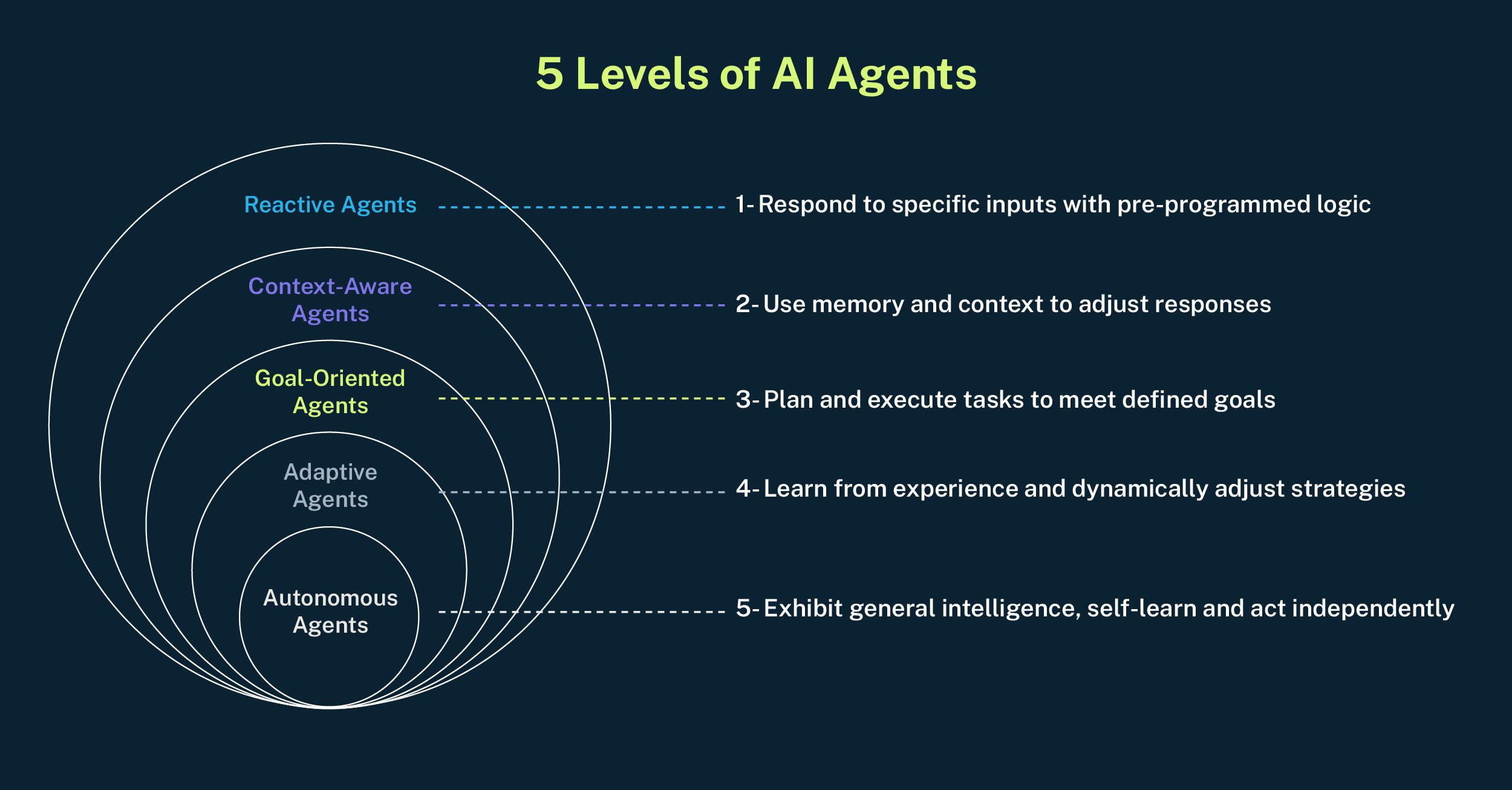

Understanding the 5 Levels of AI Agents

AI agents exist on a spectrum of capability and autonomy, ranging from basic tools to fully autonomous systems:

1. Reactive agents: Respond to predefined inputs with scripted logic.

2. Context-aware agents: Adjust responses based on memory and contextual understanding.

3. Goal-oriented agents: Plan and execute tasks to meet specific objectives.

4. Adaptive agents: Learn dynamically, refining strategies based on experience.

5. Autonomous agents: Exhibit general intelligence, self-learning and independent decision-making across complex scenarios.

The progression from reactive to autonomous agents reflects the growing sophistication of AI, moving closer to human-like intelligence and adaptability.
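The lower three levels can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not a reference design: the canned responses, the `again` follow-up and the goal-to-plan table are hypothetical, and the plan lookup stands in for the model-driven planning a real goal-oriented agent would perform. Levels 4 and 5 depend on learning from experience, which a snippet this small cannot meaningfully capture.

```python
from collections import deque


class ReactiveAgent:
    """Level 1: maps a known input to a scripted response; keeps no memory."""

    RESPONSES = {"reset password": "Sent a reset link.", "status": "All systems nominal."}

    def respond(self, message: str) -> str:
        return self.RESPONSES.get(message, "Sorry, I can't help with that.")


class ContextAwareAgent(ReactiveAgent):
    """Level 2: the same scripted skills, plus a short memory of recent requests."""

    def __init__(self, memory_size: int = 5):
        self.history = deque(maxlen=memory_size)

    def respond(self, message: str) -> str:
        # Resolve a follow-up such as "again" using the most recent request in memory.
        if message == "again" and self.history:
            message = self.history[-1]
        self.history.append(message)
        return super().respond(message)


class GoalOrientedAgent:
    """Level 3: given a goal, selects an ordered plan of steps and executes it."""

    # Hypothetical goal -> plan table; a real agent would generate plans with a model.
    PLANS = {"onboard user": ["create account", "assign role", "send welcome email"]}

    def __init__(self, tools: dict):
        self.tools = tools  # step name -> callable that performs the step

    def achieve(self, goal: str) -> list[str]:
        plan = self.PLANS.get(goal)
        if plan is None:
            raise ValueError(f"no plan for goal: {goal}")
        return [self.tools[step]() for step in plan]


agent = ContextAwareAgent()
print(agent.respond("status"))  # All systems nominal.
print(agent.respond("again"))   # All systems nominal. (resolved from memory)
```

Each class adds one capability to the level below it: the context-aware agent reuses the reactive agent's scripted skills but resolves follow-ups from memory, and the goal-oriented agent turns an objective into a sequence of tool calls.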

AI Agents: Extending Human Capabilities

AI agents will not just be tools but digital extensions of human intelligence. By replicating and enhancing cognitive functions, they enable humans to offload routine, time-consuming tasks and focus on strategic decision-making. This shift fundamentally changes how organizations work, interact and innovate.

Key Areas of Transformation:

- Automated decision-making: AI agents process vast datasets in real time, identifying patterns and making precise, timely decisions.

- Knowledge work automation: From research to strategic planning, agents handle tasks traditionally performed by humans.

- Digital representation: AI agents can autonomously act on behalf of businesses or individuals in digital interactions, managing communications, negotiations and engagements.

As AI agents evolve, they blur the boundary between human and machine intelligence, creating a collaborative paradigm in which humans focus on creativity and strategy while agents handle execution.

The Paradigm Shift: AI Agents Replacing Core Functions

Soon (Q3–Q4 2025), we anticipate the first real-world applications of Level 4 (adaptive) agent systems replacing core business, security and decision-making functions. Organizations are redesigning their operational structures to integrate AI agents as primary decision-makers and execution engines.

Driving Factors of Adoption:

- Efficiency: AI agents perform tasks faster and more cost-effectively than their human counterparts. These 24-hour workers never sleep or take coffee breaks.

- Data-driven accuracy: By drawing on vast datasets, agents make decisions with unparalleled precision and at a scale beyond human capacity.

- Reliability and trust: As models advance, they will be able to minimize human error and ensure consistent, optimized performance.

Industries Leading the Way:

- Finance: AI agents manage trading algorithms, fraud detection and compliance monitoring.

- Healthcare: AI agents assist in diagnostics, personalized treatment plans and administrative automation.

- Cybersecurity: AI agents strengthen identity security by automating threat detection and mitigating risks in real time.

How AI Agents Use Reasoning Models

Reasoning models will increasingly define AI agents, providing structured frameworks that enable them to evaluate options, predict outcomes and autonomously execute decisions. Unlike static rule-based systems, modern AI agents leverage probabilistic reasoning, reinforcement learning and multimodal data processing to refine their decision-making dynamically.

- Perception: Agents collect raw data through sensors, APIs, structured databases and multimodal inputs like text and images.

- Interpretation: AI models extract meaning from raw inputs using deep learning, probabilistic inference and retrieval-augmented generation (RAG).

- Decision-making: Agents evaluate potential actions through causal inference, probabilistic modeling and reinforcement learning, optimizing responses based on real-time feedback.

- Execution: After selecting an action, the agent autonomously carries out its decision, whether responding to a query, automating workflows or orchestrating multi-step processes.

- Adaptation: AI agents continuously improve by integrating feedback loops and meta-learning to refine their future decision-making.
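The five stages above can be illustrated with a toy alert-triage loop in Python. Everything here is a simplified assumption: `perceive` simulates alerts instead of calling real sensors or APIs, `interpret` uses a fixed severity threshold rather than deep learning or RAG, and decision-making uses an epsilon-greedy action-value estimate, a basic reinforcement learning technique, in place of the richer causal and probabilistic methods mentioned. The function and class names are hypothetical.

```python
import random
from collections import defaultdict

ACTIONS = ("ignore", "escalate")


def perceive(rng: random.Random) -> dict:
    """Perception: pull a raw event (here, a simulated alert) from the environment."""
    return {"source": "edr", "severity_score": rng.randint(0, 10)}


def interpret(event: dict) -> str:
    """Interpretation: reduce the raw event to a coarse state the policy can use."""
    return "high" if event["severity_score"] >= 7 else "low"


def environment_reward(state: str, action: str) -> float:
    """Toy feedback: escalating high-severity and ignoring low-severity alerts is correct."""
    correct = "escalate" if state == "high" else "ignore"
    return 1.0 if action == correct else -1.0


class TriageAgent:
    def __init__(self, epsilon: float = 0.1, seed: int = 0):
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.q = defaultdict(float)  # (state, action) -> estimated value
        self.n = defaultdict(int)    # (state, action) -> times tried

    def decide(self, state: str) -> str:
        """Decision-making: mostly exploit the best-known action, occasionally explore."""
        if self.rng.random() < self.epsilon:
            return self.rng.choice(ACTIONS)
        return max(ACTIONS, key=lambda a: self.q[(state, a)])

    def adapt(self, state: str, action: str, reward: float) -> None:
        """Adaptation: update the running average reward for this state-action pair."""
        key = (state, action)
        self.n[key] += 1
        self.q[key] += (reward - self.q[key]) / self.n[key]


def run(steps: int = 500, seed: int = 0) -> TriageAgent:
    env_rng = random.Random(seed + 1)
    agent = TriageAgent(seed=seed)
    for _ in range(steps):
        state = interpret(perceive(env_rng))        # perceive, then interpret
        action = agent.decide(state)                # decide
        reward = environment_reward(state, action)  # execute and observe feedback
        agent.adapt(state, action, reward)          # adapt
    return agent


trained = run()
for state in ("low", "high"):
    print(state, "->", max(ACTIONS, key=lambda a: trained.q[(state, a)]))
```

After a few hundred simulated alerts, the learned values favor escalating high-severity alerts and ignoring low-severity ones, even though neither rule is written into the agent: the preference is inferred from the reward signal in the adaptation step.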

As AI reasoning models evolve, integrating smaller, optimized models will drive more accessible, cost-efficient and scalable AI agents, pushing the boundaries of autonomy while maintaining computational efficiency.

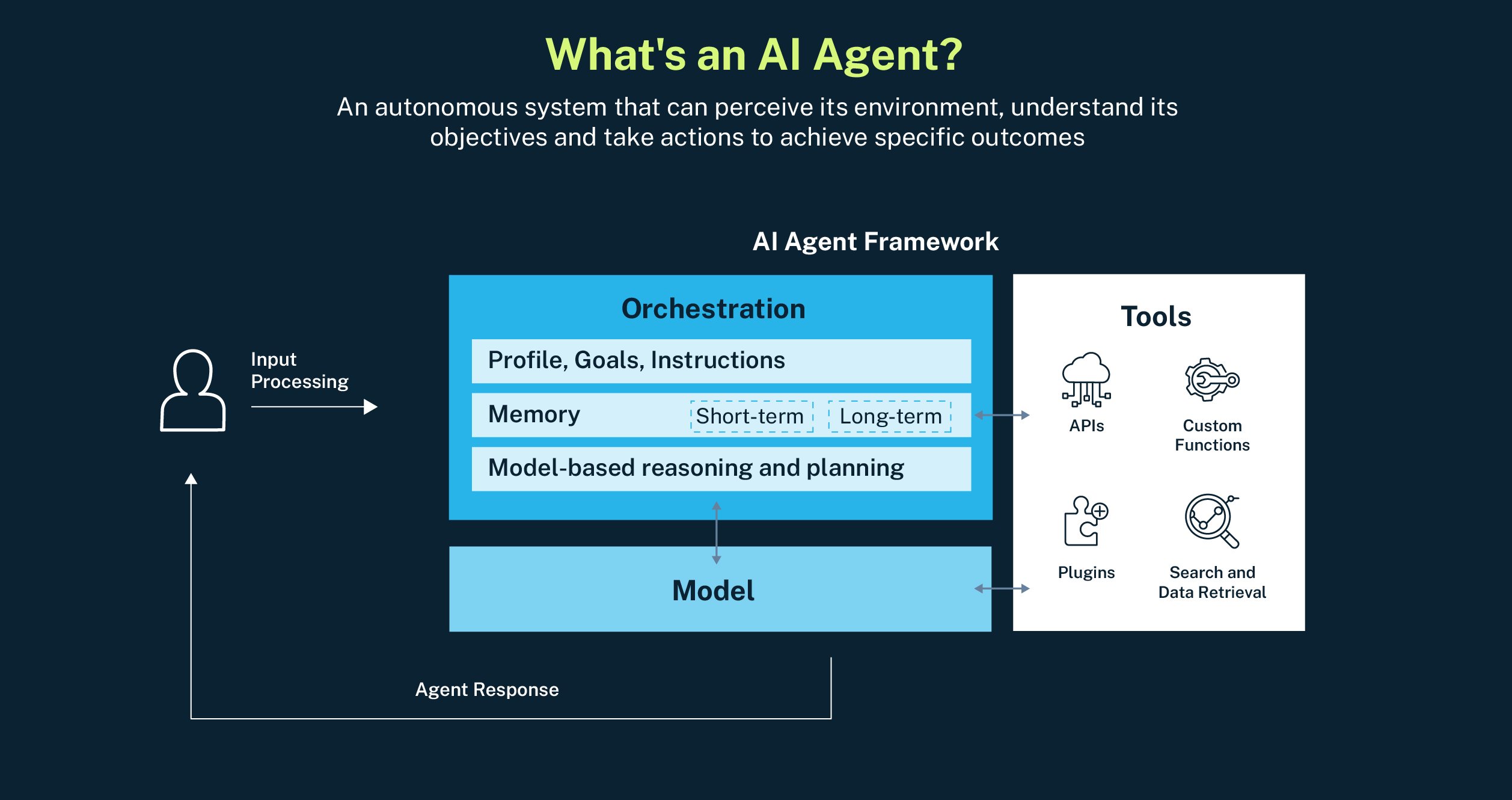

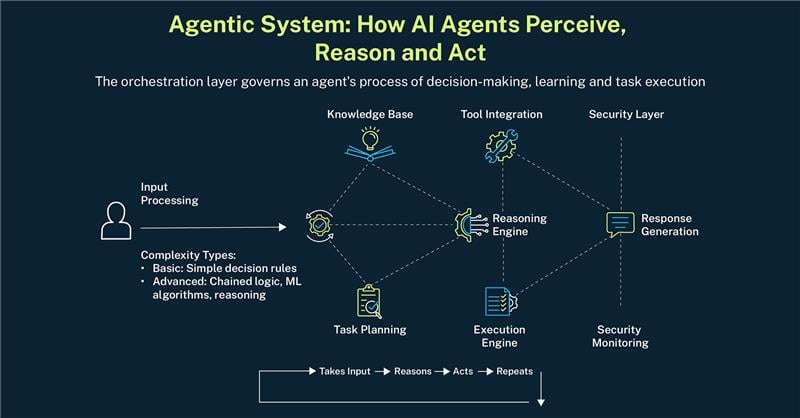

Orchestrating Digital Intelligence with the Agentic System

AI agents operate within an agentic system, where orchestration layers govern their actions, interactions and learning processes. The system keeps agents aligned with organizational objectives while they execute tasks with structured autonomy.

Key Components of the Agentic System:

- Hierarchical orchestration: High-level planning agents set objectives, while executor agents refine and execute tasks.

- Memory management: Balancing short-term and long-term data retention for contextual awareness.

- Reasoning: Leveraging advanced models to make decisions and plan actions.

- Autonomy spectrum: Agents range from reactive (task-based) to adaptive (self-optimizing), with feedback loops guiding iterative improvement.

- Feedback loop: Monitoring outcomes to refine and improve performance.

This system enables agents to take inputs, reason through complexities, act and iterate—creating a seamless optimization cycle.
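A minimal Python sketch of these components working together follows. It assumes a hypothetical `PlannerAgent` that decomposes an objective with a fixed template (standing in for model-based reasoning), `ExecutorAgent` workers that can fail transiently, a two-tier memory with a bounded short-term window and a long-term store keyed by task, and a feedback loop that retries failed tasks up to a limit. None of these names come from a specific framework.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Memory:
    # Short-term: only the most recent outcomes, for immediate context.
    short_term: deque = field(default_factory=lambda: deque(maxlen=3))
    # Long-term: the latest outcome for every task, kept for later reference.
    long_term: dict = field(default_factory=dict)

    def remember(self, task: str, outcome: str) -> None:
        self.short_term.append((task, outcome))
        self.long_term[task] = outcome


class PlannerAgent:
    """High-level agent: turns an objective into an ordered list of tasks."""

    def plan(self, objective: str) -> list[str]:
        # Hypothetical fixed decomposition standing in for model-based reasoning.
        return [f"{objective}: gather data", f"{objective}: analyze", f"{objective}: report"]


class ExecutorAgent:
    """Low-level agent: carries out a single task and reports success or failure."""

    def __init__(self, name: str, transient_failures: int = 0):
        self.name = name
        self.failures_left = transient_failures  # simulated failures to exercise the feedback loop

    def execute(self, task: str) -> tuple[bool, str]:
        if self.failures_left > 0:
            self.failures_left -= 1
            return False, f"{self.name} failed {task}"
        return True, f"{self.name} completed {task}"


def orchestrate(objective: str, planner: PlannerAgent, executors: list[ExecutorAgent],
                memory: Memory, max_attempts: int = 3) -> list[str]:
    results = []
    for i, task in enumerate(planner.plan(objective)):
        executor = executors[i % len(executors)]  # round-robin task assignment
        for _ in range(max_attempts):  # feedback loop: observe the outcome, retry on failure
            ok, outcome = executor.execute(task)
            memory.remember(task, outcome)
            if ok:
                results.append(outcome)
                break
        else:  # runs only if every attempt failed
            raise RuntimeError(f"gave up on {task!r} after {max_attempts} attempts")
    return results


memory = Memory()
workers = [ExecutorAgent("exec-a", transient_failures=1), ExecutorAgent("exec-b")]
for line in orchestrate("quarterly risk review", PlannerAgent(), workers, memory):
    print(line)
```

Keeping the planner separate from the executors means an objective can be re-planned without changing how individual tasks run, and the bounded short-term window keeps recent context small while long-term memory retains the latest outcome of every task.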

The Collaborative Future of AI Agents

The rise of AI agents marks the dawn of a new era in collaborative intelligence, where humans and machines join forces to achieve outcomes previously thought impossible. By leveraging the unique strengths of each, this partnership will redefine productivity, creativity and decision-making, setting the stage for the next chapter of the digital economy. Businesses and individuals who adopt and integrate AI agents today will play a pivotal role in shaping the innovations and ecosystems of tomorrow.

In 2025, AI agents are expected to become indispensable collaborators. By 2026, these systems will operate within the enterprise with their own identity, full memory and autonomy. They will act on our behalf, analyzing vast amounts of information (potentially terabytes of data daily), prioritizing our interests and automating complex workflows. As they become integral to our personal and professional lives, their capacity to learn, adapt and respond dynamically to changing environments will fundamentally transform how we approach challenges and opportunities.

Powered by advances in computing, model innovation and collaborative intelligence, AI agents will transcend their role as tools, take on a distinct operational identity and evolve into proactive partners that enable a smarter, more efficient and more interconnected world.

However, as these agents grow more autonomous and embedded in our daily lives, their expanded capabilities introduce critical security challenges. With full memory and access to sensitive data, potentially involving millions of transactions or interactions each day, they will become high-value targets for malicious actors. Protecting these systems will require robust security frameworks, including advanced authentication methods, privilege management and real-time monitoring capable of analyzing thousands of events per second to detect and respond to emerging threats.
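As an illustration of two of these controls, the Python sketch below combines least-privilege authorization with a sliding-window rate monitor. It assumes each agent has already been authenticated as a distinct machine identity; the agent names, permission strings and thresholds are hypothetical, and a production system would back them with an identity provider and a streaming analytics pipeline rather than in-memory dictionaries.

```python
import time
from collections import deque
from typing import Optional

# Hypothetical scopes: each agent identity is granted only the permissions its job needs.
AGENT_SCOPES = {
    "invoice-agent": {"read:invoices", "approve:invoices"},
    "report-agent": {"read:invoices"},
}


class RateMonitor:
    """Flags an agent whose request count within a sliding time window exceeds a limit."""

    def __init__(self, max_events: int, window_seconds: float):
        self.max_events = max_events
        self.window_seconds = window_seconds
        self.events = {}  # agent id -> deque of recent request timestamps

    def record(self, agent_id: str, now: float) -> bool:
        """Record one request and return True if the agent is now over its limit."""
        timestamps = self.events.setdefault(agent_id, deque())
        timestamps.append(now)
        # Drop timestamps that have fallen out of the window.
        while timestamps and timestamps[0] <= now - self.window_seconds:
            timestamps.popleft()
        return len(timestamps) > self.max_events


def authorize(agent_id: str, permission: str, monitor: RateMonitor,
              now: Optional[float] = None) -> tuple[bool, str]:
    """Check least privilege first, then behavioral monitoring, on every request."""
    now = time.monotonic() if now is None else now
    if permission not in AGENT_SCOPES.get(agent_id, set()):
        return False, "denied: outside agent scope"
    if monitor.record(agent_id, now):  # rate-limited requests still count toward the window
        return False, "denied: anomalous request rate"
    return True, "allowed"


monitor = RateMonitor(max_events=2, window_seconds=1.0)
print(authorize("report-agent", "approve:invoices", monitor, now=0.0))
for t in (0.0, 0.1, 0.2):
    print(authorize("invoice-agent", "approve:invoices", monitor, now=t))
```

With `RateMonitor(max_events=2, window_seconds=1.0)`, a third `approve:invoices` request from `invoice-agent` within one second is denied, while `report-agent` can never approve invoices because that permission is outside its scope.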

In this rapidly evolving landscape, the future of AI must be built on a foundation of trust and resilience, ensuring that these powerful agents can operate safely and securely while unlocking their transformative potential.

AI Agents and the Security Imperative

The cybersecurity challenges that autonomous AI agents introduce are new and unprecedented. Because agents with full memory and access to sensitive data are high-value targets for cyberthreats, securing them requires a proactive security posture rather than a reactive one.

Ensuring cybersecurity resilience and trust in these systems will be as important as technological innovation itself.

Noga Shachar Schleyer is the director of AI GTM Strategy at CyberArk.

Editor’s note: For a deeper dive into the transformative impact of AI agents on cybersecurity and automation, listen to CyberArk Labs’ Lavi Lazarovitz on the Security Matters podcast episode “Building Trust in AI Agents.” Lavi shares valuable insights on AI-driven automation, security challenges and the importance of building trust in these systems. The episode is available on most major podcast platforms.